Volume 9, Issue 2 (March & April 2018)

BCN 2018, 9(2): 107-120

Khosrowabadi R. Stress and Perception of Emotional Stimuli: Long-term Stress Rewiring the Brain. BCN 2018; 9 (2) :107-120

URL: http://bcn.iums.ac.ir/article-1-791-en.html

Institute for Cognitive and Brain Sciences, Shahid Beheshti University, Tehran, Iran.

1. Introduction

Long-term mental stress, one of the most important issues in today’s society, causes a variety of health problems. Our cognition, perception, and decision making can also be affected by long-term stress. This paper investigates how stress can influence our perception of external emotional stimuli.

There is an association between mood (e.g., long-term stress), emotional states, and homeostatic changes (Craig, 2003). When a stimulus is perceived, the brain initiates a reverse course of actions that releases different biochemical compounds throughout the body to bring the body back into a balanced state. A balanced state is a metabolic equilibrium between stimulating and tranquilizing chemical forces in the body. If either force dominates the other without relief, an ongoing state of internal imbalance is experienced. This condition, an ongoing and unrelieved imbalance between stimulating and tranquilizing biochemicals, is known as stress, and it can have serious consequences for brain cells. Stress can be acute (short-term) or long-term. Acute stress is usually not a health risk; in contrast, long-term stress causes a wide variety of health problems (Lundberg, 2008). Because long-term stress lasts longer than emotional states (Ekman, 1999), the resulting homeostatic instability can be considered a baseline on which emotional states arise. This homeostatic instability can potentially affect a subject’s emotional perception.

In this study, the long-term mental stress level of each subject was estimated with the 14-item Perceived Stress Scale (PSS-14) (Cohen, Kamarck, & Mermelstein, 1983). Each item asks how often the subject has experienced a certain stressful situation during the last month. Each item is scored from 0 to 4, so total scores range from 0 to 56, and a higher score corresponds to a higher level of mental stress. However, the scale has no established cut-off score, so comparisons are only meaningful within a sample. In this study, the subjects’ PSS-14 scores had a mean of µ=24 and a standard deviation of σ=6.7. Subjects with a score lower than µ-σ/2=21 were considered relatively stress-free, whereas those with a score higher than µ+σ/2=28 were considered to have long-term stress.
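The grouping rule above can be sketched as follows. This is a minimal illustration, not the study’s code: it assumes the item scores are already coded 0 to 4 (with any reverse-keyed items recoded beforehand), uses the sample standard deviation (the text does not say which one), and leaves scores between the two bounds unassigned, since the text only defines the two outer groups.

```python
import statistics


def pss14_total(item_scores):
    """Total PSS-14 score from 14 item scores already coded 0-4.

    Any reverse-keyed items are assumed to have been recoded beforehand.
    """
    if len(item_scores) != 14:
        raise ValueError("PSS-14 has exactly 14 items")
    if any(not 0 <= s <= 4 for s in item_scores):
        raise ValueError("each item is scored from 0 to 4")
    return sum(item_scores)  # always within 0..56


def split_by_half_sd(totals):
    """Label totals below mu - sigma/2 'stress-free' and above mu + sigma/2 'stressed'.

    Totals in between get None (left out of both groups, an assumption).
    The sample standard deviation is used; the text does not say which one.
    """
    mu = statistics.mean(totals)
    sigma = statistics.stdev(totals)
    low, high = mu - sigma / 2, mu + sigma / 2
    labels = []
    for total in totals:
        if total < low:
            labels.append("stress-free")
        elif total > high:
            labels.append("stressed")
        else:
            labels.append(None)
    return labels, (low, high)
```

For the hypothetical totals [14, 20, 24, 24, 28, 34], the bounds come out at about 20.6 and 27.4, so the first two subjects are labeled stress-free, the last two stressed, and the two scores of 24 stay unassigned.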

Furthermore, emotions are psychophysiological phenomena associated with a wide variety of subjective feelings and observable behaviors. In general, the perception of an emotional stimulus comprises a cognitive process, a subjective feeling, and physiological reactions. Subsequently, an expression in response to the stimulus is produced by a series of chemical releases and reactions (Baumgartner, Esslen, & Jäncke, 2006). Physiological changes due to either perception or expression are detectable (Khosrowabadi, Hiok Chai, Wahab, & Kai Keng, 2010; Olofsson, Nordin, Sequeira, & Polich, 2008), and studies have shown that EEG can also reveal them (Farquharson, 1942). To find EEG signatures related to the perception of an emotional stimulus, the subject’s feeling about the presented stimulus must be identified. Therefore, a method is needed to distinguish the subjects’ emotional states.

Theories of emotion have suggested a number of basic emotions, defined as emotions that are shared across cultures and were preserved by natural selection because they were necessary tools for survival (Ekman, 1999; Ortony & Turner, 1990). Commonly accepted basic emotions are happiness, sadness, fear, anger, surprise, and disgust, and complex emotions are formed by combinations of these basic emotions. However, there is still no coherent definition of basic emotions. To overcome this issue, it has been suggested that emotions be categorized by their valence and arousal levels (Ekman, 1999; Russell, 1980).

In this dimensional representation, valence varies from unpleasant to pleasant, and arousal varies from low (calm) to a high level of excitement. Basic emotions can also be differentiated in the valence–arousal plane. Accordingly, four types of emotional stimuli were investigated in this study: positive and negative stimuli, each with either a low or a high level of excitement. However, different subjects may experience different emotions when exposed to the same stimulus. Therefore, the Self-Assessment Manikin (SAM) questionnaire (Lang, 1980) was used to capture each subject’s actual feelings, and the SAM responses were then used to categorize the EEG features.

In this study, differentiable connectivity patterns of emotional states were investigated. The connectivity patterns between eight brain regions were estimated from EEG data. Our previous studies have shown that connectivity-based features outperform other existing feature extraction methods for recognizing emotions from EEG signals. The extracted features were then labeled by the subjects’ SAM responses, and the EEG features correlated with emotional states were classified in a supervised manner. A significant change in the accuracy of the recognition system was observed after the subjects were divided into stressed and stress-free groups. Hence, it was hypothesized that long-term stress disrupts patterns of connectivity between brain regions. To investigate this, the possibility of recognizing the long-term stress level from EEG data was explored: the participating subjects were classified into stressed and stress-free groups based on their EEG data acquired in the resting state (eyes-closed condition). The resting-state results also confirmed that long-term stress disrupts patterns of connectivity among different brain regions. Interestingly, this effect on neural activity was detectable in EEG signals acquired in the eyes-closed condition.

The remainder of this paper is structured as follows. Section 2 describes the experimental protocol. Section 3 discusses the EEG signal processing, feature extraction, and classification methods. Section 4 presents the experimental results, and Section 5 concludes the paper.

1.1. Experimental design

This section describes the experimental design used to collect EEG correlates of emotions.

1.2. Emotion elicitation

Studies have shown that the elicitor (subject-elicited vs. event-elicited), the setting (controlled laboratory conditions vs. the real world), the focus (expression vs. perception), and subject awareness (open vs. hidden recording) are factors that can influence emotion elicitation results (Picard, Vyzas, & Healey, 2001). The subject-elicited category refers to instructing the subject to express a specific emotion (for example, to mimic the facial expression of happiness) or to recall past emotional episodes. The event-elicited category refers to the use of images, sounds, video clips, or other emotionally evocative stimuli. The International Affective Picture System (IAPS) (Lang, Bradley, & Cuthbert, 2005), the International Affective Digitized Sounds (IADS) (Bradley, Lang, University of Florida, & National Institute of Mental Health, 1999), Bernard Bouchard’s synthesized musical clips (Vieillard et al., 2008), and Gross and Levenson’s movie clips (Gross & Levenson, 1995) are standard data sets used to elicit emotions. Although touch, smell, and taste are also known to influence human emotions, they are less studied in the literature (Kulish, Sourin, & Sourina, 2007).

Therefore, in this study, a combination of arousing pictures from IAPS and Bernard Bouchard’s synthesized musical excerpts was used to elicit emotions. The stimuli in these data sets had been evaluated by experts together with the average judgments of several people. However, the actual emotional feeling elicited by a stimulus may differ from one subject to another based on personal experience. Therefore, even though predefined evaluation labels were available, a self-assessment questionnaire was also used: the subjects were asked to rate their feelings about each presented stimulus on a SAM questionnaire (Figure 1). After the emotional stimuli were selected, a strategy for the sequence and duration of their presentation had to be chosen.

1.3. Experimental protocol

The duration of affective stimuli is mainly defined by the category of study. Three major categories can be listed: 1) full-blown emotions that last from several seconds to minutes, 2) moods that last from several minutes to hours, and 3) emotional disorders that last from several years to an entire lifetime (Cowie & Cornelius, 2003). This study focuses on full-blown emotions, and the emotional stimuli were presented for one minute each in a counterbalanced, random order. Ideally, an emotion recognition system should be able to discriminate emotional states from EEG as quickly as possible (Coan & Allen, 2004). Therefore, a window length of 2 s was used to process the acquired EEG data. Figure 2 shows the protocol of stimulus presentation.

The EEG data were collected while the subject was seated in a comfortable chair in a lit, temperature-controlled room. Prior to the experiment, the procedure was explained to the subject in the registration room, and the subject was asked to fill in a consent form, the PSS-14, and a handedness questionnaire (Oldfield, 1971). Then, 8 Ag/AgCl electrodes and a reference electrode (Cz) were attached bilaterally to the subject’s scalp according to the 10/20 system of electrode placement. Next, EEG data were collected over a 10-min period comprising 5 min in the eyes-closed condition, 1 min in the eyes-open condition, and 1 min for each of the 4 emotional stimuli, which differed in their levels of arousal and valence as explained in the previous section. The visual stimuli were displayed on a 19-inch monitor positioned 1 m from the participant’s eyes, and the audio stimuli were played through speakers at a constant output power. The four emotional stimuli were presented to each participant in random order, with the sequences counterbalanced across participants, and every subject was exposed to every stimulus category.
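The text does not state how the sequences were counterbalanced. One common scheme for four conditions is a balanced (Williams) Latin square, which the sketch below assumes; the stimulus labels are hypothetical. It also checks the session arithmetic from the paragraph above: 5 + 1 + 4 × 1 = 10 min, and 60 s at 250 Hz gives the 15000 samples per single trial used in the feature extraction section.

```python
FS = 250  # Hz, sampling rate reported in the text
# Hypothetical labels for the four valence-arousal stimulus categories.
STIMULI = ["positive/high", "positive/low", "negative/high", "negative/low"]
# Session layout from the text, in minutes.
SESSION_MIN = {"eyes_closed": 5, "eyes_open": 1, "stimuli": len(STIMULI) * 1}


def balanced_latin_square(n):
    """Williams-style balanced Latin square for an even number of conditions.

    Row k is the presentation order for subject k (mod n): every condition
    appears once in each position, and every ordered pair of consecutive
    conditions occurs exactly once across the rows.
    """
    if n % 2:
        raise ValueError("this simple construction requires an even n")
    first, low, high = [0], 1, n - 1
    while len(first) < n:
        # first row alternates 0, 1, n-1, 2, n-2, ...
        if len(first) % 2:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
    # each later row shifts every condition index by one
    return [[(c + r) % n for c in first] for r in range(n)]


def presentation_order(subject_index):
    """Stimulus order for a subject, cycling through the square's rows."""
    row = balanced_latin_square(len(STIMULI))[subject_index % len(STIMULI)]
    return [STIMULI[k] for k in row]
```

With 26 subjects and four rows, the rows cannot be used equally often (two rows get seven subjects, two get six), so the balance holds only approximately at the group level.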

The EEG was recorded using the BIMEC device (Brainmarker BV, The Netherlands) at a sampling rate of 250 Hz. The impedance of the recording electrodes was checked for each subject prior to data collection and kept below 10 kΩ.

1.4. EEG correlates of emotion

As explained before, the valence–arousal plane was used to label the EEG data in this study, with the subjects’ responses to the SAM questionnaires serving as the labels for the EEG features.
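A minimal sketch of this labeling step follows. It assumes 9-point SAM ratings (1 to 9) split at the midpoint 5; the text states neither the scale nor the boundary, and ratings that fall exactly on the midpoint are treated here as ambiguous and dropped, which is also an assumption.

```python
def sam_quadrant(valence, arousal, midpoint=5):
    """Map SAM valence/arousal ratings to a valence-arousal quadrant label.

    Assumes a 9-point SAM scale split at its midpoint; a rating exactly
    at the midpoint is ambiguous and returns None.
    """
    if valence == midpoint or arousal == midpoint:
        return None
    v = "positive" if valence > midpoint else "negative"
    a = "high" if arousal > midpoint else "low"
    return f"{v}/{a}"


def label_features(features, ratings):
    """Pair each feature vector with the quadrant of its trial's SAM rating,
    dropping trials whose rating falls on the midpoint."""
    labeled = []
    for vec, (valence, arousal) in zip(features, ratings):
        label = sam_quadrant(valence, arousal)
        if label is not None:
            labeled.append((vec, label))
    return labeled
```

Because the label comes from the subject’s own rating rather than from the stimulus’s normative rating, two subjects who saw the same stimulus can end up with different labels, which is the point of using SAM here.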

1.5. Subjects

Statistically, a larger sample size increases the precision of estimation. Therefore, EEG data were collected from 26 healthy university students (all right-handed, 18–30 years old, 20 male). Preparing for exams during the examination period was considered a stressful situation: EEG data were acquired from 15 subjects during the examination period and from 11 subjects 2 weeks after their last exam. However, the PSS-14 scores, rather than the recording period, were used to categorize subjects into stressed and stress-free groups. It has been shown that long-term stress can impair a subject’s ability to flexibly shift attention by reducing connectivity to an attention-regulating area of the prefrontal cortex (Arnsten, 2009). Such impairment can potentially influence the cognitive processes involved in perceiving emotional stimuli. Given how long long-term mental stress persists, this effect should also be detectable in the resting state, as explained in the next section.

2. Methods

This section describes the methodology used to classify EEG correlates of emotion in this study. Figure 3 shows the EEG processing protocol. In the first stage, the EEG data were normalized to the amplitude range of 0 to 1. This scaling helps remove the effects of recording conditions across subjects while leaving the connectivity features unchanged. Since EEG contains a lot of noise and artifacts from bodily movements and eye blinks, noise was then removed using a band-pass filter in the range of 4 to 32 Hz. After preprocessing, features were extracted from the EEG data.
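The two preprocessing steps can be sketched with NumPy and SciPy as below. The text does not specify the filter design or whether scaling is per channel, so a zero-phase 4th-order Butterworth band-pass (`scipy.signal.butter` with `sosfiltfilt`) and per-channel min-max scaling are assumptions made for illustration.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 250.0  # Hz, sampling rate reported in the text


def minmax_normalize(eeg):
    """Scale each channel (row) of a channels x samples array to [0, 1]."""
    eeg = np.asarray(eeg, dtype=float)
    lo = eeg.min(axis=1, keepdims=True)
    hi = eeg.max(axis=1, keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero on flat channels
    return (eeg - lo) / span


def bandpass_4_32(eeg, fs=FS, order=4):
    """Zero-phase Butterworth band-pass between 4 and 32 Hz along the time axis.

    Filter type and order are assumptions; the text only gives the band edges.
    """
    sos = butter(order, [4.0, 32.0], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def preprocess(eeg, fs=FS):
    """Normalize first, then band-pass, in the order the text describes."""
    return bandpass_4_32(minmax_normalize(eeg), fs=fs)
```

Note that the band-pass removes the constant offset introduced by min-max scaling, so the filtered output is centered near zero rather than confined to [0, 1]; forward-backward filtering is chosen here so that the filter adds no phase lag between channels, which matters for phase-based connectivity measures.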

2.1. Feature extraction

The challenging task is to extract distinctive features from EEG that correlate with the subject’s emotional states. In the literature, techniques for extracting features from EEG correlates of emotional states include event-related synchronization or desynchronization, event-related potentials, visual evoked potentials, and quantitative EEG (Aftanas, Varlamov, Pavlov, Makhnev, & Reva, 2001; Coan & Allen, 2004; Frantzidis et al., 2010; Kemp, Gray, Eide, Silberstein, & Nathan, 2002; Olofsson et al., 2008; Petrantonakis & Hadjileontiadis, 2010). Studies have also shown that cortical–subcortical interactions play an important role in the perception of emotional stimuli (Kober et al., 2008; Lewis & Todd, 2007; Swanson, 2003).

Therefore, brain connectivity features are expected to be highly informative. In addition, our previous studies have shown that emotional states can be accurately recognized using such features extracted from EEG data. Therefore, several connectivity measures were used in this study to categorize EEG data collected in different emotional states: the phase slope index (Nolte et al., 2008), the directed transfer function (Korzeniewska, Manczak, Kaminski, Blinowska, & Kasicki, 2003), and generalized partial directed coherence (Baccald & de Medicina, 2007).

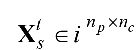

Mathematical notations used to extract connectivity features are explained here. Inputs, outputs, related variables and functions are presented as follows.

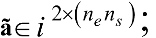

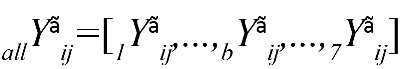

denotes the multi-channel, multi-subject, and single-trial EEG data that are correlated to the valence and arousal

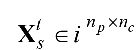

denotes the multi-channel, multi-subject, and single-trial EEG data that are correlated to the valence and arousal  ; whereby nc denotes the number of EEG channels, np denotes the number of time samples per single-trial EEG, ns refers to the number of subjects, and ne is the number of categories of emotional stimuli.

; whereby nc denotes the number of EEG channels, np denotes the number of time samples per single-trial EEG, ns refers to the number of subjects, and ne is the number of categories of emotional stimuli.

, whereby

, whereby  denotes the tth single-trial EEG data from the sth subject also 1≤t≤ne and 1≤s≤ns. The numbers nc, ne, ns, np are respectively fixed at 8, 4, 26, 15000 in this study.

denotes the tth single-trial EEG data from the sth subject also 1≤t≤ne and 1≤s≤ns. The numbers nc, ne, ns, np are respectively fixed at 8, 4, 26, 15000 in this study.

, whereby

, whereby  denotes a vector that represents the EEG time series from the ith channel and , whereby xn denotes the nth sample. Note that a simplified notation of xn was used to

denotes a vector that represents the EEG time series from the ith channel and , whereby xn denotes the nth sample. Note that a simplified notation of xn was used to denote in the remainder of this paper.

denote in the remainder of this paper.

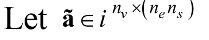

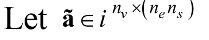

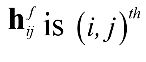

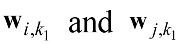

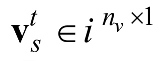

denotes the extracted features that are correlated to

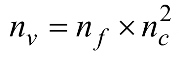

denotes the extracted features that are correlated to  , whereby nv denotes the number of features extracted for a particular feature extraction method.

, whereby nv denotes the number of features extracted for a particular feature extraction method.

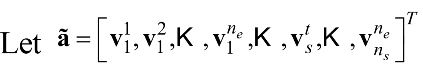

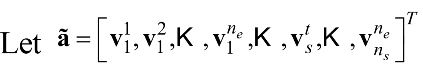

whereby ,

whereby ,  ,

,  and vk denotes the kth feature.

and vk denotes the kth feature.

The basic functions applied to extract the features in this study include rectangular window, autocorrelation, cross correlation, discrete Fourier transform, power spectral density, and cross power spectral density. The mathematical notations of these functions are given as follows:

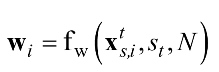

let , , whereby

, , whereby  , denotes a vector that represents the windowed time series EEG signal from the ith channel.

, denotes a vector that represents the windowed time series EEG signal from the ith channel.

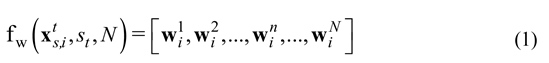

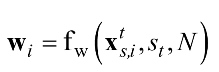

Let denotes the rectangular window function of given by Equation (1).

denotes the rectangular window function of given by Equation (1).

, where N denotes the window length, st refers to the start point, and denotes the nth sample of the windowed signal that is the sample of the ith channel of the EEG data. This study used a sampling frequency Fs=250 Hz, a window length N=512, and a starting point St=500 (2 s).

sample of the ith channel of the EEG data. This study used a sampling frequency Fs=250 Hz, a window length N=512, and a starting point St=500 (2 s).
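Equation (1) is a plain slice of each channel. The sketch below implements it with NumPy and, as an assumption about how windows are chained through a 1-min trial, treats $st = 500$ (2 s) as the spacing between consecutive window starts; with $N = 512$ this gives windows that overlap by 12 samples. The function names are illustrative, not from the paper.

```python
import numpy as np


def rect_window(x, st, n_win=512):
    """Equation (1): w_n = x_{n+st} for 0 <= n <= N-1, applied to every channel (row)."""
    x = np.asarray(x)
    if st < 0 or st + n_win > x.shape[-1]:
        raise ValueError("window exceeds the recording")
    return x[..., st:st + n_win]


def sliding_windows(x, n_win=512, hop=500):
    """Stack consecutive rectangular windows whose start points are `hop` samples apart.

    Using st = 500 as the hop between windows is an assumption.
    Returns an array of shape (n_windows, channels, n_win).
    """
    n_samples = np.asarray(x).shape[-1]
    starts = range(0, n_samples - n_win + 1, hop)
    return np.stack([rect_window(x, s, n_win) for s in starts])
```

For one 8-channel trial of 15000 samples, `sliding_windows` returns 29 windows (start points 0, 500, ..., 14000), each of shape 8 × 512.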

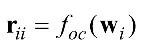

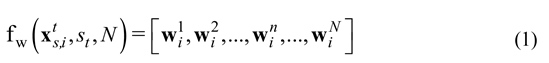

Let denotes the autocorrelation function and

denotes the autocorrelation function and  denotes the cross correlation function between the ith and the jth channel, whereby

denotes the cross correlation function between the ith and the jth channel, whereby and

and . Since wi and wj are stationary with the same length, the autocorrelation and the cross correlation functions for l=0, …, N-1 are respectively given by equations (2) and (3).

. Since wi and wj are stationary with the same length, the autocorrelation and the cross correlation functions for l=0, …, N-1 are respectively given by equations (2) and (3).

, where denotes the complex conjugate.

denotes the complex conjugate.
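Equations (2) and (3) can be evaluated directly as below. This is a sketch that assumes the unnormalized sums written above (no division by $N$ or $N-l$); it can be cross-checked against `numpy.correlate` in `"full"` mode, whose output holds lag $l$ at index $N-1+l$.

```python
import numpy as np


def crosscorr(wi, wj):
    """Equation (3): r_ij(l) = sum_{n=0}^{N-1-l} wi[n+l] * conj(wj[n]), for l = 0..N-1."""
    wi = np.asarray(wi)
    wj = np.asarray(wj)
    n = len(wi)
    if len(wj) != n:
        raise ValueError("windows must have the same length")
    # wi[l:] holds wi[n+l] and wj[:n-l] holds wj[n] for n = 0..N-1-l
    return np.array([np.sum(wi[l:] * np.conj(wj[:n - l])) for l in range(n)])


def autocorr(w):
    """Equation (2): the autocorrelation is the cross correlation of w with itself."""
    return crosscorr(w, w)
```

For example, `crosscorr([1, 2, 3], [0, 1, 0.5])` gives `[3.5, 3.0, 0.0]`, and `autocorr(w)[0]` is the energy of the window.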

Let $\mathcal{F}(\cdot)$ denote the Discrete Fourier Transform (DFT), whereby $\mathbf{Z}_i = \mathcal{F}(\mathbf{w}_i)$ transforms the windowed EEG signal $\mathbf{w}_i$ from the time domain to the frequency domain. Let $Z_{f,i}$ denote the $f$th sample of $\mathbf{Z}_i$, given by Equation (4):

$$Z_{f,i} = \sum_{n=0}^{N-1} w_{i,n}\, e^{-J 2\pi f n / N}, \tag{4}$$

where $f = 0, \ldots, N-1$, and $J$ is the imaginary unit.
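As a check on Equation (4), the sum can be evaluated as a matrix-vector product and compared with `numpy.fft.fft`, which uses the same sign convention. The direct form costs $O(N^2)$ and is shown only to make the formula concrete; in practice an FFT would be used. The helper converting a bin index to hertz is an illustrative addition.

```python
import numpy as np


def dft(w):
    """Equation (4): Z_f = sum_{n=0}^{N-1} w_n exp(-J 2 pi f n / N), for f = 0..N-1.

    Direct O(N^2) evaluation, for illustration only.
    """
    w = np.asarray(w, dtype=complex)
    n_len = len(w)
    n = np.arange(n_len)
    f = n.reshape(-1, 1)  # one row of the exponential matrix per frequency bin
    return (w * np.exp(-2j * np.pi * f * n / n_len)).sum(axis=1)


def bin_frequency_hz(f, n_len=512, fs=250.0):
    """Physical frequency of DFT bin f for window length n_len and sampling rate fs."""
    return f * fs / n_len
```

With $N = 512$ and $F_s = 250$ Hz, adjacent bins are 250/512 ≈ 0.49 Hz apart, so the 4 to 32 Hz pass band spans roughly 57 bins.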

Let $\mathbf{P}_i$ denote the Power Spectral Density (PSD) of $\mathbf{w}_i$, whereby $\mathbf{P}_i$ is the DFT of the autocorrelation function $\mathbf{r}_i$, and let $\mathbf{P}_{ij}$ denote the Cross Power Spectral Density (CPSD) of $\mathbf{w}_i$ and $\mathbf{w}_j$, whereby $\mathbf{P}_{ij}$ is the DFT of the cross correlation function $\mathbf{r}_{ij}$. The PSD and the CPSD are respectively given by Equations (5) and (6):

$$P_{f,i} = \sum_{l=0}^{N-1} r_i(l)\, e^{-J 2\pi f l / N}, \tag{5}$$

$$P_{f,ij} = \sum_{l=0}^{N-1} r_{ij}(l)\, e^{-J 2\pi f l / N}. \tag{6}$$
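Equations (5) and (6) apply the DFT to the correlation sequences. In the sketch below (self-contained, so it recomputes the lags with `numpy.correlate` rather than reusing any earlier helper), the lag-domain sequence for $l = 0, \ldots, N-1$ is taken from the `"full"` correlation output and then transformed with `numpy.fft.fft`, which matches the sign convention of Equation (4).

```python
import numpy as np


def corr_lags(wi, wj):
    """r_ij(l) for l = 0..N-1 as in Equations (2)-(3).

    np.correlate's 'full' output holds lag l at index N-1+l.
    """
    n = len(wi)
    return np.correlate(wi, wj, mode="full")[n - 1:]


def psd(wi):
    """Equation (5): DFT of the autocorrelation r_i."""
    return np.fft.fft(corr_lags(wi, wi))


def cpsd(wi, wj):
    """Equation (6): DFT of the cross correlation r_ij."""
    return np.fft.fft(corr_lags(wi, wj))
```

Because only non-negative lags enter the sums, the spectra produced this way are complex-valued in general, even for the PSD; the zero-frequency bin is simply the sum of the correlation sequence.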

Welch’s method, also known as the averaged periodogram method, estimates the PSD of the entire input signal. It divides the time signal into successive (possibly overlapping) blocks, applies a window to each block, and averages the squared-magnitude DFTs of the blocks (Welch, 1967).
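A minimal NumPy sketch of Welch’s method follows, assuming a Hann window, 50% overlap, removal of each segment’s mean, and one-sided density scaling; the text does not give these settings. For production use, `scipy.signal.welch` provides a tested implementation with `fs`, `nperseg`, and `noverlap` parameters.

```python
import numpy as np


def welch_psd(x, fs=250.0, nperseg=256, overlap=0.5):
    """Welch PSD estimate of a 1-D signal.

    Splits x into overlapping Hann-windowed segments, averages their
    squared-magnitude DFTs, and returns a one-sided density (units^2 / Hz).
    Assumes an even nperseg so the last rfft bin is the Nyquist bin.
    """
    x = np.asarray(x, dtype=float)
    step = int(nperseg * (1 - overlap))
    win = np.hanning(nperseg)
    scale = 1.0 / (fs * np.sum(win ** 2))
    segments = [x[s:s + nperseg] for s in range(0, len(x) - nperseg + 1, step)]
    if not segments:
        raise ValueError("signal shorter than one segment")
    spectra = [np.abs(np.fft.rfft(win * (seg - seg.mean()))) ** 2 for seg in segments]
    pxx = np.mean(spectra, axis=0) * scale
    pxx[1:-1] *= 2  # fold negative frequencies into the one-sided estimate
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, pxx
```

Averaging over segments trades frequency resolution (fs / nperseg) for a lower-variance estimate; with `nperseg=250` at 250 Hz, the bins fall on whole hertz, and a 10 Hz sine produces its peak at the 10 Hz bin.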

2.2. Directed transfer function

The Directed Transfer Function (DTF) is a method that simultaneously takes all channels of the process into account to estimate the activity flow in a given direction as a function of frequency, based on the concept of Granger causality (Kaminski & Blinowska, 1991). DTF is closely related to spectral G-causality. The EEG time series from channel i ($\mathbf{w}_i$) is said to Granger-cause the time series from channel j ($\mathbf{w}_j$) if statistical tests (normally F-tests) on lagged values of $\mathbf{w}_i$ and $\mathbf{w}_j$ show that the lagged values of $\mathbf{w}_i$ provide statistically significant information about future values of $\mathbf{w}_j$ beyond what the lagged values of $\mathbf{w}_j$ alone provide. This is fundamental for effective connectivity, and the important point is that this predictability improvement is not reciprocal, i.e., $\mathbf{w}_i$ may Granger-cause $\mathbf{w}_j$ without $\mathbf{w}_j$ necessarily Granger-causing $\mathbf{w}_i$.
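The time-domain idea behind this test can be sketched as a bivariate comparison of two least-squares models: a restricted model predicting the effect channel from its own $p$ lags, and a full model that also includes $p$ lags of the candidate cause. This is a simplified illustration, not the multivariate, frequency-domain DTF itself; the lag order $p = 2$ and the function names are assumptions.

```python
import numpy as np


def _lagged(y, p):
    """Design columns y[n-1], ..., y[n-p] for samples n = p..T-1."""
    return np.column_stack([y[p - k:len(y) - k] for k in range(1, p + 1)])


def granger_f(cause, effect, p=2):
    """F statistic for H0: the p lags of `cause` add nothing to predicting `effect`."""
    cause = np.asarray(cause, dtype=float)
    effect = np.asarray(effect, dtype=float)
    target = effect[p:]
    ones = np.ones((len(target), 1))
    restricted = np.hstack([ones, _lagged(effect, p)])  # own past only
    full = np.hstack([restricted, _lagged(cause, p)])    # own past + cause's past
    coef_r = np.linalg.lstsq(restricted, target, rcond=None)[0]
    coef_f = np.linalg.lstsq(full, target, rcond=None)[0]
    rss_r = np.sum((target - restricted @ coef_r) ** 2)
    rss_f = np.sum((target - full @ coef_f) ** 2)
    dof = len(target) - full.shape[1]
    return ((rss_r - rss_f) / p) / (rss_f / dof)
```

If one signal is driven by the lagged values of another, `granger_f(driver, driven)` is large while `granger_f(driven, driver)` stays near 1, which illustrates the non-reciprocity described above. A p-value would follow from the F distribution with `p` and `dof` degrees of freedom.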

The simplest way to exploit the idea of Granger causality is linear prediction of future values of the multivariate data with an $m$th-order Multivariate AutoRegressive (MVAR) model. The data can therefore be modeled by Equation (7),

$$\mathbf{w}(n) = \sum_{r=1}^{m} \mathbf{A}_r \, \mathbf{w}(n-r) + \mathbf{e}(n) \tag{7}$$

The terms in Equation (7) are defined as follows:

- $\mathbf{e}$ is the innovation process, a zero-mean Gaussian noise with an estimated covariance matrix $\Sigma$.
- Each vector $\mathbf{w}(n)$ and $\mathbf{e}(n)$ is an element of $\mathbf{w}$ and $\mathbf{e}$, respectively.
- The $\mathbf{A}_r$ are the coefficient matrices, each corresponding to a specific lag $r$.
- $m$ is the order of the AR model.

The diagonal elements of $\Sigma$ measure the remaining error when the future values of $w_j$ are modeled simultaneously with all the time series values.

To analyze the EEG signal, frequency decompositions are often of interest. Geweke (1982) therefore proposed using Fourier methods to examine G-causality in the spectral domain. Transforming Equation (7) to the frequency domain gives Equation (9),

$$\mathbf{A}(f) \, \mathbf{W}(f) = \mathbf{E}(f) \tag{9}$$

where $\mathbf{W}(f)$ and $\mathbf{E}(f)$ are the Fourier transforms of $\mathbf{w}$ and $\mathbf{e}$, and $\mathbf{A}(f)$ is given in Equation (10).

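By recasting Equation (9) into the transfer function format, we obtain $\mathbf{W}(f) = \mathbf{H}(f) \, \mathbf{E}(f)$, where the transfer function (prediction error filter representation) is given by Equation (10),

$$\mathbf{H}(f) = \mathbf{A}^{-1}(f), \qquad \mathbf{A}(f) = -\sum_{r=0}^{m} \mathbf{A}_r \, e^{-J 2\pi f r / F_s} \tag{10}$$

where $\mathbf{A}_0 = -\mathbf{I}$, $\mathbf{I}$ is the identity matrix, and $F_s$ is the sampling frequency. Therefore, the elements of $\mathbf{A}(f)$ are given by Equation (11),

$$\bar{A}_{ij}(f) = \delta_{ij} - \sum_{r=1}^{m} a_{ij}(r) \, e^{-J 2\pi f r / F_s} \tag{11}$$

where $\delta_{ij}$ is the Kronecker delta and $a_{ij}(r)$ denotes the coefficients that describe the relationship between the present of time series $w_i$ and the past of $w_j$.

The DTF, which represents the causal influence from channel $j$ to channel $i$, is defined by Equation (12),

$$\theta_{ij}^{2}(f) = \lvert H_{ij}(f) \rvert^{2} \tag{12}$$

where $H_{ij}(f)$ is an element of $\mathbf{H}(f)$. The normalized DTF is given by Equation (13),

$$\gamma_{ij}^{2}(f) = \frac{\lvert H_{ij}(f) \rvert^{2}}{\sum_{k=1}^{n_c} \lvert H_{ik}(f) \rvert^{2}} \tag{13}$$

where $n_c$ is the number of EEG channels and $\mathbf{H}(f)$ is estimated using Equation (10).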
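Given estimated coefficient matrices $\mathbf{A}_r$, Equations (10), (11) and (13) can be evaluated directly. The sketch below is an illustration under assumptions, not the BioSig implementation. It builds $\mathbf{A}(f)$, inverts it with a small hand-written Gauss-Jordan routine to obtain $\mathbf{H}(f)$, and row-normalizes $\lvert H_{ij}(f) \rvert^2$, so that `dtf[i][j]` is the normalized flow from channel j to channel i. The helper names and the coefficient values in the usage example are hypothetical.

```python
import cmath
import math


def mat_inv(m):
    """Invert a small complex square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [list(row) + [complex(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                fac = aug[r][col]
                aug[r] = [v - fac * c for v, c in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def a_of_f(coeffs, f, fs):
    """A(f) with elements delta_ij - sum_r a_ij(r) exp(-J*2*pi*f*r/fs), as in Equation (11).

    coeffs[r - 1] is the n_c x n_c coefficient matrix A_r of the MVAR model (Equation (7)).
    """
    n = len(coeffs[0])
    out = [[complex(i == j) for j in range(n)] for i in range(n)]
    for r, a_r in enumerate(coeffs, start=1):
        z = cmath.exp(-2j * math.pi * f * r / fs)
        for i in range(n):
            for j in range(n):
                out[i][j] -= a_r[i][j] * z
    return out


def normalized_dtf(coeffs, f, fs):
    """gamma_ij^2(f) = |H_ij(f)|^2 / sum_k |H_ik(f)|^2 with H(f) = A(f)^-1 (Equations (10), (13))."""
    h = mat_inv(a_of_f(coeffs, f, fs))
    result = []
    for row in h:  # row i collects all inflows to channel i (the receiver)
        denom = sum(abs(v) ** 2 for v in row)
        result.append([abs(v) ** 2 / denom for v in row])
    return result


# Usage: a hypothetical 2-channel MVAR(1) in which channel 0 drives channel 1 (a_10 = 0.4).
coeffs = [[[0.5, 0.0],
           [0.4, 0.5]]]
dtf = normalized_dtf(coeffs, f=10.0, fs=250.0)
print(dtf[1][0] > 0.0, dtf[0][1])  # flow 0 -> 1 is present; flow 1 -> 0 is zero
```

Because each row is divided by the total inflow to the receiving channel, every row of `dtf` sums to 1.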

To estimate the $\mathbf{A}_r$, Equation (7) is multiplied by $\mathbf{w}^{T}(n-k)$ and the expectation is taken. This yields the Yule-Walker equations shown in Equation (14),

$$\mathbf{R}(k) = \sum_{r=1}^{m} \mathbf{A}_r \, \mathbf{R}(k-r), \qquad k = 1, \dots, m \tag{14}$$

where $\mathbf{R}(k) = E\big[\mathbf{w}(n) \, \mathbf{w}^{T}(n-k)\big]$, $\mathbf{R}(-k) = \mathbf{R}^{T}(k)$, and $\mathbf{w}^{T}$ denotes the transpose of $\mathbf{w}$.

Two facts are used in this derivation:

- $E\big[\mathbf{e}(n) \, \mathbf{w}^{T}(n-k)\big] = 0$ for $k \ge 1$, because the perturbation at the current time is unrelated to previous values of the process.
- The $\mathbf{A}_r$ are kept outside the expectation operator because they are deterministic rather than statistical quantities.

In this study, the model was estimated by partial correlation estimation using unbiased covariance estimates (Schlögl, 2006).
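The Yule-Walker system in Equation (14) can be solved by stacking it as $[\mathbf{R}(1)\ \cdots\ \mathbf{R}(m)] = [\mathbf{A}_1\ \cdots\ \mathbf{A}_m] \, \boldsymbol{\Gamma}$, where block $(r, k)$ of $\boldsymbol{\Gamma}$ is $\mathbf{R}(k - r)$. The sketch below does this on simulated data. It uses plain Yule-Walker with unbiased lag covariances and assumes zero-mean data. It is not the partial correlation estimator used in the study, and the function names and the simulated MVAR(1) coefficients are hypothetical.

```python
import random


def mat_inv(m):
    """Invert a small real square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                fac = aug[r][col]
                aug[r] = [v - fac * c for v, c in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mat_mul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lag_cov(x, lag):
    """Unbiased estimate of R(lag) = E[w(n) w^T(n - lag)]; x[n] is the n_c-channel sample at time n.

    Assumes zero-mean data (no mean is subtracted).
    """
    n_s, n_c = len(x), len(x[0])
    return [[sum(x[t][i] * x[t - lag][j] for t in range(lag, n_s)) / (n_s - lag)
             for j in range(n_c)] for i in range(n_c)]


def yule_walker(x, order):
    """Solve R(k) = sum_r A_r R(k - r), k = 1..order (Equation (14)), for A_1..A_order."""
    n_c = len(x[0])
    cov = [lag_cov(x, lag) for lag in range(order + 1)]

    def r_mat(lag):  # R(-l) = R(l)^T
        return cov[lag] if lag >= 0 else [list(c) for c in zip(*cov[-lag])]

    size = n_c * order
    gamma = [[0.0] * size for _ in range(size)]  # block (r, k) holds R(k - r)
    for rb in range(order):
        for kb in range(order):
            blk = r_mat(kb - rb)
            for i in range(n_c):
                for j in range(n_c):
                    gamma[rb * n_c + i][kb * n_c + j] = blk[i][j]
    rhs = [[r_mat(kb + 1)[i][j] for kb in range(order) for j in range(n_c)] for i in range(n_c)]
    stacked = mat_mul(rhs, mat_inv(gamma))  # [A_1 ... A_m] = [R(1) ... R(m)] Gamma^-1
    return [[row[rb * n_c:(rb + 1) * n_c] for row in stacked] for rb in range(order)]


# Usage: simulate a hypothetical 2-channel MVAR(1) process and recover A_1.
random.seed(0)
true_a1 = [[0.5, 0.0], [0.4, 0.5]]
x = [[0.0, 0.0]]
for _ in range(5000):
    prev = x[-1]
    x.append([sum(true_a1[i][j] * prev[j] for j in range(2)) + random.gauss(0, 1)
              for i in range(2)])
a_hat = yule_walker(x[100:], order=1)[0]  # drop a short burn-in
print([[round(v, 2) for v in row] for row in a_hat])
```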

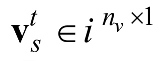

After the normalized DTF is computed for all EEG channels, the feature vector

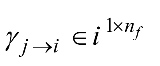

is computed for all EEG channels, the feature vector is given by

is given by  , where the total number of extracted features is

, where the total number of extracted features is  .

.

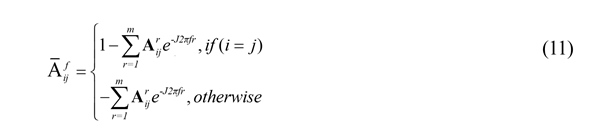

2.3. Generalized partial directed coherence function

In multichannel EEG analysis, negative causality values, which have no physical interpretation, sometimes occur at certain frequencies. This problem can be overcome by using the Partial Directed Coherence (PDC) function. The PDC describes the direction of information flow between multivariate data in the frequency domain. It is based on the decomposition of multivariate partial coherences computed from multivariate autoregressive models. It closely reflects Granger causality by paralleling the definition of Granger causality test estimators. The method factors the classical coherence function (the frequency-domain counterpart of correlation analysis) of a pair of structures into two directed coherences: one represents the feed-forward aspect of the interaction and the other the feedback aspect (Baccald & de Medicina, 2007). In this study, PDC was therefore used to avoid physically meaningless negative connections between pairs of electrodes, with connectivity modeled by a multivariate autoregressive model (Baccald & de Medicina, 2007).

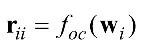

The partial directed coherence from series $j$ to series $i$ at frequency $f$ can be defined using Equation (15),

$$\pi_{ij}(f) = \frac{\bar{A}_{ij}(f)}{\sqrt{\sum_{k=1}^{n_c} \lvert \bar{A}_{kj}(f) \rvert^{2}}} \tag{15}$$

where $\bar{A}_{ij}(f)$ has been defined in Equation (11). Also, $\lvert \pi_{ij}(f) \rvert^{2}$ takes values in the interval [0, 1].

The main difference between DTF and PDC lies in the normalization, as shown in Equations (13) and (15). DTF is normalized with respect to the structure that receives the signal, whereas PDC is normalized with respect to the structure that sends the signal.
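The normalization difference is easiest to see numerically. The following sketch computes $\lvert \pi_{ij}(f) \rvert^2$ from Equations (11) and (15) for a hypothetical two-channel MVAR(1) model. Each column (sending channel) sums to 1, whereas the rows (receiving channels) of a normalized DTF matrix sum to 1. The helper names are assumptions, and the sketch uses the plain PDC form rather than a noise-weighted generalized variant.

```python
import cmath
import math


def a_of_f(coeffs, f, fs):
    """A(f) with elements delta_ij - sum_r a_ij(r) exp(-J*2*pi*f*r/fs), as in Equation (11).

    coeffs[r - 1] is the n_c x n_c coefficient matrix A_r.
    """
    n = len(coeffs[0])
    out = [[complex(i == j) for j in range(n)] for i in range(n)]
    for r, a_r in enumerate(coeffs, start=1):
        z = cmath.exp(-2j * math.pi * f * r / fs)
        for i in range(n):
            for j in range(n):
                out[i][j] -= a_r[i][j] * z
    return out


def pdc_squared(coeffs, f, fs):
    """|pi_ij(f)|^2 = |A_ij(f)|^2 / sum_k |A_kj(f)|^2 (Equation (15)); column j is the sender."""
    abar = a_of_f(coeffs, f, fs)
    n = len(abar)
    col_power = [sum(abs(abar[k][j]) ** 2 for k in range(n)) for j in range(n)]
    return [[abs(abar[i][j]) ** 2 / col_power[j] for j in range(n)] for i in range(n)]


# Usage: hypothetical MVAR(1) in which channel 0 drives channel 1 (a_10 = 0.4, a_01 = 0).
coeffs = [[[0.5, 0.0],
           [0.4, 0.5]]]
pdc = pdc_squared(coeffs, f=10.0, fs=250.0)
col_sums = [sum(pdc[i][j] for i in range(2)) for j in range(2)]
print(col_sums)  # each column (sending channel) sums to 1
```

Unlike DTF, PDC needs no matrix inversion. A zero coefficient $a_{01}$ therefore gives exactly zero PDC from channel 1 to channel 0.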

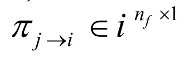

After the normalized PDC is computed for all the EEG channels, the resulting values are collected into the PDC feature vector.

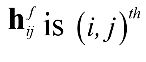

2.4. Phase slope index

The PSI estimates the direction of information flow between pairs of electrodes (more generally, between multivariate data) in the frequency domain. Suppose that information takes a time delay τ to propagate from the ith channel to the jth channel. The phase of the cross spectrum between the two channels is then a function of frequency and time delay (2πfτ), so τ can be estimated from this phase. The PSI is based on the derivative of this phase with respect to f. Compared with Granger causality, the PSI is insensitive to instantaneous mixtures of independent sources and is therefore more robust. It also gives meaningful results when the phase spectrum is not linear, because it properly weights the contributions from different frequencies (Nolte et al., 2008).

The PSI is estimated from the cross spectrum of $w_i$ and $w_j$. To obtain this cross spectrum, the whole data set, for instance $w_i$, is divided into $k_1$ segments of duration $L_T$, as shown in Equation (16), where $w_i^{n_k}$ denotes the $n_k$th sample of $w_i$.

The cross spectral density of the Hanning-windowed segments is then estimated for each frequency $f$ as defined in Equation (17),

$$S_{ij}(f) = \frac{1}{k_1} \sum_{k=1}^{k_1} Z_i^{(k)}(f) \, Z_j^{(k)}(f)^{*} \tag{17}$$

where $Z_i^{(k)}(f)$ denotes the Fourier transform of the $k$th Hanning-windowed segment of $w_i$, computed using Equation (4), and ${}^{*}$ denotes the complex conjugate.

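After the cross spectral density is estimated, the complex coherency is computed using Equation (18),

$$C_{ij}(f) = \frac{S_{ij}(f)}{\sqrt{S_{ii}(f) \, S_{jj}(f)}} \tag{18}$$

Then the PSI, $\tilde{\Psi}_{ij}$, is computed using Equation (19),

$$\tilde{\Psi}_{ij} = \Im\Big( \sum_{f \in F_p} C_{ij}^{*}(f) \, C_{ij}(f + \delta f) \Big) \tag{19}$$

where $\Im$ denotes taking the imaginary part, $\delta f$ is the frequency resolution, and $F_p$ is the set of frequencies over which the slope is summed. Typically, $F_p$ contains all frequencies, but in this study it is restricted to a specified band.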

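To see how $\tilde{\Psi}_{ij}$ corresponds to a meaningful estimate of the average slope, it is convenient to rewrite Equation (19) as Equation (20),

$$\tilde{\Psi}_{ij} = \sum_{f \in F_p} \alpha_{ij}(f) \, \alpha_{ij}(f + \delta f) \, \sin\big(\Phi(f + \delta f) - \Phi(f)\big) \tag{20}$$

where $C_{ij}(f) = \alpha_{ij}(f) \, e^{J \Phi(f)}$, and $\alpha_{ij}(f) = \lvert C_{ij}(f) \rvert$ are frequency-dependent weights. For smooth phase spectra,

$$\sin\big(\Phi(f + \delta f) - \Phi(f)\big) \approx \Phi(f + \delta f) - \Phi(f) \tag{21}$$

Therefore, $\tilde{\Psi}_{ij}$ corresponds to a weighted average of the slope.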

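Finally, as for Granger causality, it is convenient to normalize $\tilde{\Psi}_{ij}$ by an estimate of its standard deviation, as in Equation (22),

$$\Psi_{ij} = \frac{\tilde{\Psi}_{ij}}{\operatorname{std}(\tilde{\Psi}_{ij})} \tag{22}$$

The value of $\operatorname{std}(\tilde{\Psi}_{ij})$ is estimated using the jackknife method, which has been validated in simulations.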
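The steps of Equations (16) to (22) can be combined in a short sketch:

1. split each signal into non-overlapping Hann-windowed segments;
2. average the cross spectrum over the segments;
3. compute the complex coherency;
4. sum the phase slope over neighbouring frequency bins;
5. estimate the standard deviation with a leave-one-segment-out jackknife.

This is an illustration under assumptions, not the toolbox code used in the study. The segment length, the frequency range (given as DFT bin indices), the standard jackknife variance formula and all function names are choices made here. In the usage example, channel j is a delayed, noisy copy of channel i, so a positive $\tilde{\Psi}_{ij}$ (flow from i to j) is expected, and swapping the channels should flip its sign.

```python
import cmath
import math
import random


def segment_spectra(x, seg_len, bins):
    """Hann-windowed DFT (Equation (4)) of consecutive, non-overlapping segments at the given bins."""
    win = [0.5 - 0.5 * math.cos(2 * math.pi * n / seg_len) for n in range(seg_len)]
    twiddle = {f: [cmath.exp(-2j * math.pi * f * n / seg_len) for n in range(seg_len)]
               for f in bins}
    spectra = []
    for start in range(0, len(x) - seg_len + 1, seg_len):
        seg = [x[start + n] * win[n] for n in range(seg_len)]
        spectra.append({f: sum(s * t for s, t in zip(seg, twiddle[f])) for f in bins})
    return spectra


def psi_raw(zi, zj, lo, hi):
    """Unnormalised PSI (Equation (19)), summed over neighbouring bins (f, f + 1) for lo <= f < hi."""
    k = len(zi)
    bins = range(lo, hi + 1)
    s_ij = {f: sum(a[f] * b[f].conjugate() for a, b in zip(zi, zj)) / k for f in bins}  # Eq. (17)
    s_ii = {f: sum(abs(a[f]) ** 2 for a in zi) / k for f in bins}
    s_jj = {f: sum(abs(b[f]) ** 2 for b in zj) / k for f in bins}
    coh = {f: s_ij[f] / math.sqrt(s_ii[f] * s_jj[f]) for f in bins}                  # Eq. (18)
    return sum(coh[f].conjugate() * coh[f + 1] for f in range(lo, hi)).imag


def psi_normalized(zi, zj, lo, hi):
    """Return (PSI, PSI / std(PSI)); std from a leave-one-segment-out jackknife (Equation (22))."""
    full = psi_raw(zi, zj, lo, hi)
    k = len(zi)
    loo = [psi_raw(zi[:m] + zi[m + 1:], zj[:m] + zj[m + 1:], lo, hi) for m in range(k)]
    mean = sum(loo) / k
    std = math.sqrt((k - 1) / k * sum((v - mean) ** 2 for v in loo))
    return full, full / std


# Usage: channel j is a 2-sample-delayed, noisy copy of channel i, so information flows i -> j.
random.seed(1)
seg_len, n_seg, delay = 64, 40, 2
xi = [random.gauss(0, 1) for _ in range(seg_len * n_seg)]
xj = [(xi[n - delay] if n >= delay else 0.0) + 0.5 * random.gauss(0, 1) for n in range(len(xi))]
bins = range(1, 21)
zi, zj = segment_spectra(xi, seg_len, bins), segment_spectra(xj, seg_len, bins)
psi_ij, psi_ij_norm = psi_normalized(zi, zj, 1, 20)
psi_ji, _ = psi_normalized(zj, zi, 1, 20)
print(psi_ij > 0, psi_ij_norm, psi_ij + psi_ji)  # positive flow i -> j; antisymmetric
```

The antisymmetry $\tilde{\Psi}_{ij} = -\tilde{\Psi}_{ji}$ follows from $C_{ji}(f) = C_{ij}^{*}(f)$.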

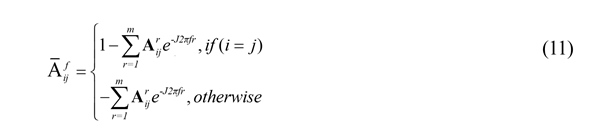

In this study, $F_p$ denotes the EEG frequency bands, implemented as a filter bank of 4-Hz-wide bands.

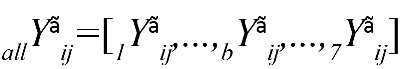

As shown in Figure 4, the [4-32] Hz frequency band was divided into seven parts as follows:

[4-8]|[8-12]|[12-16]|[16-20]|[20-24]|[24-28]|[28-32] Hz.

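The PSI is computed for each part by restricting $F_p$ in Equation (19) to that band. For part $b$, this gives ${}^{b}\tilde{\Psi}_{ij}$, where ${}^{b}\tilde{\Psi}_{ij} = -{}^{b}\tilde{\Psi}_{ji}$. Here ${}^{b}\tilde{\Psi}_{ij}$ denotes the information flow from channel $i$ to channel $j$ in the frequency band of part $b$.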
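The band partition above maps onto DFT bins through the frequency resolution $\delta f = F_s / L$, where L is the segment length. The sketch below builds the seven 4-Hz bands and lists the bins of one band. Two choices are assumptions: the 2-s segment length (500 samples at $F_s$ = 250 Hz), and including both band edges, so that neighbouring bands share their boundary bin. Each band's bin list could then serve as $F_p$ in Equation (19).

```python
def band_edges(lo_hz=4.0, hi_hz=32.0, width_hz=4.0):
    """Split [lo_hz, hi_hz] into consecutive bands of width_hz: [4-8], [8-12], ..., [28-32] Hz."""
    edges = []
    start = lo_hz
    while start + width_hz <= hi_hz + 1e-9:
        edges.append((start, start + width_hz))
        start += width_hz
    return edges


def band_bins(band, fs, seg_len):
    """DFT bin indices f whose frequencies f * fs / seg_len lie inside the band (edges included)."""
    lo, hi = band
    df = fs / seg_len  # frequency resolution (delta f in Equation (19))
    return [f for f in range(seg_len // 2 + 1) if lo - 1e-9 <= f * df <= hi + 1e-9]


bands = band_edges()
print(len(bands), bands[0], bands[-1])  # 7 (4.0, 8.0) (28.0, 32.0)
# Assumed example: Fs = 250 Hz and 2-s segments (500 samples) give delta f = 0.5 Hz.
b0 = band_bins(bands[0], fs=250.0, seg_len=500)
print(b0[0], b0[-1])  # 8 16
```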



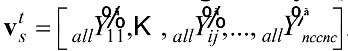

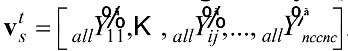

In addition, the PSI is computed for all frequency bands and extracted for all channels, and the resulting values form the PSI feature vector.

After the feature vectors are extracted, some features may be irrelevant or redundant and may reduce the accuracy of the classifiers. A subset of significant features should therefore be selected. Feature ranking was performed using class separability criteria (Guyon & Elisseeff, 2003; Reyes-Aldasoro & Bhalerao, 2006), and the most significant features were then labeled and classified.
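The exact class separability criterion is not spelled out here, so the following shows only one possible instance. It computes a per-feature Bhattacharyya distance between the two classes, assuming each feature is Gaussian within each class: $D_B = \frac{(\mu_1-\mu_2)^2}{4(\sigma_1^2+\sigma_2^2)} + \frac{1}{2}\ln\frac{\sigma_1^2+\sigma_2^2}{2\sigma_1\sigma_2}$. Features are ranked by decreasing distance. The function names and the toy feature values are hypothetical.

```python
import math


def bhattacharyya_1d(a, b):
    """Bhattacharyya distance between two classes for one feature, assuming Gaussian class densities."""
    def mean_var(v):
        m = sum(v) / len(v)
        return m, sum((x - m) ** 2 for x in v) / (len(v) - 1)

    m1, v1 = mean_var(a)
    m2, v2 = mean_var(b)
    return (0.25 * (m1 - m2) ** 2 / (v1 + v2)
            + 0.5 * math.log((v1 + v2) / (2 * math.sqrt(v1 * v2))))


def rank_features(features, labels):
    """Return (feature indices from most to least separable, scores); labels are 1 or 2."""
    n_feat = len(features[0])
    scores = []
    for k in range(n_feat):
        c1 = [row[k] for row, y in zip(features, labels) if y == 1]
        c2 = [row[k] for row, y in zip(features, labels) if y == 2]
        scores.append(bhattacharyya_1d(c1, c2))
    return sorted(range(n_feat), key=lambda k: scores[k], reverse=True), scores


# Usage: feature 1 separates the classes, feature 0 does not (hypothetical values).
features = [[0.10, 1.0], [0.20, 1.2], [0.15, 0.9],
            [0.12, 3.1], [0.18, 2.9], [0.14, 3.0]]
labels = [1, 1, 1, 2, 2, 2]
order, scores = rank_features(features, labels)
print(order)  # [1, 0]
```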

As shown in Figure 5, the valence and arousal levels are classified separately. The boundaries between classes are determined from the subjects’ answers to the SAM questionnaire. Each SAM answer is a two-dimensional label: one dimension denotes the valence level and the other the arousal level. The SAM answers have an uncertainty of one level, for instance around the neutral level (Valence = 5). Based on the SAM answers shown in Figure 6, emotion categories are formed using Valence ≤ 3 (negative) or Valence ≥ 7 (positive), and Arousal ≤ 3 (calm) or Arousal ≥ 7 (excited). Binary labeling is therefore used for valence and arousal level detection. For example, subjects with Valence ≤ 3 or Valence ≥ 7 form the valence groups: subjects with Valence ≤ 3 are assigned to class 1 and subjects with Valence ≥ 7 to class 2. The same process was used to label the arousal classes. The binary classes were therefore configured as given by Equation (23).

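$$c_v = \begin{cases} 1, & \text{Valence} \le 3 \\ 2, & \text{Valence} \ge 7 \end{cases} \qquad c_a = \begin{cases} 1, & \text{Arousal} \le 3 \\ 2, & \text{Arousal} \ge 7 \end{cases} \tag{23}$$

where $c_v$ denotes the valence group labels, with one label for each subject in this category. Similarly, $c_a$ denotes the arousal group labels, with one label for each subject in that category.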
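The labeling rule behind Equation (23) amounts to simple thresholding of the SAM ratings. The sketch below assumes a 1 to 9 rating scale and uses a hypothetical helper `sam_label`. Ratings between the two thresholds are excluded from the binary classes.

```python
def sam_label(score, low=3, high=7):
    """Binary label from a SAM rating: 1 if score <= low, 2 if score >= high, else None (excluded)."""
    if score <= low:
        return 1
    if score >= high:
        return 2
    return None


# Usage with hypothetical valence ratings; neutral ratings (4 to 6) are dropped.
valence = [2, 5, 8, 3, 7, 6]
labelled = [(idx, sam_label(v)) for idx, v in enumerate(valence) if sam_label(v) is not None]
print(labelled)  # [(0, 1), (2, 2), (3, 1), (4, 2)]
```

The same function applies unchanged to arousal ratings (class 1 = calm, class 2 = excited).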

The extracted features were labeled using $c_v$ for valence detection and using $c_a$ for arousal detection. The labeled inputs for the valence classifier and the arousal classifier are defined by Equation (24).


In Equation (24), t denotes the matrix transpose; the equation also defines the number of output features.

2.5. Classification

A wide range of classifiers can be applied to derive the affective states. Since four classes of emotions are studied, various multiclass classification schemes can be used, such as all-together, one-against-one, one-versus-rest and hierarchical models. In this study, the model shown in Figure 5 was applied.

The model consists of two binary classifiers of the same type. One is trained to discriminate the valence level (positive vs. negative) and the other the arousal level (calm vs. excited). The four-class problem is thus decomposed into two nested binary classifications, one at the arousal-specific level and one at the valence-specific level. Two types of classifiers were applied within this model to the extracted features: K-Nearest Neighbor (KNN) and Support Vector Machine (SVM). The KNN classifier is a supervised learning algorithm that categorizes objects based on the closest training examples in the feature space (Filipek et al., 1999). The SVM classifier, in contrast, finds a separating hyperplane with the maximal margin between two classes of data (Shawe-Taylor & Cristianini, 2000). In this study, the features were mapped into the kernel space using a Gaussian radial basis function (RBF). The RBF kernel sigma was set to 3.5 for arousal detection and 6 for valence detection.
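The leave-one-out evaluation used for the classifiers can be sketched with a plain KNN classifier, together with the Gaussian RBF kernel on which an RBF-kernel SVM relies. This is a minimal sketch, not the study's MATLAB setup. Several details are assumptions: k = 3, Euclidean distance, the kernel-width convention $\exp(-\lVert u - v \rVert^2 / (2\sigma^2))$, the function names and the toy data. Fitting the SVM itself is beyond this example.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k):
    """Majority vote among the k training examples closest (Euclidean) to the query."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(y for _, y in nearest).most_common(1)[0][0]


def leave_one_out_accuracy(xs, ys, k=3):
    """Train on all samples but one, test on the held-out sample, and repeat for every sample."""
    correct = 0
    for m in range(len(xs)):
        pred = knn_predict(xs[:m] + xs[m + 1:], ys[:m] + ys[m + 1:], xs[m], k)
        correct += pred == ys[m]
    return correct / len(xs)


def rbf_kernel(u, v, sigma):
    """Gaussian RBF kernel exp(-||u - v||^2 / (2 sigma^2)); this width convention is an assumption."""
    return math.exp(-math.dist(u, v) ** 2 / (2 * sigma ** 2))


# Usage with two well-separated hypothetical classes.
xs = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]]
ys = [1, 1, 1, 2, 2, 2]
print(leave_one_out_accuracy(xs, ys, k=3))  # 1.0
print(rbf_kernel(xs[0], xs[1], sigma=3.5))  # similarity of two neighbouring points
```

A larger sigma makes the kernel decay more slowly with distance, which smooths the SVM decision boundary.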

3. Results

Four classes of emotions were investigated: negative and positive emotions from the valence dimension, and calm and highly excited emotions from the arousal dimension. The classification accuracies obtained with the three features and two classifiers are shown in Figure 7 and Figure 8. The KNN and SVM results were obtained using the leave-one-out method. All programming was done in MATLAB, and the “BioSig” toolbox was used to extract the connectivity features (Schlögl & Brunner, 2008).

Among the three applied methods, the PSI features yield the best classification accuracy. The results show a significant improvement in classification accuracy when the effect of the internal factor (long-term stress) is removed by categorizing the subjects into stressed and stress-free groups. These results indicate that chronic stress can change the patterns of connectivity between brain regions (wiring structures) during the perception of external stimuli. To further characterize the connectivity between brain regions, the average flux of each EEG channel was evaluated using the PSI. The PSI of 2-s EEG segments was summed over a duration of 50 s for each

2.5. Classification

A wide range of classifiers can be applied to derive the affective states. Since four classes of emotions are studied, various multiclass classification schemes can be used such as all-together, one-against-one, one versus rest, and hierarchical model. In this study, the Figure 5 model was applied.

The model is constructed from two binary classifiers of the same type: one is trained to discriminate valence level (positive vs. negative) and the other to discriminate arousal level (calm vs. excited). In this way, the four-class classification is decomposed into two-level nested binary classifiers based on an arousal-specific level and a valence-specific level. Two types of classifiers were applied to the extracted features within this scheme: (1) K-Nearest Neighbor (KNN) and (2) Support Vector Machine (SVM). The KNN classifier is a supervised learning algorithm that categorizes objects based on the closest training examples in the feature space (Filipek et al., 1999). In contrast, the SVM classifier finds the separating hyperplane with the maximal margin between two classes of data (Shawe-Taylor & Cristianini, 2000). In this study, the features were mapped into the kernel space using a Gaussian radial basis function (RBF), with the sigma of the RBF kernel set to 3.5 for arousal detection and 6 for valence detection.
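The two-classifier scheme can be illustrated with a short Python sketch. It is not the author's MATLAB code. It reads the scheme as two independent binary decisions (valence and arousal) whose outputs are combined into one of four labels, uses a plain Euclidean KNN for both, and shows how the quoted sigma values would enter an SVM through a Gaussian RBF kernel, assuming the common parameterization exp(-||u - v||^2 / (2 sigma^2)). The function names, label strings, toy features, and k = 3 are illustrative assumptions; training the SVM itself is left to a library.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, x, k=3):
    """Label x by majority vote among its k nearest training samples (Euclidean distance)."""
    ranked = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))
    votes = Counter(train_y[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]


def classify_emotion(train_x, valence_y, arousal_y, x, k=3):
    """Combine a binary valence decision and a binary arousal decision into one of four classes."""
    valence = knn_predict(train_x, valence_y, x, k)  # "positive" or "negative"
    arousal = knn_predict(train_x, arousal_y, x, k)  # "calm" or "excited"
    return f"{valence}/{arousal}"


def rbf_kernel(u, v, sigma):
    """Gaussian RBF kernel exp(-||u - v||^2 / (2 sigma^2)); assumed parameterization."""
    return math.exp(-math.dist(u, v) ** 2 / (2.0 * sigma ** 2))


# Sigma values quoted in the text for the two binary SVMs.
SIGMA = {"arousal": 3.5, "valence": 6.0}

# Hypothetical 2-D features: the first axis tracks valence, the second tracks arousal.
train_x = [(1, 1), (2, 2), (1.5, 1.2), (-1, 1), (-2, 2), (-1.5, 1.2),
           (-1, -1), (-2, -2), (-1.5, -1.2), (1, -1), (2, -2), (1.5, -1.2)]
valence_y = ["positive" if p[0] > 0 else "negative" for p in train_x]
arousal_y = ["excited" if p[1] > 0 else "calm" for p in train_x]

print(classify_emotion(train_x, valence_y, arousal_y, (1.2, 1.1)))  # positive/excited
print(rbf_kernel((0, 0), (3, 4), SIGMA["valence"]))
```

A strictly nested variant would first decide the arousal level and then apply a valence classifier trained only on samples of that arousal level; the text leaves this detail open. Toolboxes also differ in how the RBF width is specified (for example, a gamma of 1 / (2 sigma^2) instead of sigma), so the sigma values only transfer under the same parameterization.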

3. Results

Four classes of emotions were investigated: negative and positive emotions on the valence dimension, and calm and highly excited emotions on the arousal dimension. The classification accuracies obtained with three different features and two classifiers are shown in Figure 7 and Figure 8. The results of the KNN and SVM classifiers are based on the leave-one-out method. Programming was done in MATLAB, and the "BioSig" toolbox was used to extract the connectivity features (Schlögl & Brunner, 2008).
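The leave-one-out method can be sketched as follows. This assumes that each held-out unit is a single feature vector; the text does not say whether trials or whole subjects were held out, and a leave-one-subject-out variant would drop all of one subject's vectors at once. A 1-nearest-neighbor rule serves as a stand-in classifier, and any function with the same hypothetical `predict(train_x, train_y, x)` signature, such as an SVM wrapper, could be passed instead.

```python
import math


def nearest_neighbor(train_x, train_y, x):
    """1-NN stand-in classifier: return the label of the closest training vector."""
    best = min(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))
    return train_y[best]


def leave_one_out_accuracy(xs, ys, predict=nearest_neighbor):
    """Hold out each sample once, train on the rest, and return the fraction classified correctly."""
    correct = 0
    for i in range(len(xs)):
        train_x = list(xs[:i]) + list(xs[i + 1:])
        train_y = list(ys[:i]) + list(ys[i + 1:])
        if predict(train_x, train_y, xs[i]) == ys[i]:
            correct += 1
    return correct / len(xs)


# Two well-separated hypothetical groups: every held-out point is recovered.
features = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
labels = ["calm", "calm", "excited", "excited"]
print(leave_one_out_accuracy(features, labels))  # 1.0
```

Because every sample is tested exactly once on a model that never saw it, the procedure uses all data for both training and testing, at the cost of fitting one model per sample.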

Among the three applied methods, the PSI feature yields the best classification accuracy. The results show a significant improvement in classification accuracy () when the effect of the internal factor is removed by categorizing the subjects into stressed () and stress-free () groups. These results indicate that the connectivity patterns among brain regions (wiring structures) during the perception of external stimuli can be changed by chronic stress. To clarify the concept of connectivity between brain regions, the flux average of each EEG channel was evaluated using PSI: for each channel, the PSI of 2 s EEG segments was summed over a duration of 50 s. The patterns of the average PSI for all channels in positive and negative emotions are shown in Figure 9 and Figure 10, respectively.

Table 1. P-values of the t test between stressed and stress-free subjects in positive and negative emotional states
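The phase slope index follows Nolte et al. (2008): for channels i and j, PSI_ij = Im( sum over f of C_ij*(f) C_ij(f + df) ), where C_ij(f) = S_ij(f) / sqrt(S_ii(f) S_jj(f)) is the complex coherency, S_ij is the cross-spectrum, and the sum runs over adjacent frequency bins of the band of interest. The Python sketch below computes this unnormalized quantity from two signals using Hann-windowed, non-overlapping segments and a naive DFT. It is not the BioSig implementation used in the study; the segment length, window, frequency bins, and the per-channel `net_flux` summary (the sum of a channel's PSI towards all other channels) are assumptions. Nolte et al. additionally divide by a jackknife estimate of the standard deviation, which is omitted here. With the convention S_xy = X conj(Y) used in the code, a positive value indicates that x leads y.

```python
import cmath
import math
import random


def _dft(seg):
    """Naive DFT (O(n^2)) returning bins 0..n/2; adequate for a short illustration."""
    n = len(seg)
    return [sum(seg[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n // 2 + 1)]


def cross_spectra(x, y, seg_len):
    """Accumulate Sxx, Syy and Sxy = X * conj(Y) over non-overlapping Hann-windowed segments."""
    w = [0.5 - 0.5 * math.cos(2 * math.pi * t / (seg_len - 1)) for t in range(seg_len)]
    n_bins = seg_len // 2 + 1
    sxx, syy, sxy = [0.0] * n_bins, [0.0] * n_bins, [0j] * n_bins
    for start in range(0, len(x) - seg_len + 1, seg_len):
        fx = _dft([w[t] * x[start + t] for t in range(seg_len)])
        fy = _dft([w[t] * y[start + t] for t in range(seg_len)])
        for f in range(n_bins):
            sxx[f] += abs(fx[f]) ** 2
            syy[f] += abs(fy[f]) ** 2
            sxy[f] += fx[f] * fy[f].conjugate()
    # Sums rather than means: the segment count cancels in the coherency.
    return sxx, syy, sxy


def phase_slope_index(x, y, seg_len, bins):
    """Unnormalized PSI between x and y over consecutive frequency bins."""
    sxx, syy, sxy = cross_spectra(x, y, seg_len)
    coh = [sxy[f] / math.sqrt(sxx[f] * syy[f]) for f in bins]
    return sum((coh[i].conjugate() * coh[i + 1]).imag for i in range(len(coh) - 1))


def net_flux(channels, seg_len, bins):
    """Hypothetical per-channel summary: sum of PSI from each channel to every other channel."""
    return [sum(phase_slope_index(channels[i], channels[j], seg_len, bins)
                for j in range(len(channels)) if j != i)
            for i in range(len(channels))]


# Synthetic check: y is x delayed by two samples, so x should lead y.
random.seed(0)
x = [random.gauss(0.0, 1.0) for _ in range(512)]
y = [0.0, 0.0] + x[:-2]
print(net_flux([x, y], seg_len=32, bins=range(1, 16)))  # first value > 0, second < 0
```

PSI is antisymmetric (PSI_ij = -PSI_ji), so summing it over channel pairs gives a net outflow per channel. Summing such values over the consecutive 2 s segments of a 50 s recording, as described above, would be a further loop over segments.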

Statistical analysis of the average PSI using t tests shows significant changes on C3, C4, F4, P4, and T8 for positive emotions and on C3, F4, P3, P4, and T8 for negative emotions. A two-tailed unpaired t test assuming unequal variances for the two groups (Welch's t test) was computed. Table 1 shows the resulting P values. The P values show that changes in average PSI on C4 dominate during positive emotions, whereas changes on channel P3 dominate during negative emotions.
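The two-tailed unpaired t test with unequal variances can be written out in standard-library Python. The sketch computes the Welch statistic, the Welch-Satterthwaite degrees of freedom, and the two-tailed P value through the regularized incomplete beta function, evaluated with the continued-fraction method familiar from Numerical Recipes. It is an illustration rather than the routine used in the study, and the sample values are hypothetical; in practice, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test.

```python
import math
from statistics import mean, variance


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for this m.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_tailed_p(t, df):
    """Two-tailed P value of Student's t: I_{df/(df+t^2)}(df/2, 1/2)."""
    return _betai(df / 2.0, 0.5, df / (df + t * t))


def welch_t_test(a, b):
    """Return (t, df, two-tailed P) for two independent samples with unequal variances."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df, t_two_tailed_p(t, df)


# Hypothetical average-PSI values for one channel in the two groups.
stressed = [1, 2, 3, 4, 5]
stress_free = [2, 4, 6, 8, 10]
print(welch_t_test(stressed, stress_free))
```

Unlike Student's pooled t test, this version does not assume equal group variances, which matches the unequal-variance setting described above; the degrees of freedom are therefore usually non-integer.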

The data presented in Figure 9 and Figure 10 do not take into account the arousal levels or their effect on the valence levels. The same comparison was done for the resting state (eyes-closed condition). Figure 11 shows the averaged PSI for 5 minutes of EEG signals. Table 2 shows the P values of the effective channels obtained with an unpaired t test.

The results shown in Figure 11 indicate that the connectivity features of resting-state EEG could potentially be used to detect chronic stress. To investigate this idea, the subjects were classified into stressed and stress-free groups based on these features. The classification accuracy of the system for stress level detection using the SVM and the KNN is shown in Figure 12.

The results shown in Figures 7, 8, and 12 emphasize that, in both the resting state and emotional states, the patterns of connectivity among brain regions change with stress level. Note that this study was limited to adults aged 18 to 30 years, so these results cannot be generalized to younger or older populations. In addition, EEG activity below 4 Hz or above 32 Hz was not evaluated. The results are based on 8 brain regions, and their precision may change if more or fewer EEG electrodes are used.

4. Discussion

Chronic stress triggers the release of a series of chemical compounds and reactions in our bodies. Therefore, stress as an internal stimulus can potentially influence responses to external stimuli. In this study, changes in brain activity in response to external emotional stimuli were investigated while taking the subject's stress level into account. The effective connectivity between eight brain regions, estimated from the EEG signals, was correlated with four emotional states. The classification accuracy of the emotion recognition system improves when the subjects are separated into two stress levels. The effect of stress on the connectivity pattern of brain regions was also tested in the resting state. The results show that stress can change connectivity patterns among brain regions both in the resting state and during the perception of emotional stimuli. However, the impact of stress on the pattern of connectivity is subject-dependent.

Acknowledgments

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors. The author would like to thank Mr. Michel Heijnen for his assistance in data collection and Dr. Björn Crüts for his advice on the EEG protocol.

Conflict of Interest

The author declared no conflicts of interest.

References

Aftanas, L. I., Varlamov, A. A., Pavlov, S. V., Makhnev, V. P., & Reva, N. V. (2001). Affective picture processing: event-related synchronization within individually defined human theta band is modulated by valence dimension. Neuroscience Letters, 303(2), 115-8. doi: 10.1016/s0304-3940(01)01703-7

Arnsten, A. F. T. (2009). Stress signalling pathways that impair prefrontal cortex structure and function. Nature Reviews Neuroscience, 10(6), 410-22. doi: 10.1038/nrn2648

Baccalá, L. A., Sameshima, K., & Takahashi, D. Y. (2007). Generalized partial directed coherence. Paper presented at the 15th International Conference on Digital Signal Processing, Cardiff, UK, 1-4 July 2007.

Baumgartner, T., Esslen, M., & Jäncke, L. (2006). From emotion perception to emotion experience: Emotions evoked by pictures and classical music. International Journal of Psychophysiology, 60(1), 34-43. doi: 10.1016/j.ijpsycho.2005.04.007

Bradley, M., & Lang, P. J. (1999). International affective digitized sounds (IADS): Stimuli, instruction manual and affective ratings. Gainesville, FL: NIMH Center for the Study of Emotion and Attention.

Coan, J. A., & Allen, J. J. B. (2004). Frontal EEG asymmetry as a moderator and mediator of emotion. Biological Psychology, 67(1-2), 7-50. doi: 10.1016/j.biopsycho.2004.03.002

Cohen, S., Kamarck, T., & Mermelstein, R. (1983). A global measure of perceived stress. Journal of Health and Social Behavior, 24(4), 385. doi: 10.2307/2136404

Cowie, R., & Cornelius, R. R. (2003). Describing the emotional states that are expressed in speech. Speech Communication, 40(1-2), 5-32. doi: 10.1016/s0167-6393(02)00071-7

Craig, A. D. (2003). Interoception: The sense of the physiological condition of the body. Current Opinion in Neurobiology, 13(4), 500-505. doi: 10.1016/s0959-4388(03)00090-4

Ekman, P. (1994). Moods, emotions and traits. In P. Ekman & R. J. Davidson (Eds.), From The Nature of Emotion: Fundamental Questions (Vol. 4, pp. 512). New York: Oxford University Press, USA.

Ekman, P. (1999). Basic emotions. In T. Dalgleish & M. Power (Eds.), Handbook of Cognition and Emotion (pp. 45-60). Hoboken, New Jersey: John Wiley & Sons Ltd.

Farquharson, R. F. (1942). The Hypothalamus and central levels of autonomic function. American Journal of Psychiatry, 98(4), 625. doi: 10.1176/appi.ajp.98.4.625

Filipek, P. A., Accardo, P. J., Baranek, G. T., Cook, E. H. J., Dawson, G., Gordon, B., et al. (1999). The screening and diagnosis of autistic spectrum disorders. Journal of Autism and Developmental Disorders, 29(6), 439-484. doi: 10.1023/a:1021943802493

Frantzidis, C. A., Bratsas, C., Klados, M. A., Konstantinidis, E., Lithari, C. D., Vivas, A. B. et al. (2010). On the classification of emotional biosignals evoked while viewing affective pictures: an integrated data-mining-based approach for healthcare applications. IEEE Transactions on Information Technology in Biomedicine, 14(2), 309-18. doi: 10.1109/titb.2009.2038481

Geweke, J. (1982). The Measurement of linear dependence and feedback between multiple time series. Journal of the American Statistical Association, 77, 304-24. doi: 10.2307/2287238

Gross, J. J., & Levenson, R. W. (1995). Emotion elicitation using films. Cognition & Emotion, 9(1), 87-108. doi: 10.1080/02699939508408966

Guyon, I., & Elisseeff, A. (2003). An introduction to variable and feature selection. Journal of Machine Learning Research, 3, 1157-82.

Kaminski, M., & Blinowska, K. (1991). A new method of the description of the information flow in the brain structures. Biological Cybernetics, 65(3), 203-210. doi: 10.1007/bf00198091

Kemp, A. H., Gray, M. A., Eide, P., Silberstein, R. B., & Nathan, P. J. (2002). Steady-state visually evoked potential topography during processing of emotional valence in healthy subjects. NeuroImage, 17(4), 1684-92. doi: 10.1006/nimg.2002.1298

Khosrowabadi, R., Quek, H. C., Wahab, A., & Ang, K. K. (2010). EEG-based emotion recognition using self-organizing map for boundary detection. Paper presented at the 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23-26 August 2010.

Kober, H., Barrett, L. F., Joseph, J., Bliss-Moreau, E., Lindquist, K., & Wager, T. D. (2008). Functional grouping and cortical-subcortical interactions in emotion: A meta-analysis of neuroimaging studies. NeuroImage, 42(2), 998-1031. doi: 10.1016/j.neuroimage.2008.03.059

Korzeniewska, A., Manczak, M., Kaminski, M., Blinowska, K. J., & Kasicki, S. (2003). Determination of information flow direction among brain structures by a modified directed transfer function (dDTF) method. Journal of Neuroscience Methods, 125(1-2), 195-207. doi: 10.1016/s0165-0270(03)00052-9

Kulish, V. V., Sourin, A. I., & Sourina, O. (2007). Fractal spectra and visualization of the brain activity evoked by olfactory stimuli. Paper presented at the 9th Asian Symposium on Visualization, Hong Kong, China, 4-9 June 2007.

Lang, P. J. (1980). Behavioral treatment and bio-behavioral assessment: Computer applications. In J. Sidowski, J. Johnson & T. Williams (Eds.), Technology in Mental Health Care Delivery Systems (pp. 119-137). Norwood, NJ: Ablex Pub.

Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (2005). International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Gainesville, FL: University of Florida.

Lewis, M. D., & Todd, R. M. (2007). The self-regulating brain: Cortical-subcortical feedback and the development of intelligent action. Cognitive Development, 22(4), 406-430. doi: 10.1016/j.cogdev.2007.08.004

Lundberg, U. (2008). Stress and (public) health. In K. Heggenhougen (Ed.), International Encyclopedia of Public Health (pp. 241-250). Oxford: Academic Press.

Nolte, G., Ziehe, A., Nikulin, V. V., Schlögl, A., Krämer, N., Brismar, T. et al. (2008). Robustly Estimating the flow direction of information in complex physical systems. Physical Review Letters, 100(23). doi: 10.1103/physrevlett.100.234101

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9(1), 97-113. doi: 10.1016/0028-3932(71)90067-4

Olofsson, J. K., Nordin, S., Sequeira, H., & Polich, J. (2008). Affective picture processing: An integrative review of ERP findings. Biological Psychology, 77(3), 247-65. doi: 10.1016/j.biopsycho.2007.11.006

Ortony, A., & Turner, T. J. (1990). What’s basic about basic emotions? Psychological Review, 97(3), 315-31. doi: 10.1037//0033-295x.97.3.315

Petrantonakis, P. C., & Hadjileontiadis, L. J. (2010). Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Transactions on Affective Computing, 1(2), 81-97. doi: 10.1109/t-affc.2010.7