Volume 13, Issue 3 (May & Jun 2022)

BCN 2022, 13(3): 285-294

Goshvarpour A, Goshvarpour A, Abbasi A. A Predictive Model for Emotion Recognition Based on Individual Characteristics and Autonomic Changes. BCN 2022; 13(3): 285-294

URL: http://bcn.iums.ac.ir/article-1-745-en.html

A Predictive Model for Emotion Recognition Based on Individual Characteristics and Autonomic Changes

1- Department of Biomedical Engineering, Faculty of Electrical Engineering, Imam Reza International University, Mashhad, Razavi Khorasan, Iran.

2- Department of Biomedical Engineering, Faculty of Electrical Engineering, Sahand University of Technology, Tabriz, Iran.

1. Introduction

Emotions play a crucial role in health, social relationships, and daily functions. Given this importance, emotion recognition from physiological parameters (including Galvanic Skin Response (GSR), respiration, Electrocardiogram (ECG), blood pressure, and Electroencephalogram (EEG)) has attracted many researchers in the field of affective computing (Frantzidis et al., 2010; Goshvarpour et al., 2015; Nardelli et al., 2015; Valenza et al., 2014). Among these, Autonomic Nervous System (ANS) activity is a fundamental component of many recent theories of emotion. Of all autonomic measures, Heart Rate (HR), together with Heart Rate Variability (HRV), is the most often reported (Kreibig, 2010). Numerous approaches, such as standard features and nonlinear indices, have been used in the literature to analyze the HRV signal quantitatively. However, the main focus has been on simple standard features (Chang, Zheng, & Wang, 2010; Choi & Woo, 2005; Greco, Valenza, Lanata, Rota, & Scilingo, 2014; Haag, Goronzy, Schaich, & Williams, 2004; Jang, Park, Park, Kim, & Sohn, 2015; Katsis, Katertsidis, Ganiatsas, & Fotiadis, 2008; Katsis, Katertsidis, & Fotiadis, 2011; Kim, Bang, & Kim, 2004; Li & Chen, 2006; Liu, Conn, Sarkar, & Stone, 2008; Niu, Chen, & Chen, 2011; Picard, Vyzas, & Healey, 2001; Rainville, Bechara, Naqvi, & Damasio, 2006; Rani, Liu, Sarkar, & Vanman, 2006; Yannakakis & Hallam, 2008; Yoo, Lee, Park, Kim, Lee, & Jeong, 2005; Zhai & Barreto, 2006).

Also, different HRV patterns have been reported in the context of different emotion-related autonomic responses (Kreibig, 2010). Many studies have analyzed these differences in the physiological mechanisms of emotional reactions as a function of individual variables such as age, gender, and linguality, as well as other factors like sleep duration (Bayrami et al., 2012; Chen, Liu, Wu, Ding, Dong, & Hirota, 2015; Franzen, Buysse, Dahl, Thompson, & Siegle, 2009; Yoo, Gujar, Peter, Jolesz, & Walker, 2007). The appendix presents a short review of this literature. These studies mainly evaluated one or two parameters separately, and the relations and interactions between these factors in emotional reactions were not considered simultaneously. Several questions therefore remain open: 1) Are the emotional responses of men and women with insufficient sleep the same? 2) If the subject’s age is also considered, how do the emotional responses change? 3) Which factor has the greatest effect on emotion-related autonomic changes? It is assumed here that individual characteristics jointly affect emotional responses. Therefore, these relationships and interactions should be considered in an affect analysis system.

The present study aimed to evaluate simultaneously the effects of age, gender, linguality, and sleep duration on the autonomic responses associated with emotional inductions, and to offer a predictive model for these interactions. The rest of this manuscript is organized as follows. Section 2 describes the materials and methods used in this work. Section 3 reports the experimental results of the proposed procedure. Section 4 discusses the findings, and Section 5 presents the study’s conclusion.

2. Materials and Methods

Data collection

The ECG of 47 college students attending the Sahand University of Technology was collected. All participants were Iranian students. To elicit emotions in the participants, images from the International Affective Picture System (IAPS) were used (Lang, Bradley, & Cuthbert, 2005). Based on the dimensional structure of the emotional space, the IAPS images were chosen to correspond to four classes of emotions (Goshvarpour et al., 2015): relaxation, happiness, sadness, and fear. Upon arrival in the laboratory, all participants were asked to read and sign a consent form to participate in the experiment. All participants reported no history of neurological, cardiovascular, epileptic, or hypertensive disease. The subjects were asked not to consume caffeine or salty or fatty foods for two hours before data recording and to remain still during the experiment, particularly avoiding movements of their fingers, hands, and legs.

The procedure took about 15 minutes, and images were presented after two minutes of rest. During this initial baseline measurement, subjects were instructed to keep their eyes open and watch a blank screen. Then, 28 blocks of pictorial stimuli were shown on the screen in random order to prevent habituation, and the blocks were balanced among subjects. Each block consisted of five pictures from the same emotional class displayed for about 15 s, followed by a 10-s blank screen period. This block design was used to ensure the stability of the emotion over time. The blank screen period allowed physiological fluctuations to return to baseline and assured regularity in the presentation of different emotional images. The blank screen was followed by a white plus sign (for 3 s) to prompt the subjects to concentrate, look at the center of the screen, and prepare for the next block. Subjects were also asked to self-assess their emotional states. Figure 1 demonstrates the protocol description.

[Figure 1. Protocol description]
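The stated timings can be checked against the reported session length of about 15 minutes. The sketch below is only an arithmetic check; it assumes that the 15 s display time covers the whole five-picture block rather than each picture, which the text does not state explicitly.

```python
# Timing taken from the protocol description; reading the 15 s as the duration
# of the whole five-picture block (about 3 s per picture) is an assumption.
REST_S = 2 * 60          # initial baseline rest
N_BLOCKS = 28
PICTURES_S = 15          # five pictures shown per block
BLANK_S = 10             # blank screen after each block
FIXATION_S = 3           # white plus sign before the next block

block_s = PICTURES_S + BLANK_S + FIXATION_S
total_s = REST_S + N_BLOCKS * block_s
total_min = total_s / 60

print(f"one block: {block_s} s, full session: {total_s} s ({total_min:.1f} min)")
# prints: one block: 28 s, full session: 904 s (15.1 min)
```

Under this reading, the total agrees with the reported duration of about 15 minutes.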

All signals were recorded in the Computational Neuroscience Laboratory of the Sahand University of Technology using a 16-channel PowerLab (manufactured by ADInstruments) at a sampling rate of 400 Hz. A digital notch filter was used to remove power-line noise.
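As an illustration of what a digital notch filter does at this sampling rate, the following is a minimal sketch of a second-order IIR notch in plain Python. It is not the filter built into the recording system. The 50 Hz mains frequency, the quality factor `Q`, and the function names are assumptions made for the example; only the 400 Hz sampling rate comes from the text.

```python
import math

def notch_coefficients(f0_hz, fs_hz, q):
    """Second-order IIR notch (standard biquad form), normalised so a[0] == 1."""
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = [1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0]
    a = [1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0]
    return b, a

def apply_biquad(b, a, x):
    """Filter the sequence x with a direct-form I difference equation."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

FS_HZ = 400.0      # sampling rate stated in the text
MAINS_HZ = 50.0    # assumption: the mains frequency is not given in the text
Q = 30.0           # assumption: notch sharpness chosen for illustration

b, a = notch_coefficients(MAINS_HZ, FS_HZ, Q)
t = [n / FS_HZ for n in range(int(3 * FS_HZ))]           # 3 s of samples
hum = [math.sin(2 * math.pi * MAINS_HZ * ti) for ti in t]
slow = [math.sin(2 * math.pi * 5.0 * ti) for ti in t]    # stand-in for ECG-band content

hum_out = apply_biquad(b, a, hum)
slow_out = apply_biquad(b, a, slow)

# Compare amplitudes over the last second, after the filter transient has decayed.
tail = int(FS_HZ)
hum_peak = max(abs(v) for v in hum_out[-tail:])
slow_peak = max(abs(v) for v in slow_out[-tail:])
print(f"50 Hz residual: {hum_peak:.4f}, 5 Hz passed: {slow_peak:.4f}")
```

The 50 Hz component is suppressed once the transient has died out, while the low-frequency component passes almost unchanged.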

The emotional model

A linear relationship between variables is often sufficient to describe the observed data, and it also allows a reasonable prediction of new observations. Previous studies have shown that changes in HRV can serve as a valuable and effective tool to analyze affective states. Consequently, in the current study, the ratio of HRV changes during rest to each emotional state is considered the dependent (or response) variable to model the affective states.

It has been shown (refer to the appendix) that: first, women experience emotions more intensely. Second, older adults apply different emotion regulation strategies and show heightened positive emotions. Third, different brain functions are activated in bilinguals during the presentation of emotional stimuli. Fourth, augmented reactivity to negative emotions has been reported after sleep deprivation. Therefore, stronger emotional weights should be considered for women, older, bilingual, and sleep-deprived participants.

Based on the individual characteristics and the HRV index, the evaluative model for emotion recognition can be expressed as a regression equation. The evaluation index of affective autonomic changes is then calculated by Equation 1.

$$ f = a x_1 + b x_2 + c x_3 + d x_4 + e \qquad (1) $$

In this equation, x1 represents gender (G): 1 for men and 2 for women. x2 is the subject’s age (A) range: 1 for subjects aged 19-22 years and 2 for subjects aged 22-25 years. x3 carries the linguality (L) information, coded 1 if the subject is monolingual and 2 if the subject is bilingual. Sleep (S) is coded by x4: 1 for subjects with normal sleep and 2 for sleep-deprived subjects. The ratio of HRV changes during rest to each affective state is captured by f. Therefore, f1, f2, f3, and f4 are formed for the happiness, relaxation, sadness, and fear affective states, respectively.
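The coding scheme and Equation 1 can be sketched in a few lines of Python. The function names, the treatment of age 22 (the two stated ranges overlap), and the coefficient values are hypothetical and serve only to show how one subject is encoded and how f is evaluated; they are not the fitted values of the study.

```python
def encode_subject(gender, age_years, bilingual, sleep_deprived):
    """Return (x1, x2, x3, x4) using the 1/2 coding described in the text."""
    if gender not in ("male", "female"):
        raise ValueError("gender must be 'male' or 'female'")
    x1 = 1 if gender == "male" else 2
    # The text gives overlapping ranges (19-22 and 22-25); placing age 22 in
    # the younger group is an assumption made here.
    x2 = 1 if age_years <= 22 else 2
    x3 = 2 if bilingual else 1
    x4 = 2 if sleep_deprived else 1
    return (x1, x2, x3, x4)

def predict_ratio(x, coeffs):
    """Evaluate f = a*x1 + b*x2 + c*x3 + d*x4 + e (Equation 1)."""
    a, b, c, d, e = coeffs
    x1, x2, x3, x4 = x
    return a * x1 + b * x2 + c * x3 + d * x4 + e

# Hypothetical coefficients for illustration, not the fitted values from the study.
demo_coeffs = (0.10, -0.05, 0.02, 0.08, 0.90)
x = encode_subject("female", 24, bilingual=True, sleep_deprived=False)
f_demo = predict_ratio(x, demo_coeffs)
print(x, round(f_demo, 3))
# prints: (2, 2, 2, 1) 1.12
```

In the study, one such set of coefficients would be fitted per affective state, giving f1 to f4.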

3. Results

Eighty percent of the recorded data were randomly selected to calculate the coefficients a, b, c, d, and e by a linear fit of the HRV data, and the remaining 20% were used for testing. Different combinations of individual characteristics were also considered in the model by setting the corresponding coefficients to zero. For instance, to evaluate the role of gender and age (GA) in the model, the coefficients c and d are set to zero. As a result, the following conditions are considered in the model evaluation (Equation 2):

2 .

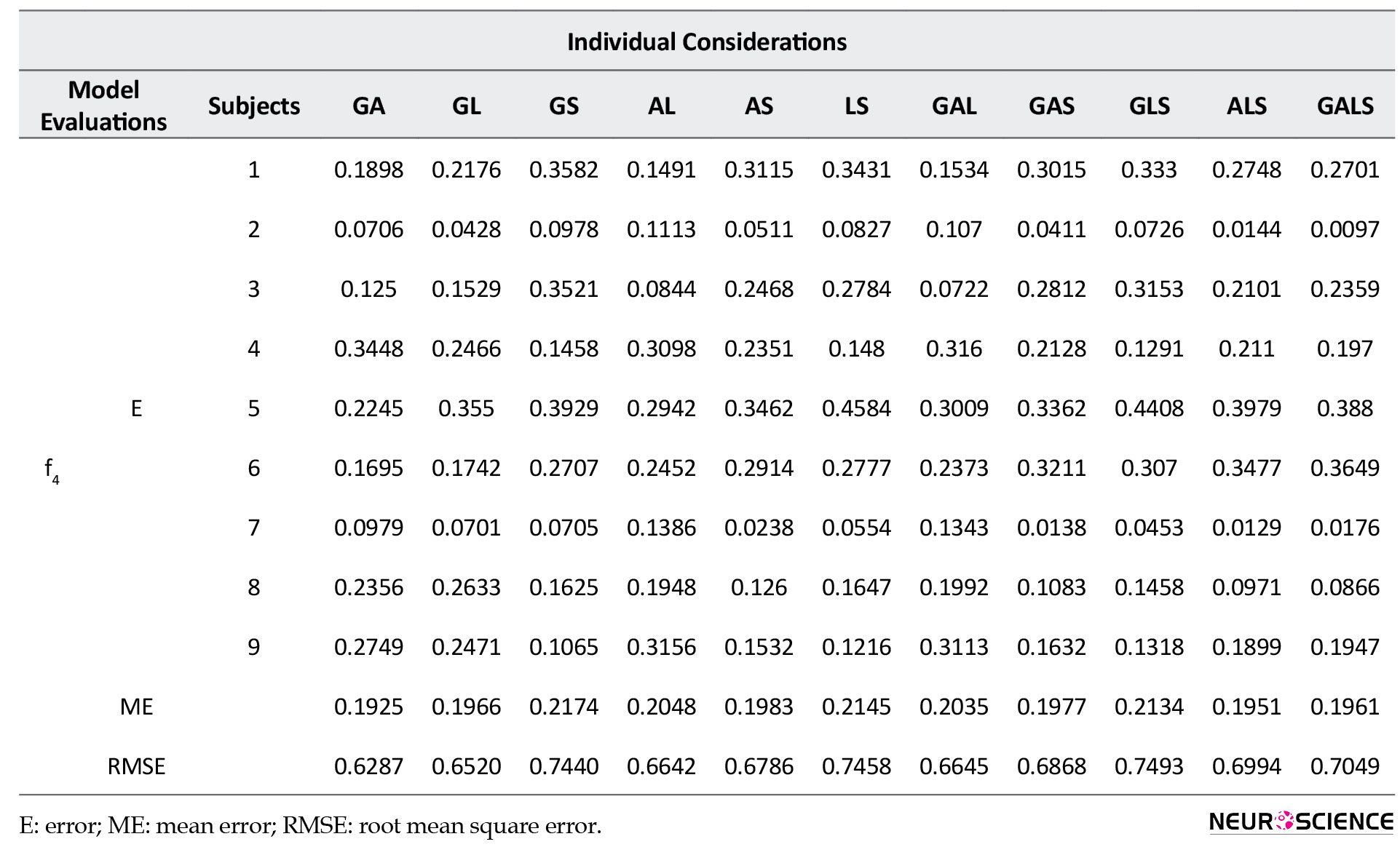

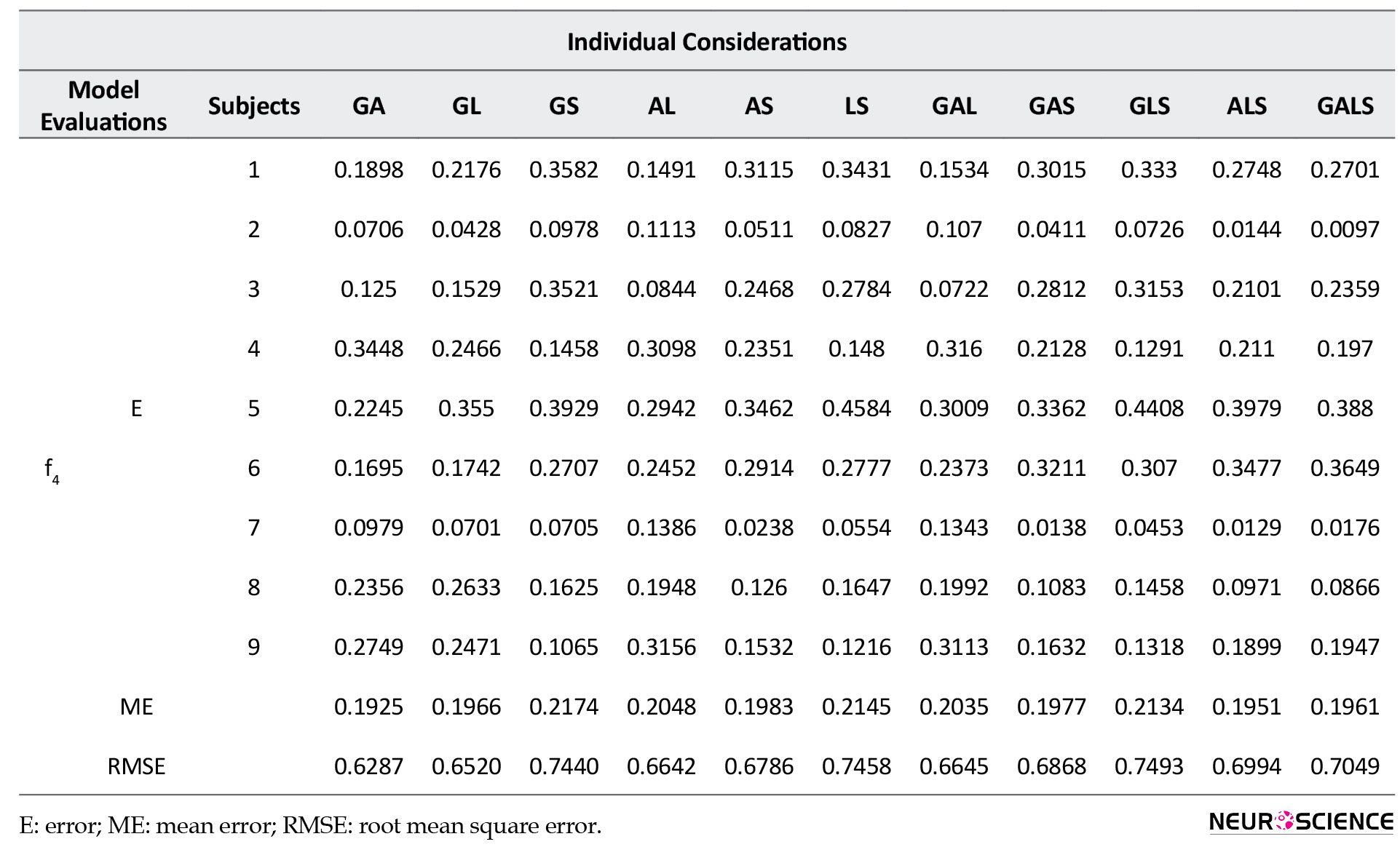
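The fitting procedure described above (random 80/20 split, linear fit, coefficients of unused characteristics fixed at zero) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the data are synthetic, the helper names `solve_linear` and `fit_subset` are hypothetical, and ordinary least squares via the normal equations is assumed as the linear fitting method.

```python
import random

def solve_linear(A, y):
    """Solve A w = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [y[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors do not vary enough")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][k] * w[k] for k in range(i + 1, n))
        w[i] = (M[i][n] - s) / M[i][i]
    return w

def fit_subset(X, f, active):
    """Least-squares fit of Equation 1 using only the predictors in `active`.

    `active` holds column indices (0=G, 1=A, 2=L, 3=S); the coefficients of
    the remaining predictors stay at zero. Returns [a, b, c, d, e].
    """
    cols = list(active)
    rows = [[x[j] for j in cols] + [1.0] for x in X]   # trailing 1.0 is the intercept
    p = len(cols) + 1
    # Normal equations: (R^T R) w = R^T f
    RtR = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    Rtf = [sum(r[i] * fi for r, fi in zip(rows, f)) for i in range(p)]
    w = solve_linear(RtR, Rtf)
    coeffs = [0.0] * 5
    for j, wj in zip(cols, w[:-1]):
        coeffs[j] = wj
    coeffs[4] = w[-1]
    return coeffs

rng = random.Random(0)
# Synthetic stand-in data: 47 coded subjects and a made-up, noise-free response.
X = [tuple(rng.choice((1, 2)) for _ in range(4)) for _ in range(47)]
f = [0.3 * g + 0.1 * a + 1.0 for g, a, _, _ in X]

# Random 80/20 split into training and test indices.
idx = list(range(len(X)))
rng.shuffle(idx)
n_train = int(0.8 * len(idx))
train, test = idx[:n_train], idx[n_train:]

GA = (0, 1)   # gender and age only, so c = d = 0
coeffs = fit_subset([X[i] for i in train], [f[i] for i in train], GA)
print([round(c, 3) for c in coeffs])
```

Other combinations follow by changing `active`, for example `(2, 3)` for LS or `(0, 1, 2, 3)` for GALS.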

To evaluate the performance of the model, the mean error (difference between the real data and the estimated one) and the Root Mean Square Error (RMSE) are calculated. Table 1 outlines the results.

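[Table 1. Mean error and RMSE of the model for each combination of individual characteristics and each affective state]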
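The two performance measures can be written out as below. This is a sketch with made-up numbers; treating the mean error as a signed average is an assumption, since the text defines it only as the difference between the real and the estimated data.

```python
import math

def mean_error(actual, predicted):
    """Mean signed difference between measured and estimated values."""
    return sum(a - p for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean square error between measured and estimated values."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Made-up numbers purely to exercise the functions.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 1.5, 3.5, 3.5]
print(mean_error(actual, predicted), rmse(actual, predicted))
# prints: 0.0 0.5
```

The example shows why both measures are useful: signed errors can cancel to a mean of zero while the RMSE still reflects the size of the individual deviations.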

Different combinations of individual characteristics can serve to predict the HRV indices in different affective states (Table 1). Based on the mean error results, the LS and GL combinations best track the HRV changes due to happy stimuli, while GA outperforms the others for relaxation and fear. In addition, ALS and GALS give the best prediction of HRV indices for sad stimuli. Thus, different individual parameters are involved in predicting each emotional state. Results also differ when subjects are considered individually. The results of subject 2 are accurate in all emotional conditions.

4. Discussion

In the current study, a simple predictive model of emotion was presented based on an autonomic feature. For the first time, the importance of individual characteristics and their combinations was examined in the problem of emotion prediction based on an HRV parameter. Following a two-dimensional emotion theory, four categories of affective states were considered: happiness, relaxation, sadness, and fear. The ratio of HRV changes during rest to each affective state was taken as the dependent variable. An affect predictive model based on linear combinations of individual differences was proposed and showed acceptable performance.

The results of this study showed that different individual characteristics are involved in predicting the HRV indices of affective states. LS, GL, GA, ALS, and GALS are the preferred combinations for predicting HRV changes due to emotional provocations. It can be concluded that there is a close association between gender and physiological changes in emotional states. This result is consistent with the published literature, where the role of gender in emotion recognition has been widely stated (Chen et al., 2015). However, the role of indices such as sleep quantity or linguality on autonomic indices affected by visual affective stimuli has not been explored so far. Different results were obtained for each subject, and different combinations of individual characteristics contributed to the affect prediction. Previous studies confirm the role of subject differences in emotion perception (Donges, Kersting, & Suslow, 2012; Martin, Berry, Dobranski, Horne, & Dodgson, 1996). Differences in emotion perception arise from various individual characteristics, as well as past experiences of emotions (Barrett, Mesquita, Ochsner, & Gross, 2007). Based on the role of several individual characteristics, a new perspective on the emotion predictive model was presented in the current study. However, more data are required to establish the role of individual characteristics in predicting autonomic emotional states.

Appendix

Gender differences and emotions

A review of articles on emotion suggests that men and women employ different strategies to control and express their emotions. Compared to men, women experience positive and negative emotions more intensely (Grossman & Wood, 1993), and they are more emotional (Grewal & Salovey, 2005). When dealing with frightening situations, women have reported more fear (Gordon & Riger, 1991). Also, some studies have found different emotional valence and arousal ratings between the two genders (Murnen & Stockton, 1997): more emotional arousal in men and higher valence ratings in women. Evidence from some Electroencephalogram (EEG) studies has suggested higher brain activity in females compared to males. Guntekin and Basar (2007) observed a larger beta response in women when viewing facial expressions; however, it was independent of the type of emotion. Compared to men, women have shown greater electrodermal responses (Kring & Gordon, 1998), more facial electromyographic reactions, and higher heart rates (Bradley, Codispoti, Sabatinelli, & Lang, 2001) during unpleasant stimuli.

Age and emotional reactions

Previous works have confirmed a close relationship between age and the type of emotion to be recognized. Specifically, anger, sadness, fear, happiness, and surprise are identified less accurately by older adults than by younger participants (Isaacowitz et al., 2007; Ruffman, Henry, Livingstone, & Phillips, 2008). However, older adults could correctly recognize positive stimuli. In contrast, despite their higher recognition rates for negative stimuli, younger adults are easily distracted by these types of emotions (Thomas & Hasher, 2006). In older adults, low-arousal positive affect increased, and negative affect decreased across both low- and high-arousal levels; however, no age differences were observed in high-arousal positive affect (Kessler & Staudinger, 2009). To explain such positive affect in older adults, some scientists examined brain activity and claimed that changes in the functional organization of the brain are the main reason (Cacioppo, Berntson, Bechara, Tranel, & Hawkley, 2011). However, the literature also points to different strategies for regulating emotional reactions (Urry & Gross, 2010).

Bilingualism and emotion

There is great interest in emotional information processing in bilinguals. It has been proposed that the first language is the language of emotional expressiveness, whereas the second is the language of emotional distance (Dewaele, 2008; Pavlenko, 2002). In a study of the recall of emotional stimuli, the native language (Spanish) was compared with the second language (English) (Anooshian & Hertel, 1994). Results showed that emotional stimuli were recalled better than neutral ones in the first language. However, considering the valence dimension, altered emotional recall was reported in the native language (Aycicegi & Harris, 2004): negative stimuli (except for taboo ones) were recalled less than neutral ones. Shorter reaction times in monolinguals (Altarriba, 2006) and stronger emotional weight in the first language of bilinguals (Dewaele, 2008; Pavlenko, 2002) have been reported.

There is evidence that different structures and functions of the brain (Kim, Relkin, Lee, & Hirsch, 1997) are activated in bilinguals during the presentation of emotional stimuli. Regarding physiological responses, most attention has been devoted to the skin conductance (SC) responses of bilinguals (Caldwell-Harris & Aycicegi-Dinn, 2009; Harris, Aycicegi, & Gleason, 2003). Greater SC responses to emotional stimuli have been observed in monolinguals (Harris et al., 2003). The heart rates of Turkish-Persian and Kurdish-Persian bilinguals have also been evaluated (Bayrami et al., 2012). The authors found that in both groups of bilinguals, negative stimuli triggered a greater heart rate in the native language than in the second language.

Sleep duration and emotional responses

Short or long sleep duration has undesirable effects on mood, cognition, physiological function, alertness, and memory (Taub, 1980; Taub et al., 1971). According to the evidence, there is a close relationship between emotions and sleep (Berger, Miller, Seifer, Cares, & Lebourgeois, 2012; Walker & Harvey, 2010). Disrupted emotional memories, decreased emotional reactivity, weakened sensitivity to positive stimuli, and strengthened sensitivity to negative ones are some outcomes of sleep deficiency (Franzen et al., 2009; Gujar et al., 2011; Pilcher & Huffcutt, 1996; Sterpenich et al., 2007). Optimal processing and evaluation of emotion requires enough sleep. Insufficient sleep may cause biases in processing negative-valence stimuli (Gujar et al., 2011). Notably, augmented reactivity to negative emotions, including anger and fear, has been documented across a day without sleep (Gujar et al., 2011). Using functional magnetic resonance imaging, altered brain function (increased amygdala reactivity) in response to negative emotional stimuli has been reported after a night of sleep deprivation (Yoo et al., 2007). By evaluating autonomic reactivity, researchers demonstrated a larger pupillary response to negative pictures under sleep deprivation (Franzen et al., 2009).

5. Conclusion

A new perspective on the emotion predictive model was presented in the current study, based on the role of several individual characteristics. For modeling, we evaluated different models as well as different parameter values. It was desirable to choose the simplest and, at the same time, the most efficient model, and the proposed model was selected on this basis. However, this model may not work well for other HRV parameters. Moreover, more data are needed to establish the role of individual characteristics in the prediction of autonomic emotional states. The number of participants should be greatly increased so that this model can be used more confidently, and a larger sample should have enough variety in terms of gender, age, bilingualism, and sleep quantity.

Ethical Considerations

Compliance with ethical guidelines

All ethical principles were considered in this article. The participants were informed of the purpose of the research and its implementation stages. They were also assured about the confidentiality of their information and were free to leave the study whenever they wished, and if desired, the research results would be available to them. Written informed consent was obtained from the subjects. The principles of the Helsinki Convention were also observed.

Funding

This research did not receive any grant from funding agencies in the public, commercial, or non-profit sectors.

Authors' contributions

Conceptualization and Funding acquisition and Resources: All authors; Investigation, Data analysis, Methodology, Data collection and Writing-original draft: Atefeh Goshvarpour and Ateke Goshvarpour; Writing – review & editing: All authors.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors gratefully acknowledge the Computational Neuroscience Laboratory, where the data were collected, and all the subjects who volunteered for the study.

References

Altarriba, J. (2006). Cognitive approaches to the study of emotion-laden and emotion words in monolingual and bilingual memory. In A. Pavlenko (Ed.), Bilingual minds: Emotional experience, expression and representation (pp. 232-256). United Kingdom: Multilingual Matters. https://books.google.com/books/about/Bilingual_Minds.html?id=x0YWC_g23isC

Anooshian, J., & Hertel, P. (1994). Emotionality in free recall: Language specificity in bilingual memory. Cognition and Emotion, 8(6), 503-514. [DOI:10.1080/02699939408408956]

Aycicegi, A., & Harris, C. (2004). Bilinguals recall and recognition of emotion words. Cognition and Emotion, 18(7), 977-987. [DOI:10.1080/02699930341000301]

Bayrami, M., Ashayeri, H., Modarresi, Y., Bakhshipur, A., & Farhangdoost, H. (2012). [Physiological evidence for perceptive difference of emotional words among native and second language Turkish and Kurdish bilinguals (Persian)]. Journal of Kermanshah University of Medical Sciences, 16(5), 411-420. https://brieflands.com/articles/jkums-77356.html

Barrett, L., Mesquita, B., Ochsner, K., & Gross, J. (2007). The Experience of Emotion. Annual Review of Psychology, 58, 373-403. [DOI:10.1146/annurev.psych.58.110405.085709] [PMID] [PMCID]

Berger, R., Miller, A., Seifer, R., Cares, S., & Lebourgeois, M. (2012). Acute sleep restriction effects on emotion responses in 30- to 36-month-old children. Journal of Sleep Research, 21(3), 235-246. [DOI:10.1111/j.1365-2869.2011.00962.x] [PMID] [PMCID]

Bradley, M., Codispoti, M., Sabatinelli, D., & Lang, P. (2001). Emotion and motivation II: Sex differences in picture processing. Emotion, 1(3), 300-319. [DOI:10.1037/1528-3542.1.3.300] [PMID]

Cacioppo, J., Berntson, G., Bechara, A., Tranel, D., & Hawkley, L. (2011). Could an aging brain contribute to subjective well being: The value added by a social neuroscience perspective. In A. Todorov, S. Fiske, & D. Prentice (Eds.), Social neuroscience: Toward understanding the underpinnings of the social mind (pp. 249-262). New York: Oxford University Press. [DOI:10.1093/acprof:oso/9780195316872.003.0017]

Caldwell-Harris, C., & Aycicegi-Dinn, A. (2009). Emotion and lying in a non-native language. International Journal of Psychophysiology, 71(3), 193-204. [DOI:10.1016/j.ijpsycho.2008.09.006] [PMID]

Chang, C. Y., Zheng, J. Y., & Wang, C. J. (2010). Based on support vector regression for emotion recognition using physiological signals. International Joint Conference on Neural Networks (IJCNN), Barcelona, Spain, 18-23 July 2010. [DOI:10.1109/IJCNN.2010.5596878]

Chen, L.-F., Liu, Z.-T., Wu, M., Ding, M., Dong, F.-Y., & Hirota, K. (2015). Emotion-age-gender-nationality based intention understanding in human-robot interaction using two-layer fuzzy support vector regression. International Journal of Social Robotics, 7(5), 709-729. [DOI:10.1007/s12369-015-0290-2]

Choi, A., & Woo, W. (2005). Physiological sensing and feature extraction for emotion recognition by exploiting acupuncture spots. In J. Tao, T. Tan, & R. W. Picard (Eds.), 1st International Conference in Affective Computing and Intelligent Interaction (pp. 590-597). Berlin: Springer-Verlag. [DOI:10.1007/11573548_76]

Dewaele, J. M. (2008). The emotional weight of I love you in multilinguals’ languages. Journal of Pragmatics, 40(10), 1753-1780. [DOI:10.1016/j.pragma.2008.03.002]

Donges, U.-S., Kersting, A., & Suslow, T. (2012). Women’s greater ability to perceive happy facial emotion automatically: Gender differences in affective priming. PLoS One, 7(7), e41745. [DOI:10.1371/journal.pone.0041745] [PMID] [PMCID]

Frantzidis, C., Bratsas, C., Papadelis, C., Konstantinidis, E., Pappas, C., & Bamidis, P. D. (2010). Toward emotion aware computing: An integrated approach using multichannel neurophysiological recordings and affective visual stimuli. IEEE Transactions on Information Technology in Biomedicine, 14(3), 589-597. [DOI:10.1109/TITB.2010.2041553] [PMID]

Franzen, P., Buysse, D., Dahl, R., Thompson, W., & Siegle, G. (2009). Sleep deprivation alters pupillary reactivity to emotional stimuli in healthy young adults. Biological Psychology, 80(3), 300-305. [DOI:10.1016/j.biopsycho.2008.10.010] [PMID] [PMCID]

Gordon, M., & Riger, S. (1991). The female fear: The social costs of rape. Chicago: University of Illinois Press. https://books.google.com/books/about/The_Female_Fear.html?id=4N6i2tfpp5sC

Goshvarpour, A., Abbasi, A., & Goshvarpour, A. (2015). Affective visual stimuli: Characterization of the picture sequences impacts by means of nonlinear approaches. Basic and Clinical Neuroscience, 6(4), 209-221. [PMCID]

Greco, A., Valenza, G., Lanata, A., Rota, G., & Scilingo, E. (2014). Electrodermal activity in bipolar patients during affective elicitation. IEEE Journal of Biomedical and Health Informatics, 18(6), 1865-1873. [DOI:10.1109/JBHI.2014.2300940] [PMID]

Grewal, D., & Salovey, P. (2005). Feeling smart: The science of emotional intelligence. American Scientist, 93(4), 330-339. [DOI:10.1511/2005.4.330]

Grossman, M., & Wood, W. (1993). Sex differences in intensity of emotional experience: A social role interpretation. Journal of Personality and Social Psychology, 65(5), 1010-1022. [DOI:10.1037//0022-3514.65.5.1010] [PMID]

Gujar, N., Mcdonald, S., Nishida, M., & Walker, M. (2011). A role for REM sleep in recalibrating the sensitivity of the human brain to specific emotions. Cerebral Cortex, 21(1), 115-123. [DOI:10.1093/cercor/bhq064] [PMID] [PMCID]

Guntekin, B., & Basar, E. (2007). Gender differences influence brain’s beta oscillatory responses in recognition of facial expressions. Neuroscience Letters, 424(4), 94-99. [DOI:10.1016/j.neulet.2007.07.052] [PMID]

Haag, A., Goronzy, S., Schaich, P., & Williams, J. (2004). Emotion recognition using bio-sensors: First steps towards an automatic system. In Tutorial and research workshop on affective dialogue systems (pp. 36-48). Berlin: Springer. [DOI:10.1007/978-3-540-24842-2_4]

Harris, C., Aycicegi, A., & Gleason, J. (2003). Taboo words and reprimands elicit greater autonomic reactivity in a first language than in a second language. Applied Psycholinguistics, 24(4), 561-579. [DOI:10.1017/S0142716403000286]

Isaacowitz, D., Löckenhoff, C., Lane, R., Wright, R., Sechrest, L., Riedel, R., et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging, 22(1), 147-159. [DOI:10.1037/0882-7974.22.1.147] [PMID]

Jang, E. H., Park, B. J., Park, M. S., Kim, S. H., & Sohn, J. H. (2015). Analysis of physiological signals for recognition of boredom, pain, and surprise emotions. Journal of Physiological Anthropology, 34(1), 25. [DOI:10.1186/s40101-015-0063-5] [PMID] [PMCID]

Katsis, C., Katertsidis, N., & Fotiadis, D. (2011). An Integrated System Based on Physiological Signals for the Assessment of Affective States in Patients with Anxiety Disorders. Biomedical Signal Processing and Control, 6(3), 261-268. [DOI:10.1016/j.bspc.2010.12.001]

Katsis, C., Katertsidis, N., Ganiatsas, G., & Fotiadis, D. (2008). Toward emotion recognition in car-racing drivers: A biosignal processing approach. IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, 38(3), 502-512. [DOI:10.1109/TSMCA.2008.918624]

Kessler, E. M., & Staudinger, U. (2009). Affective experience in adulthood and old age: The role of affective arousal and perceived affect regulation. Psychology and Aging, 24(2), 349-362. [DOI:10.1037/a0015352] [PMID]

Kim, K., Bang, S., & Kim, S. (2004). Emotion recognition system using short-term monitoring of physiological signals. Medical and Biological Engineering and Computing, 42(3), 419-427. [DOI:10.1007/BF02344719] [PMID]

Kim, K., Relkin, N., Lee, K., & Hirsch, J. (1997). Distinct cortical areas associated with native and second languages. Nature, 388(6638), 171-174. [PMID]

Kreibig, S. (2010). Autonomic nervous system activity in emotion: A review. Biological Psychology, 84(3), 394-421. [DOI:10.1016/j.biopsycho.2010.03.010] [PMID]

Kring, A., & Gordon, A. (1998). Sex differences in emotion: Expression, experience, and physiology. Journal of Personality and Social Psychology, 74(3), 686-703. [DOI:10.1037/0022-3514.74.3.686] [PMID]

Lang, P., Bradley, M., & Cuthbert, B. (2005). International affective picture system (IAPS): digitized photographs, instruction manual and affective ratings, Technical Report A-6. Gainesville, FL: University of Florida. [DOI:10.1037/t66667-000]

Li, L., & Chen, J. H. (2006, November). Emotion recognition using physiological signals. In International conference on artificial reality and telexistence (pp. 437-446). Berlin: Springer. [DOI:10.1007/11941354_44]

Liu, C., Conn, K., Sarkar, N., & Stone, W. (2008). Physiology-based affect recognition for computer-assisted intervention of children with autism spectrum disorder. International Journal of Human-Computer Studies, 66(9), 662-677. [DOI:10.1016/j.ijhcs.2008.04.003]

Martin, R., Berry, G., Dobranski, T., Horne, M., & Dodgson, P. (1996). Emotion perception threshold: Individual differences in emotional sensitivity. Journal of Research in Personality, 30(2), 290-305. [DOI:10.1006/jrpe.1996.0019]

Murnen, S., & Stockton, M. (1997). Gender and self-reported sexual arousal in response to sexual stimulation: A meta-analytic review. Sex Roles, 37(3-4), 135-153. [DOI:10.1023/A:1025639609402]

Nardelli, M., Valenza, G., Greco, A., Lanata, A., & Scilingo, E. (2015). Recognizing emotions induced by affective sounds through heart rate variability. IEEE Transactions on Affective Computing, 6(4), 385-394. [DOI:10.1109/TAFFC.2015.2432810]

Niu, X., Chen, L., & Chen, Q. (2011). Research on genetic algorithm based on emotion recognition using physiological signals. In International Conference on Computational Problem-Solving (ICCP) (pp. 614-618). Chengdu: IEEE. [DOI:10.1109/ICCPS.2011.6092256]

Pavlenko, A. (2002). Bilingualism and emotions. Multilingualism, 21(1), 45-78. [DOI:10.1515/mult.2002.004]

Picard, R., Vyzas, E., & Healey, J. (2001). Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(10), 1175-1191. [DOI:10.1109/34.954607]

Pilcher, J., & Huffcutt, A. (1996). Effects of sleep deprivation on performance: A meta-analysis. Sleep, 19(4), 318-326. [DOI:10.1093/sleep/19.4.318] [PMID]

Rainville, P., Bechara, A., Naqvi, N., & Damasio, A. (2006). Basic emotions are associated with distinct patterns of cardiorespiratory activity. International Journal of Psychophysiology, 61(1), 5-18. [DOI:10.1016/j.ijpsycho.2005.10.024] [PMID]

Rani, P., Liu, C., Sarkar, N., & Vanman, E. (2006). An empirical study of machine learning techniques for affect recognition in human-robot interaction. Pattern Analysis and Applications, 9(1), 58-69. [DOI:10.1007/s10044-006-0025-y]

Ruffman, T., Henry, J., Livingstone, V., & Phillips, L. (2008). A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience & Biobehavioral Reviews, 32(4), 863-881. [DOI:10.1016/j.neubiorev.2008.01.001] [PMID]

Sterpenich, V., Albouy, G., Boly, M., Vandewalle, G., Darsaud, A., Balteau, E., et al. (2007). Sleep-related hippocampo-cortical interplay during emotional memory recollection. PLoS Biology, 5(11), e282. [DOI:10.1371/journal.pbio.0050282] [PMID] [PMCID]

Taub, J. (1980). Effects of ad lib extended-delayed sleep on sensorimotor performance, memory and sleepiness in the young adult. Physiology & Behavior, 25(1), 77-87. [DOI:10.1016/0031-9384(80)90185-7]

Taub, J., Globus, G., Phoebus, E., & Drury, R. (1971). Extended sleep and performance. Nature, 233(5315), 142-143. [DOI:10.1038/233142a0] [PMID]

Thomas, R., & Hasher, L. (2006). The influence of emotional valence on age differences in early processing and memory. Psychology and Aging, 21(4), 821-825. [DOI:10.1037/0882-7974.21.4.821] [PMID] [PMCID]

Urry, H., & Gross, J. (2010). Emotion regulation in older age. Current Directions in Psychological Science, 19(6), 352-357. [DOI:10.1177/0963721410388395]

Valenza, G., Citi, L., Lanata, A., Scilingo, E., & Barbieri, R. (2014). Revealing real-time emotional responses: A personalized assessment based on heartbeat dynamics. Scientific Reports, 4, 4998. [DOI:10.1038/srep04998] [PMID] [PMCID]

Walker, M., & Harvey, A. (2010). Obligate symbiosis: Sleep and affect. Sleep Medicine Reviews, 14(4), 215-217. [DOI:10.1016/j.smrv.2010.02.003] [PMID]

Yannakakis, G., & Hallam, J. (2008). Entertainment modeling through physiology in physical play. International Journal of Human-Computer Studies, 66(10), 741-755. [DOI:10.1016/j.ijhcs.2008.06.004]

Yoo, S., Lee, C., Park, Y., Kim, N., Lee, B., & Jeong, K. (2005). Neural network based emotion estimation using heart rate variability and skin resistance. In L. Wang, K. Chen, & Y.S. Ong (Eds.), 1st International Conference in Advances in Natural Computation (pp. 818-824). Berlin: Springer-Verlag. [DOI:10.1007/11539087_110]

Yoo, S. S., Gujar, N., Peter, H., Jolesz, F., & Walker, M. (2007). The human emotional brain without sleep - a prefrontal amygdala disconnect. Current Biology: CB, 17(20), R877-R878. [DOI:10.1016/j.cub.2007.08.007] [PMID]

Zhai, J., & Barreto, A. (2006). Stress detection in computer users based on digital signal processing of noninvasive physiological variables. 28th Annual International Conference Engineering in Medicine and Biology Society (pp. 1355-1358). New York City: IEEE. [DOI:10.1109/IEMBS.2006.259421]

Emotions play a crucial role in health, social relationships, and daily functions. Given this importance, emotion recognition via physiological parameters (including Galvanic Skin Response (GSR), respiration, Electrocardiogram (ECG), blood pressure, or Electroencephalogram (EEG)) has attracted several researchers in the field of affective computing (Frantzidis et al., 2010; Goshvarpour et al., 2015; Nardelli et al., 2015; Valenza et al., 2014). Among these, Autonomic Nervous System (ANS) activity is a fundamental component in many recent theories of emotion. Of all autonomic measures, Heart Rate (HR), along with Heart Rate Variability (HRV), is the most often reported (Kreibig, 2010). Numerous approaches, such as standard features and nonlinear indices, have been used in the literature to analyze the HRV signal quantitatively. However, the main focus has been on simple standard features (Chang, Zheng, & Wang, 2010; Choi & Woo, 2005; Greco, Valenza, Lanata, Rota, & Scilingo, 2014; Haag, Goronzy, Schaich, & Williams, 2004; Jang, Park, Park, Kim, & Sohn, 2015; Katsis, Katertsidis, Ganiatsas, & Fotiadis, 2008; Katsis, Katertsidis, & Fotiadis, 2011; Kim, Bang, & Kim, 2004; Li & Chen, 2006; Liu, Conn, Sarkar, & Stone, 2008; Niu, Chen, & Chen, 2011; Picard, Vyzas, & Healey, 2001; Rainville, Bechara, Naqvi, & Damasio, 2006; Rani, Liu, Sarkar, & Vanman, 2006; Yannakakis & Hallam, 2008; Yoo, Lee, Park, Kim, Lee, & Jeong, 2005; Zhai & Barreto, 2006).

Different HRV patterns have also been reported for different emotion-related autonomic responses (Kreibig, 2010). Many studies have analyzed these differences in the physiological mechanism of emotional reactions as a function of individual variables such as age, gender, and linguality, as well as other factors like sleep duration (Bayrami et al., 2012; Chen, Liu, Wu, Ding, Dong, & Hirota, 2015; Franzen, Buysse, Dahl, Thompson, & Siegle, 2009; Yoo, Gujar, Peter, Jolesz, & Walker, 2007). The appendix presents a short review of this literature. These studies mainly evaluated one or two parameters separately; the relations and interactions between these factors in emotional reactions were not considered simultaneously. For example: 1) Are the emotional responses of the two genders the same under insufficient sleep? 2) If the subject's age is also considered, how do the emotional responses change? 3) Which factor has the greatest effect on emotional autonomic changes? It is supposed that individual characteristics jointly affect emotional responses. Therefore, these relationships and interactions should be considered in an affect analysis system.

The present study aimed to evaluate simultaneously the effects of age, gender, linguality, and sleep duration on the autonomic responses associated with emotional inductions, and it attempted to offer a predictive model for these interactions. The rest of this manuscript is organized as follows. Section 2 describes the materials and methods used in this work. Section 3 reports the experimental results of the proposed procedure. Section 4 discusses the findings, and Section 5 presents the study's conclusion.

2. Materials and Methods

Data collection

The ECG of 47 college students attending the Sahand University of Technology was collected. All participants were Iranian students. To elicit emotions in the participants, images from the International Affective Picture System (IAPS) were used (Lang, Bradley, & Cuthbert, 2005). Based on the dimensional structure of the emotional space, the IAPS images were chosen to correspond to four classes of emotions (Goshvarpour et al., 2015): relaxation, happiness, sadness, and fear. Upon arrival in the laboratory, all participants were asked to read and sign a consent form to participate in the experiment. All participants reported no history of neurological, cardiovascular, epileptic, or hypertensive disease. The subjects were asked not to consume caffeine or salty or fatty foods for two hours before data recording and to remain still during the experiment, particularly avoiding movements of their fingers, hands, and legs.

The procedure took about 15 minutes, and images were presented after two minutes of rest. In the initial baseline measurement, subjects were instructed to keep their eyes open and watch a blank screen. Then, 28 blocks of pictorial stimuli were shown on the screen in random order to prevent habituation, and the blocks were balanced among subjects. Each block consisted of five pictures from the same emotional class displayed for about 15 s, with a 10-s blank screen period at the end. Presenting pictures of the same class within a block was intended to ensure the stability of the emotion over time. The blank screen period was applied to allow physiological fluctuations to return to baseline and to ensure regularity in the presentation of different emotional images. The blank screen was followed by a white plus sign (for 3 s) to prompt the subjects to concentrate, look at the center of the screen, and prepare for the next block. Subjects were also asked to self-assess their emotional states. Figure 1 illustrates the protocol.

Figure 1. Protocol description
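As a quick consistency check on the protocol timing, the sketch below adds up the durations stated above. It assumes that 15 s is the length of a whole block (not of each picture) and ignores the time spent on self-assessment; the constant and function names are illustrative only.

```python
# Timing values taken from the protocol description; treating 15 s as the
# duration of a whole block (not of each picture) is an assumption.
REST_S = 2 * 60        # initial baseline with a blank screen
N_BLOCKS = 28          # blocks of pictorial stimuli
BLOCK_S = 15           # five pictures of one emotional class
BLANK_S = 10           # blank screen after each block
FIXATION_S = 3         # white plus sign before the next block


def session_duration_s(n_blocks: int = N_BLOCKS) -> int:
    """Return the total session length in seconds."""
    per_block = BLOCK_S + BLANK_S + FIXATION_S
    return REST_S + n_blocks * per_block


total = session_duration_s()
print(f"{total} s = {total / 60:.1f} min")
```

Under these assumptions the total is 904 s, which agrees with the stated duration of about 15 minutes.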

All signals were recorded in the computational neuroscience laboratory of the Sahand University of Technology. A 16-channel PowerLab (manufactured by ADInstruments) with a sampling rate of 400 Hz was used for recording, and a digital notch filter was applied to remove power-line noise.

The emotional model

A linear relationship between variables is often sufficient to describe the observed data, and it also makes a reasonable prediction of new observations possible. Previous studies have shown that changes in HRV can serve as a valuable and effective tool for analyzing affective states. Consequently, in the current study, the ratio of HRV changes during rest to each emotional state is taken as the dependent (or response) variable to model the affective states.

It has been shown that (refer to the appendix): first, women experience emotions more intensely. Second, older adults apply different strategies in emotion regulation and show heightened positive emotions. Third, different brain functions are activated in bilinguals during the presentation of emotional stimuli. Fourth, augmented reactivity to negative emotions has been reported after sleep deprivation. Therefore, stronger emotional weights should be considered for women, older subjects, bilinguals, and sleep-deprived participants.

Based on the individual characteristics and the HRV index, the evaluative model for emotion recognition can be expressed as a regression equation. Consequently, the evaluation index of affective autonomic changes can be calculated by Equation 1.

$$f = a x_1 + b x_2 + c x_3 + d x_4 + e \tag{1}$$

In this equation, x1 represents gender (G): 1 for men and 2 for women. x2 is the subject's age (A) range: 1 for subjects in the age range 19-22 years and 2 for subjects in the age range 22-25 years. x3 carries the linguality (L) information, which is coded 1 if the subject is monolingual and 2 if the subject is bilingual. Sleep (S) is coded by x4: 1 for subjects with normal sleep and 2 for sleep-deprived subjects. The ratio of HRV changes during rest to each affective state is captured by f. Therefore, f1, f2, f3, and f4 are formed for the happiness, relaxation, sadness, and fear affective states, respectively.
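The coding scheme and Equation 1 can be illustrated with a minimal sketch. The `Subject` class, the `encode` and `predict` helpers, and the coefficient values are hypothetical and serve only to show how the 1/2 codes enter the linear model; because the two stated age ranges share the boundary value 22, assigning it to the younger group is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subject:
    is_female: bool
    age_years: float
    is_bilingual: bool
    is_sleep_deprived: bool


def encode(subject: Subject) -> tuple:
    """Map a subject to (x1, x2, x3, x4) using the 1/2 coding of Equation 1."""
    x1 = 2 if subject.is_female else 1
    # The text gives the ranges 19-22 and 22-25; assigning age 22 to the
    # younger group is an assumption made only for this sketch.
    x2 = 1 if subject.age_years <= 22 else 2
    x3 = 2 if subject.is_bilingual else 1
    x4 = 2 if subject.is_sleep_deprived else 1
    return (x1, x2, x3, x4)


def predict(x, coeffs, intercept):
    """Evaluate f = a*x1 + b*x2 + c*x3 + d*x4 + e."""
    return sum(k * v for k, v in zip(coeffs, x)) + intercept


# Placeholder coefficients (a, b, c, d) and constant e; these are NOT
# values reported in the study.
coeffs = (0.10, 0.05, 0.02, 0.03)
intercept = 0.5

x = encode(Subject(is_female=True, age_years=23,
                   is_bilingual=False, is_sleep_deprived=True))
print(x, predict(x, coeffs, intercept))
```

One such prediction would be made per affective state, with a separate set of coefficients for each of f1 to f4.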

3. Results

Eighty percent of the recorded data were randomly selected to estimate the coefficients a, b, c, d, and e by a linear fit of the HRV data, and the remaining 20% were used for testing. Different combinations of individual characteristics were also considered in the model by constraining the coefficients. For instance, to evaluate the role of age and gender (GA) in the model, the coefficients c and d are set to zero. As a result, the following conditions are considered in the model evaluation (Equation 2):

[Equation 2]
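The fitting procedure described above can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the data are synthetic and noise-free, the least-squares fit is solved through the normal equations, all 15 non-empty subsets of G, A, L, and S are enumerated (the exact set of conditions in Equation 2 is not recoverable here), and the helper names `solve` and `fit` are hypothetical.

```python
import itertools
import random

FACTORS = "GALS"  # gender, age, linguality, sleep -> x1..x4


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in reversed(range(n)):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def fit(X, y, combo):
    """Least-squares fit using only the factors in `combo`; the remaining
    coefficients stay at zero, as in the GA example from the text."""
    idx = [FACTORS.index(ch) for ch in combo]
    rows = [[x[i] for i in idx] + [1.0] for x in X]  # last column -> e
    p = len(idx) + 1
    # Normal equations: (R^T R) z = R^T y
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    Aty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(p)]
    z = solve(AtA, Aty)
    coeffs = [0.0] * 4
    for i, value in zip(idx, z[:-1]):
        coeffs[i] = value
    return coeffs, z[-1]


# Synthetic, noise-free data for illustration only (not the study's data).
rng = random.Random(0)
X = [x for x in itertools.product((1, 2), repeat=4) for _ in range(3)]
true_coeffs, true_e = (0.4, -0.2, 0.1, 0.3), 1.0
y = [sum(k * v for k, v in zip(true_coeffs, x)) + true_e for x in X]

order = list(range(len(X)))
rng.shuffle(order)
n_train = int(0.8 * len(order))  # 80% for fitting, 20% for testing
train, test = order[:n_train], order[n_train:]

combos = ["".join(c) for k in range(1, 5)
          for c in itertools.combinations(FACTORS, k)]
coeffs, e = fit([X[i] for i in train], [y[i] for i in train], "GALS")
print(len(combos), [round(v, 3) for v in coeffs], round(e, 3))
```

With noise-free data the full GALS fit recovers the generating coefficients; with real recordings, each combination would instead be compared on the held-out 20%.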

To evaluate the performance of the model, the mean error (the difference between the observed data and the estimated values) and the Root Mean Square Error (RMSE) were calculated. Table 1 outlines the results.

Table 1. Mean error and RMSE of the model
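The two performance measures can be computed as in the following sketch. Whether the mean error in the study is signed or absolute is not stated; the signed form is assumed here, and the numbers are made up for illustration rather than taken from Table 1.

```python
import math


def mean_error(observed, estimated):
    """Mean signed difference between observed and estimated values."""
    return sum(o - p for o, p in zip(observed, estimated)) / len(observed)


def rmse(observed, estimated):
    """Root mean square error between observed and estimated values."""
    squared = sum((o - p) ** 2 for o, p in zip(observed, estimated))
    return math.sqrt(squared / len(observed))


# Made-up numbers for illustration; they are not results from Table 1.
observed = [1.0, 2.0, 3.0, 4.0]
estimated = [1.5, 1.5, 3.5, 3.5]
print(mean_error(observed, estimated), rmse(observed, estimated))
```

In this example the signed errors cancel to a mean error of 0.0 while the RMSE is 0.5, which shows why reporting both measures is informative.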

Different combinations of individual characteristics can serve to predict the HRV indices in different affective states (Table 1). Based on the mean error results, the LS and GL combinations can track the HRV changes due to happy stimuli, while GA outperforms the others for relaxation and fear. In addition, ALS and GALS result in the best prediction of HRV indices for sad stimuli. Thus, different individual parameters are involved in predicting each emotional state. The results also differ when subjects are considered individually. The results of subject 2 are accurate in all emotional conditions.

4. Discussion

In the current study, a simple predictive model of emotion was presented based on an autonomic feature. For the first time, the importance of individual characteristics and their combinations was examined in the problem of emotion prediction based on an HRV parameter. Following a two-dimensional emotion theory, four categories of affective states were introduced: happiness, relaxation, sadness, and fear. The ratio of HRV changes during rest to each affective state was taken as the dependent variable. An affect predictive model based on linear combinations of individual differences was proposed, with acceptable performance.

The results of this study showed that different subjective characteristics are involved in predicting HRV indices of affective states. LS, GL, GA, ALS, and GALS are the preferred combinations for predicting HRV changes due to emotional provocations. It can be concluded that a close association exists between gender and physiological changes in emotional states. This finding is consistent with published articles that report the role of gender in emotion recognition (Chen et al., 2015). However, the role of indices such as sleep quantity or linguality in autonomic responses to visual affective stimuli has not been explored so far. Different results were obtained for each subject, and different combinations of individual characteristics contributed to the affect prediction. Previous studies confirm the role of subject differences in emotion perception (Donges, Kersting, & Suslow, 2012; Martin, Berry, Dobranski, Horne, & Dodgson, 1996). Differences in emotion perception arise from various individual characteristics as well as past experiences of emotions (Barrett, Mesquita, Ochsner, & Gross, 2007). Based on the role of several individual characteristics, a new perspective on emotion prediction modeling was presented in the current study. However, more data are required to establish the role of individual characteristics in predicting autonomic emotional states.

Appendix

Gender differences and emotions

A review of articles on emotion suggests that men and women employ different strategies to control and express their emotions. Compared to men, women experience positive and negative emotions more intensely (Grossman & Wood, 1993), and they are more emotional (Grewal & Salovey, 2005). In frightening situations, women have reported more fear (Gordon & Riger, 1991). Some studies have also found different emotional valence and arousal ratings between the two genders (Murnen & Stockton, 1997): more emotional arousal in men and higher valence ratings in women. Some Electroencephalogram (EEG) studies have suggested higher brain activity in females than in males. Guntekin and Basar (2007) observed a larger beta response in women viewing facial expressions; however, it was independent of the type of emotion. In women, greater electrodermal responses (Kring & Gordon, 1998), stronger facial electromyographic reactions, and higher heart rates (Bradley, Codispoti, Sabatinelli, & Lang, 2001) have been observed during unpleasant stimuli compared to men.

Age and emotional reactions

Previous works confirm a close relation between age and the type of emotion to be recognized. Specifically, anger, sadness, fear, happiness, and surprise are identified less accurately by older adults than by younger participants (Isaacowitz et al., 2007; Ruffman, Henry, Livingstone, & Phillips, 2008). However, older adults could correctly recognize positive stimuli. In contrast, despite their higher recognition rates for negative stimuli, younger adults are easily distracted by these types of emotions (Thomas & Hasher, 2006). In older adults, low-arousal positive affect increased, and negative affect at both low- and high-arousal levels decreased; however, no age differences were observed in high-arousal positive affect (Kessler & Staudinger, 2009). To explain this positivity in older adults, some scientists examined brain activity. They claimed that changes in the functional organization of the brain are the main reason (Cacioppo, Bernston, Bechara, Tranel, & Hawkley, 2011). However, the literature also suggests that different strategies are used to regulate emotional reactions (Urry & Gross, 2010).

Bilingualism and emotion

There is great interest in emotional information processing in bilinguals. The first language has been regarded as the language of emotional expressiveness, whereas the second is a language of emotional distance (Dewaele, 2008; Pavlenko, 2002). The recall of emotional stimuli in the native language (Spanish) has been compared with that in the second language (English) (Anooshian & Hertel, 1994). Results showed that emotional stimuli were recalled better than neutral ones in the first language. However, considering the valence dimension, altered emotional recall in the native language has been reported (Aycicegi & Harris, 2004): negative stimuli (except for taboo words) were recalled less than neutral ones. Shorter reaction times in monolinguals (Altarriba, 2006) and stronger emotional weight in the first language of bilinguals (Dewaele, 2008; Pavlenko, 2002) have also been reported.

There is evidence that different structures and functions of the brain (Kim, Relkin, Lee, & Hirsch, 1997) are activated in bilinguals during the presentation of emotional stimuli. Regarding physiological responses, most attention has been devoted to the skin conductance (SC) responses of bilinguals (Caldwell-Harris & Aycicegi-Dinn, 2009; Harris, Aycicegi, & Gleason, 2003). Greater SC responses to emotional stimuli have been observed in monolinguals (Harris et al., 2003). The heart rates of Turkish-Persian and Kurdish-Persian bilinguals have also been evaluated (Bayrami et al., 2012). The authors found that in both groups of bilinguals, negative stimuli triggered a greater heart rate in the native language than in the second language.

Sleep duration and emotional responses

Short or long sleep duration has undesirable effects on mood, cognition, physiological function, alertness, and memory (Taub, 1980; Taub et al., 1971). Evidence indicates a close relationship between emotions and sleep (Berger, Miller, Seifer, Cares, & Lebourgeois, 2012; Walker & Harvey, 2010). Disrupted emotional memories, decreased emotional reactivity, weakened sensitivity to positive stimuli, and strengthened sensitivity to negative ones are some outcomes of sleep deficiency (Franzen et al., 2009; Gujar et al., 2011; Pilcher & Huffcutt, 1996; Sterpenich et al., 2007). Sufficient sleep is needed for optimal processing and evaluation of emotion. Insufficient sleep may bias the processing of negative-valence stimuli (Gujar et al., 2011). Notably, augmented reactivity to negative emotions, including anger and fear, has been documented across a day without sleep (Gujar et al., 2011). Using functional magnetic resonance imaging, altered brain function (augmented amygdala reactivity) in response to negative emotional stimuli has been reported after a night of sleep deprivation (Yoo et al., 2007). By evaluating autonomic reactivity, researchers demonstrated a larger pupillary response to negative pictures under sleep deprivation (Franzen et al., 2009).

5. Conclusion

A new perspective on emotion prediction modeling was presented in the current study, based on the role of several individual characteristics. For modeling, we evaluated different models as well as different parameter values, aiming to choose the simplest and, at the same time, the most efficient model. The proposed model was selected on this basis. However, this model may not work well for other HRV parameters. Moreover, more data are needed to establish the role of individual characteristics in the prediction of autonomic emotional states. The number of participants should be greatly increased so that this model can be used more confidently, and a larger sample should have enough variety in terms of gender, age, bilingualism, and sleep quantity.

Ethical Considerations

Compliance with ethical guidelines

All ethical principles were considered in this article. The participants were informed of the purpose of the research and its implementation stages. They were also assured of the confidentiality of their information, were free to leave the study whenever they wished, and could receive the research results if desired. Written informed consent was obtained from the subjects. The principles of the Helsinki Convention were also observed.

Funding

This research did not receive any grant from funding agencies in the public, commercial, or non-profit sectors.

Authors' contributions

Conceptualization, Funding acquisition, and Resources: All authors; Investigation, Data analysis, Methodology, Data collection, and Writing - original draft: Atefeh Goshvarpour and Ateke Goshvarpour; Writing - review & editing: All authors.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors gratefully acknowledge the Computational Neuroscience Laboratory, where the data were collected, and all the subjects who volunteered for the study.

References

Altarriba, J. (2006). Cognitive approaches to the study of emotion-laden and emotion words in monolingual and bilingual memory. In A. Pavlenko (Ed.), Bilingual minds: Emotional experience, expression and representation (pp. 232-256). United Kingdom: Multilingual Matters. https://books.google.com/books/about/Bilingual_Minds.html?id=x0YWC_g23isC

Anooshian, J., & Hertel, P. (1994). Emotionality in free recall: Language specificity in bilingual memory. Cognition and Emotion, 8(6), 503-514. [DOI:10.1080/02699939408408956]

Aycicegi, A., & Harris, C. (2004). Bilinguals recall and recognition of emotion words. Cognition and Emotion, 18(7), 977-987. [DOI:10.1080/02699930341000301]

Barrett, L., Mesquita, B., Ochsner, K., & Gross, J. (2007). The Experience of Emotion. Annual Review of Psychology, 58, 373-403. [DOI:10.1146/annurev.psych.58.110405.085709] [PMID] [PMCID]

Bayrami, M., Ashayeri, H., Modarresi, Y., Bakhshipur, A., & Farhangdoost, H. (2012). [Physiological evidence for perceptive difference of emotional words among native and second language Turkish and Kurdish bilinguals (Persian)]. Journal of Kermanshah University of Medical Sciences, 16(5), 411-420. https://brieflands.com/articles/jkums-77356.html

Berger, R., Miller, A., Seifer, R., Cares, S., & Lebourgeois, M. (2012). Acute sleep restriction effects on emotion responses in 30- to 36-month-old children. Journal of Sleep Research, 21(3), 235-246. [DOI:10.1111/j.1365-2869.2011.00962.x] [PMID] [PMCID]

Bradley, M., Codispoti, M., Sabatinelli, D., & Lang, P. (2001). Emotion and motivation II: Sex differences in picture processing. Emotion, 1(3), 300-319. [DOI:10.1037/1528-3542.1.3.300] [PMID]

Cacioppo, J., Bernston, G., Bechara, A., Tranel, D., & Hawkley, L. (2011). Could an aging brain contribute to subjective well being: The value added by a social neuroscience perspective. In A. Todorov, S. Fiske, & D. Prentice (Eds.), Social neuroscience: Toward understanding the underpinnings of the social mind (pp. 249-262). New York: Oxford University Press. [DOI:10.1093/acprof:oso/9780195316872.003.0017]

Caldwell-Harris, C., & Aycicegi-Dinn, A. (2009). Emotion and lying in a non-native language. International Journal of Psychophysiology, 71(3), 193-204. [DOI:10.1016/j.ijpsycho.2008.09.006] [PMID]

Chang, C. Y., Zheng, J. Y., & Wang, C. J. (2010). Based on support vector regression for emotion recognition using physiological signals. International Joint Conference on Neural Networks (IJCNN), Barcelona, Spain, 18-23 July 2010. [DOI:10.1109/IJCNN.2010.5596878]

Chen, L.-F., Liu, Z.-T., Wu, M., Ding, M., Dong, F.-Y., & Hirota, K. (2015). Emotion-age-gender-nationality based intention understanding in human-robot interaction using two-layer fuzzy support vector regression. International Journal of Social Robotics, 7(5), 709-729. [DOI:10.1007/s12369-015-0290-2]

Choi, A., & Woo, W. (2005). Physiological sensing and feature extraction for emotion recognition by exploiting acupuncture spots. In J. Tao, T. Tan, & R. W. Picard (Eds.), 1st International Conference in Affective Computing and Intelligent Interaction (pp. 590-597). Berlin: Springer-Verlag. [DOI:10.1007/11573548_76]

Dewaele, J. M. (2008). The emotional weight of I love you in multilinguals’ languages. Journal of Pragmatics, 40(10), 1753-1780. [DOI:10.1016/j.pragma.2008.03.002]

Donges, U.-S., Kersting, A., & Suslow, T. (2012). Women’s greater ability to perceive happy facial emotion automatically: Gender differences in affective priming. PLoS One, 7(7), e41745. [DOI:10.1371/journal.pone.0041745] [PMID] [PMCID]

Frantzidis, C., Bratsas, C., Papadelis, C., Konstantinidis, E., Pappas, C., & Bamidis, P. D. (2010). Toward emotion aware computing: An integrated approach using multichannel neurophysiological recordings and affective visual stimuli. IEEE Transactions on Information Technology in Biomedicine, 14(3), 589-597. [DOI:10.1109/TITB.2010.2041553] [PMID]

Franzen, P., Buysse, D., Dahl, R., Thompson, W., & Siegle, G. (2009). Sleep deprivation alters pupillary reactivity to emotional stimuli in healthy young adults. Biological Psychology, 80(3), 300-305. [DOI:10.1016/j.biopsycho.2008.10.010] [PMID] [PMCID]

Gordon, M., & Riger, S. (1991). The female fear: The social costs of rape. Chicago: University of Illinois Press. https://books.google.com/books/about/The_Female_Fear.html?id=4N6i2tfpp5sC

Goshvarpour, A., Abbasi, A., & Goshvarpour, A. (2015). Affective visual stimuli: Characterization of the picture sequences impacts by means of nonlinear approaches. Basic and Clinical Neuroscience, 6(4), 209-221. [PMCID]

Greco, A., Valenza, G., Lanata, A., Rota, G., & Scilingo, E. (2014). Electrodermal activity in bipolar patients during affective elicitation. IEEE Journal of Biomedical and Health Informatics, 18(6), 1865-1873. [DOI:10.1109/JBHI.2014.2300940] [PMID]

Grewal, D., & Salovey, P. (2005). Feeling smart: The science of emotional intelligence. American Scientist, 93(4), 330-339. [DOI:10.1511/2005.4.330]

Grossman, M., & Wood, W. (1993). Sex differences in intensity of emotional experience: A social role interpretation. Journal of Personality and Social Psychology, 65(5), 1010-1022. [DOI:10.1037//0022-3514.65.5.1010] [PMID]

Gujar, N., Mcdonald, S., Nishida, M., & Walker, M. (2011). A role for REM sleep in recalibrating the sensitivity of the human brain to specific emotions. Cerebral Cortex, 21(1), 115-123. [DOI:10.1093/cercor/bhq064] [PMID] [PMCID]

Guntekin, B., & Basar, E. (2007). Gender differences influence brain’s beta oscillatory responses in recognition of facial expressions. Neuroscience Letters, 424(4), 94-99. [DOI:10.1016/j.neulet.2007.07.052] [PMID]

Haag, A., Goronzy, S., Schaich, P., & Williams, J. (2004). Emotion recognition using bio-sensors: First steps towards an automatic system. In Tutorial and research workshop on affective dialogue systems (pp. 36-48). Berlin: Springer. [DOI:10.1007/978-3-540-24842-2_4]

Harris, C., Aycicegi, A., & Gleason, J. (2003). Taboo words and reprimands elicit greater autonomic reactivity in a first language than in a second language. Applied Psycholinguistics, 24(4), 561-579. [DOI:10.1017/S0142716403000286]

Isaacowitz, D., Löckenhoff, C., Lane, R., Wright, R., Sechrest, L., Riedel, R., et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging, 22(1), 147-159. [DOI:10.1037/0882-7974.22.1.147] [PMID]

Jang, E. H., Park, B. J., Park, M. S., Kim, S. H., & Sohn, J. H. (2015). Analysis of physiological signals for recognition of boredom, pain, and surprise emotions. Journal of Physiological Anthropology, 34(1), 25. [DOI:10.1186/s40101-015-0063-5] [PMID] [PMCID]

Katsis, C., Katertsidis, N., & Fotiadis, D. (2011). An Integrated System Based on Physiological Signals for the Assessment of Affective States in Patients with Anxiety Disorders. Biomedical Signal Processing and Control, 6(3), 261-268. [DOI:10.1016/j.bspc.2010.12.001]

Katsis, C., Katertsidis, N., Ganiatsas, G., & Fotiadis, D. (2008). Toward emotion recognition in car-racing drivers: A biosignal processing approach. IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, 38(3), 502-512. [DOI:10.1109/TSMCA.2008.918624]

Kessler, E. M., & Staudinger, U. (2009). Affective experience in adulthood and old age: The role of affective arousal and perceived affect regulation. Psychology and Aging, 24(2), 349-362. [DOI:10.1037/a0015352] [PMID]

Kim, K., Bang, S., & Kim, S. (2004). Emotion recognition system using short-term monitoring of physiological signals. Medical and Biological Engineering and Computing, 42(3), 419-427. [DOI:10.1007/BF02344719] [PMID]

Kim, K., Relkin, N., Lee, K., & Hirsch, J. (1997). Distinct cortical areas associated with native and second languages. Nature, 388(6638), 171-174. [PMID]

Kreibig, S. (2010). Autonomic nervous system activity in emotion: A review. Biological Psychology, 84(3), 394-421. [DOI:10.1016/j.biopsycho.2010.03.010] [PMID]

Kring, A., & Gordon, A. (1998). Sex differences in emotion: Expression, experience, and physiology. Journal of Personality and Social Psychology, 74(3), 686-703. [DOI:10.1037/0022-3514.74.3.686] [PMID]

Lang, P., Bradley, M., & Cuthbert, B. (2005). International affective picture system (IAPS): digitized photographs, instruction manual and affective ratings, Technical Report A-6. Gainesville, FL: University of Florida. [DOI:10.1037/t66667-000]

Li, L., & Chen, J. H. (2006, November). Emotion recognition using physiological signals. In International conference on artificial reality and telexistence (pp. 437-446). Berlin: Springer. [DOI:10.1007/11941354_44]

Liu, C., Conn, K., Sarkar, N., & Stone, W. (2008). Physiology-based affect recognition for computer-assisted intervention of children with autism spectrum disorder. International Journal of Human-Computer Studies, 66(9), 662-677. [DOI:10.1016/j.ijhcs.2008.04.003]

Martin, R., Berry, G., Dobranski, T., Horne, M., & Dodgson, P. (1996). Emotion perception threshold: Individual differences in emotional sensitivity. Journal of Research in Personality, 30(2), 290-305. [DOI:10.1006/jrpe.1996.0019]

Murnen, S., & Stockton, M. (1997). Gender and self-reported sexual arousal in response to sexual stimulation: A meta-analytic review. Sex Roles, 37(3-4), 135-153. [DOI:10.1023/A:1025639609402]

Nardelli, M., Valenza, G., Greco, A., Lanata, A., & Scilingo, E. (2015). Recognizing emotions induced by affective sounds through heart rate variability. IEEE Transactions on Affective Computing, 6(4), 385-394. [DOI:10.1109/TAFFC.2015.2432810]

Niu, X., Chen, L., & Chen, Q. (2011). Research on genetic algorithm based on emotion recognition using physiological signals. In International Conference on Computational Problem-Solving (ICCP) (pp. 614-618). Chengdu: IEEE. [DOI:10.1109/ICCPS.2011.6092256]

Pavlenko, A. (2002). Bilingualism and emotions. Multilingualism, 21(1), 45-78. [DOI:10.1515/mult.2002.004]

Picard, R., Vyzas, E., & Healey, J. (2001). Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(10), 1175-1191. [DOI:10.1109/34.954607]

Pilcher, J., & Huffcutt, A. (1996). Effects of sleep deprivation on performance: A meta-analysis. Sleep, 19(4), 318-326. [DOI:10.1093/sleep/19.4.318] [PMID]

Rainville, P., Bechara, A., Naqvi, N., & Damasio, A. (2006). Basic emotions are associated with distinct patterns of cardiorespiratory activity. International Journal of Psychophysiology, 61(1), 5-18. [DOI:10.1016/j.ijpsycho.2005.10.024] [PMID]

Rani, P., Liu, C., Sarkar, N., & Vanman, E. (2006). An empirical study of machine learning techniques for affect recognition in human-robot interaction. Pattern Analysis and Applications, 9(1), 58-69. [DOI:10.1007/s10044-006-0025-y]

Ruffman, T., Henry, J., Livingstone, V., & Phillips, L. (2008). A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience & Biobehavioral Reviews, 32(4), 863-881. [DOI:10.1016/j.neubiorev.2008.01.001] [PMID]

Sterpenich, V., Albouy, G., Boly, M., Vandewalle, G., Darsaud, A., Balteau, E., et al. (2007). Sleep-related hippocampo-cortical interplay during emotional memory recollection. PLoS Biology, 5(11), e282. [DOI:10.1371/journal.pbio.0050282] [PMID] [PMCID]

Taub, J. (1980). Effects of ad lib extended-delayed sleep on sensorimotor performance, memory and sleepiness in the young adult. Physiology & Behavior, 25(1), 77-87. [DOI:10.1016/0031-9384(80)90185-7]

Taub, J., Globus, G., Phoebus, E., & Drury, R. (1971). Extended sleep and performance. Nature, 233(5315), 142-143. [DOI:10.1038/233142a0] [PMID]

Thomas, R., & Hasher, L. (2006). The influence of emotional valence on age differences in early processing and memory. Psychology and Aging, 21(4), 821-825. [DOI:10.1037/0882-7974.21.4.821] [PMID] [PMCID]

Urry, H., & Gross, J. (2010). Emotion regulation in older age. Current Directions in Psychological Science, 19(6), 352-357. [DOI:10.1177/0963721410388395]

Valenza, G., Citi, L., Lanata, A., Scilingo, E., & Barbieri, R. (2014). Revealing real-time emotional responses: A personalized assessment based on heartbeat dynamics. Scientific Reports, 4, 4998. [DOI:10.1038/srep04998] [PMID] [PMCID]

Walker, M., & Harvey, A. (2010). Obligate symbiosis: Sleep and affect. Sleep Medicine Reviews, 14(4), 215-217. [DOI:10.1016/j.smrv.2010.02.003] [PMID]

Yannakakis, G., & Hallam, J. (2008). Entertainment modeling through physiology in physical play. International Journal of Human-Computer Studies, 66(10), 741-755. [DOI:10.1016/j.ijhcs.2008.06.004]

Yoo, S., Lee, C., Park, Y., Kim, N., Lee, B., & Jeong, K. (2005). Neural network based emotion estimation using heart rate variability and skin resistance. In L. Wang, K. Chen, & Y.S. Ong (Eds.), 1st International Conference in Advances in Natural Computation (pp. 818-824). Berlin: Springer-Verlag. [DOI:10.1007/11539087_110]

Yoo, S. S., Gujar, N., Peter, H., Jolesz, F., & Walker, M. (2007). The human emotional brain without sleep - a prefrontal amygdala disconnect. Current Biology: CB, 17(20), R877-R878. [DOI:10.1016/j.cub.2007.08.007] [PMID]

Zhai, J., & Barreto, A. (2006). Stress detection in computer users based on digital signal processing of noninvasive physiological variables. 28th Annual International Conference Engineering in Medicine and Biology Society (pp. 1355-1358). New York City: IEEE. [DOI:10.1109/IEMBS.2006.259421]

Type of Study: Original | Subject: Computational Neuroscience

Received: 2016/03/15 | Accepted: 2020/10/11 | Published: 2022/05/1

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.