Volume 15, Issue 3 (May & Jun 2024)

BCN 2024, 15(3): 393-402

Vafaei E, Rahatabad F N, Setarehdan S K, Azadfallah P. Feature Extraction With Stacked Autoencoders for EEG Channel Reduction in Emotion Recognition. BCN 2024; 15 (3) :393-402

URL: http://bcn.iums.ac.ir/article-1-2646-en.html

Elnaz Vafaei1, Fereidoun Nowshiravan Rahatabad *1, Seyed Kamaledin Setarehdan2, Parviz Azadfallah3

1- Department of Biomedical Engineering, Faculty of Medical Sciences and Technologies, Science and Research Branch, Islamic Azad University, Tehran, Iran.

2- School of Electrical and Computer Engineering, University of Tehran, Tehran, Iran.

3- Faculty of Humanities, Tarbiat Modares University, Tehran, Iran.

Keywords: Deep learning, Stacked auto-encoder, Channel reduction, Electroencephalogram (EEG) analysis, Emotion

1. Introduction

Emotions are among the most important factors in daily human activities, chiefly because they shape healthy relationships in social environments (Wei et al., 2020). Identifying emotion is the first step toward understanding how people interact with their environment, and it has become an active research topic for regulating positive and negative emotional states (Saleh Khajeh Hosseini et al., 2022). Emotions can be identified qualitatively by assessing facial expressions (Schirmer, 2017). Analysis of brain signals offers a quantitative alternative: brain-computer interface systems have achieved high classification performance on emotional states using machine learning.

Brain signals are produced by the central nervous system (Topic & Russo, 2021). They can be recorded non-invasively from the scalp with measuring devices such as the electroencephalograph, which measures the electrical activity generated by the brain (Li et al., 2018). Electroencephalography (EEG) has a high temporal resolution and can reflect the strength and position of brain activity. The signals are measured by electrodes mounted on the scalp according to the 10-20 electrode system. A channel is obtained from the voltage difference between two electrodes, and the set of channels together forms the EEG recording. Depending on the required measurement accuracy, the EEG signal is recorded with 24, 32, 64, 128, or 256 channels (Candra et al., 2017). Recognizing emotions from EEG signals depends on several factors, including the extracted features and the number of channels used during recording (Candra et al., 2017). As the number of channels increases, measurement accuracy improves; however, a large number of electrodes lengthens the recording process, which is inconvenient for both the physician and the patient, and raises the cost of recording. Reducing the number of channels while maintaining the quality of the recording can therefore shorten recording time and lower costs. For example, Jana and Mukherjee (2021) presented an efficient seizure prediction technique using a convolutional neural network with optimized EEG channels.

Feature extraction is the most important step in EEG signal processing for emotion recognition. It follows EEG preprocessing, which includes removing electromyography and electrooculography artifacts. Many researchers are interested in automated feature learning, for which deep neural networks provide acceptable results. Deep learning is an active topic in machine learning for EEG signal analysis, and deep networks can be trained in both unsupervised and supervised modes. These networks have provided acceptable results in reducing the input space of large databases, such as brain signal data (Yin et al., 2017). Stacked autoencoder (SAE) networks are a deep learning method that can automatically extract complex, nonlinear, abstract features from a signal. Jose et al. (2021) developed a stacked autoencoder structure to extract low-level and high-level features in deep layers. These features represent the signal well and are therefore a good basis for evaluating the importance of the presence or absence of each channel (Candra et al., 2017; Yin et al., 2017). SAEs can thus be effective in reducing the number of channels based on feature fusion. For EEG channel reduction, Candra et al. (2017) applied wavelet features to classify emotion and achieved 76.8% accuracy for valence and 74.3% for arousal.

In this study, the optimal features of EEG signals are extracted using stacked autoencoder networks. A deep learning method is proposed that extracts important features from EEG signals for emotion recognition. The SAEs extract linear or nonlinear combinations of temporal, frequency, and linear features. A channel reduction method is also applied to emotion classification along two dimensions, arousal and valence. Accordingly, the combination of features produced by the SAEs is proposed as an EEG emotion feature for classifying low/high states of the valence and arousal dimensions. The importance of each channel is evaluated based on the features obtained from the SAE networks. This approach can reduce the number of channels and the time needed both to prepare for and to carry out signal recording. The proposed method is described in the next section.

The remainder of this paper is organized as follows. Section 2 describes the database, the standard EEG features related to emotion recognition along the arousal and valence dimensions, the structure of the stacked autoencoder network, the classifiers, and the channel reduction method. Section 3 reports the results of the experiments, including the effect of the channel reduction algorithm on classification with the support vector machine (SVM) algorithm and the channel locations related to the valence and arousal dimensions. Sections 4 and 5 present the discussion and the conclusion.

2. Materials and Methods

This section has six parts: the database used in this study, EEG data processing (preprocessing and feature extraction), the SAE network, the classifiers, the technique for reducing the number of channels, and the performance evaluation criteria used to assess the proposed method.

DEAP database

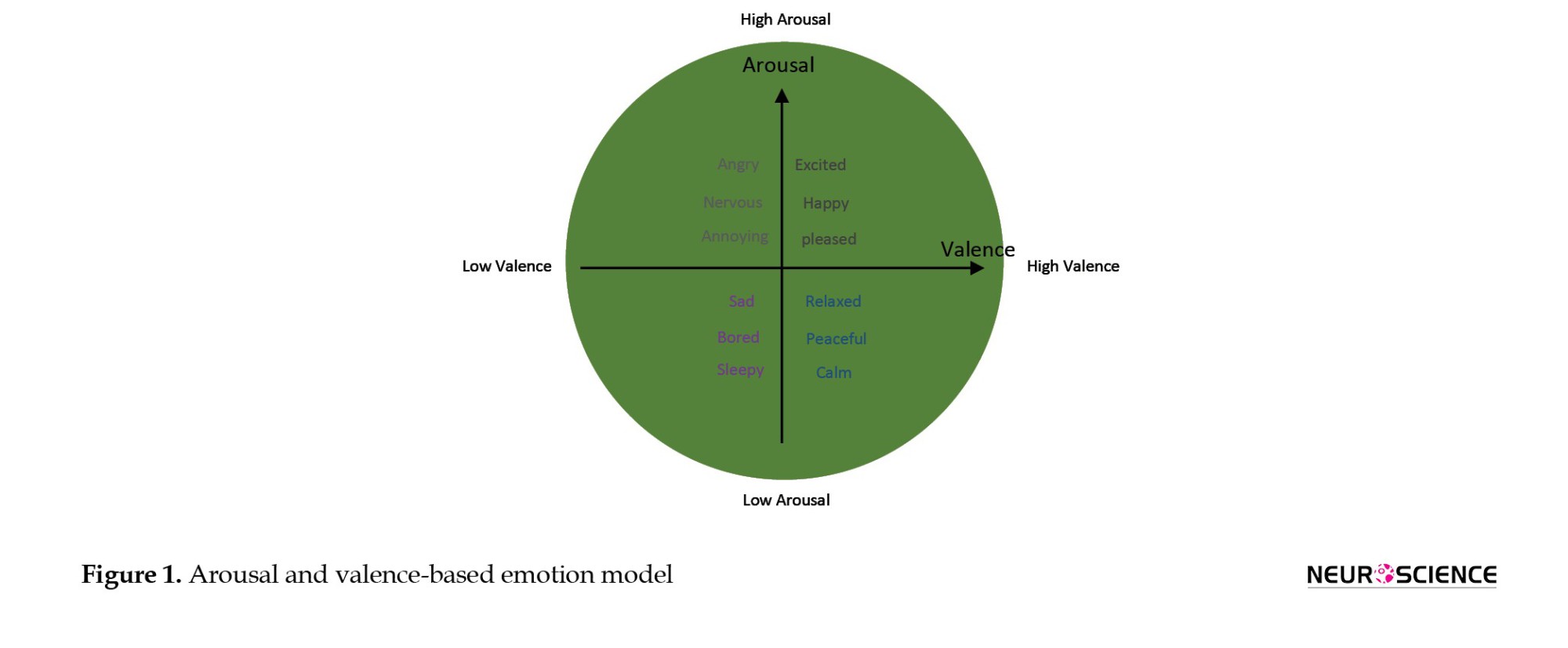

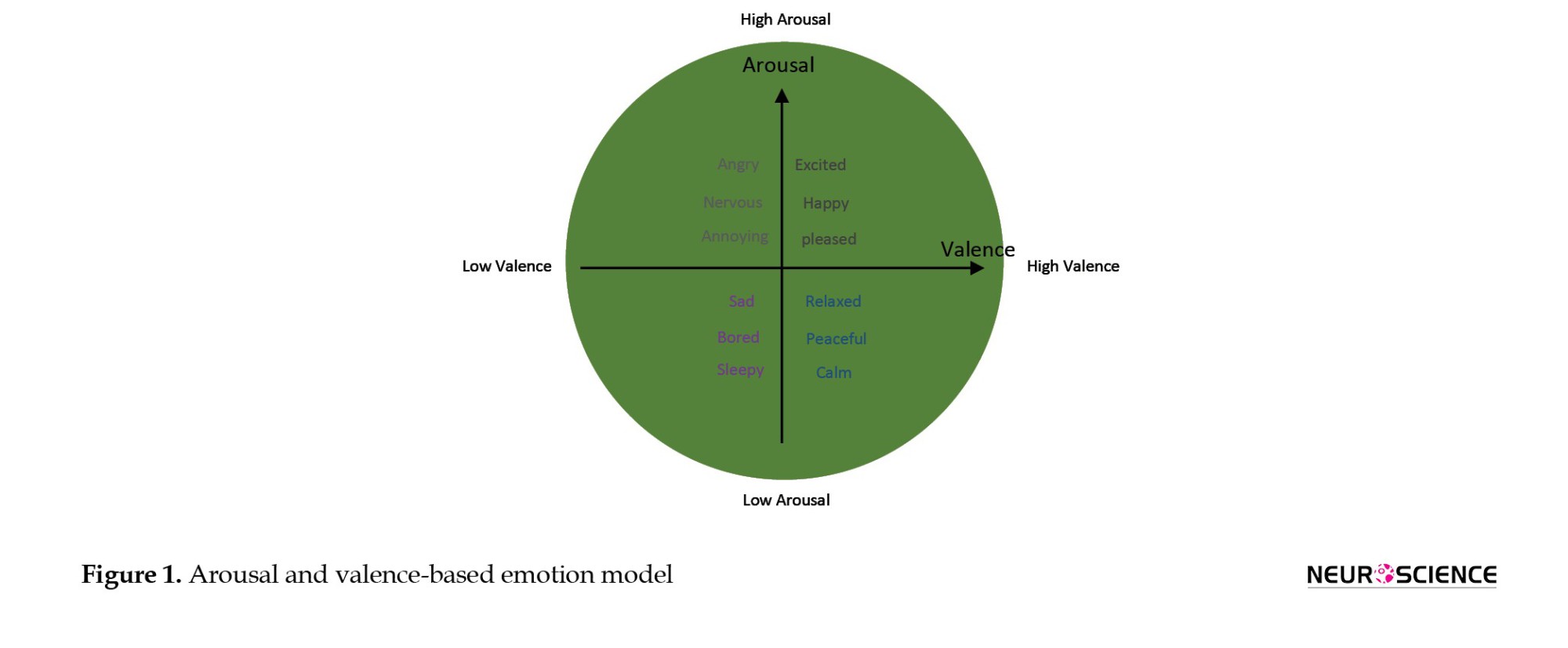

This study uses the DEAP physiological database, collected by Koelstra et al. (2012) to analyze emotional states. Among the electrophysiological signals recorded in the database is the EEG signal, acquired with 32 electrodes placed according to the international 10–20 system. The EEG signal was recorded from 32 participants with a mean age of 28 years, 16 of whom were female, so the data were not gender biased. The emotional stimuli were 40 one-minute music videos, selected to cover 4 classes of emotion: high arousal-high valence, high arousal-low valence, low arousal-high valence, and low arousal-low valence. Participants were then asked to rate their emotions on the valence and arousal dimensions using the Self-Assessment Manikin (SAM) questionnaire. The EEG signal was sampled at 256 Hz at recording time and then down-sampled to 128 Hz. A 5-min baseline signal was recorded for each participant, with a 3-second interval between videos to let the individual’s emotional state settle (Koelstra et al., 2012). The arousal-valence model is illustrated in Figure 1.

EEG data processing

The main objective of the present study is the classification of human emotional states. Artifacts and noise, including electromyography and electrooculography signals, are removed by independent component analysis (Ashtiyani et al., 2008). Based on previous studies in emotion recognition, EEG features fall into three main categories (time, frequency, and time-frequency), all of which are considered in this study (Chen et al., 2020). The most common features in emotion recognition studies are power, mean, standard deviation, zero-crossing rate, entropy, fractal dimension, and correlation dimension, as listed in Table 1 (Wang & Wang, 2021; Yu & Wang, 2022). We computed these features over 1-s, 2-s, 4-s, and 8-s windows (w) with 50% overlap and normalized all features to the range zero to one. The normalized features are the inputs to the stacked autoencoders, which combine them into the best abstracted features. The sampling frequency was 128 Hz, so one second of EEG contains 128 samples. With “m” as the duration of the signal in seconds, 10 features (Table 1), and 32 channels, the size of the input is “n=(32×10×m×50%)/w,” where n is the dimension of the extracted features at the input.
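The windowing and normalization steps described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names are hypothetical, only four of the simpler features (mean, standard deviation, power, zero-crossing rate) are computed, and the exact definitions used in Table 1 are assumed, since the table is not reproduced here.

```python
import math
from typing import Dict, List, Sequence


def sliding_windows(signal: Sequence[float], fs: int, win_sec: float,
                    overlap: float = 0.5) -> List[Sequence[float]]:
    """Split one channel into fixed-length windows with fractional overlap."""
    size = int(win_sec * fs)                      # samples per window
    step = max(1, int(size * (1.0 - overlap)))    # hop between window starts
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def window_features(window: Sequence[float]) -> Dict[str, float]:
    """Compute a few simple time-domain features (assumed definitions)."""
    n = len(window)
    if n < 2:
        raise ValueError("window must contain at least two samples")
    mean = sum(window) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in window) / n)
    power = sum(v * v for v in window) / n
    # Fraction of consecutive sample pairs whose sign changes.
    crossings = sum(1 for a, b in zip(window, window[1:]) if a * b < 0)
    return {"mean": mean, "std": std, "power": power, "zcr": crossings / (n - 1)}


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Scale one feature across windows to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                                  # constant feature
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

For an 8-s signal at 128 Hz, `sliding_windows(signal, fs=128, win_sec=2.0)` returns seven windows of 256 samples each, and each feature would be normalized across windows before being passed to the autoencoder.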

Stacked autoencoder for feature extraction

Autoencoder networks are a deep learning method with a symmetrical structure. Each autoencoder has three layers (an input layer, a hidden layer, and an output layer), which form the encoder and decoder parts. The output of the first encoder serves as the input of the second autoencoder, and this process is repeated to build an SAE. The features extracted from the DEAP database are the input of the SAE. At the SAE level, the network is trained on unlabeled data in an unsupervised manner (Chai et al., 2016). The flowchart of the proposed method is illustrated in Figure 2.
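The stacking described above, where each encoder's output feeds the next encoder, can be illustrated with a short forward-pass sketch. It assumes sigmoid encoders and already-trained weights; the names `encode` and `stacked_encode` are hypothetical, and returning the deepest hidden activation as the abstracted representation is a common convention assumed here, not something the text specifies.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]  # (weight matrix W, bias vector b)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def encode(x: Sequence[float], weights: Matrix, bias: Sequence[float]) -> List[float]:
    """One encoder layer: h = sigmoid(W x + b)."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def stacked_encode(x: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Pass the input through each encoder in turn (decoders are dropped)."""
    h = list(x)
    for weights, bias in layers:
        h = encode(h, weights, bias)   # output of one encoder feeds the next
    return h
```

With two layers of shapes 2×3 and 1×2, a 3-dimensional input is compressed to a single value; training the weights (layer-wise pretraining followed by fine-tuning) is outside the scope of this sketch.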

The last decoder is removed, and the weights and biases of the remaining network provide the final abstracted features. Fine-tuning, which sets the SAE parameters for emotion classification, is the most important part of the classification stage. It uses labeled data to train all layers at once (Yin et al., 2017) (Equations 1, 2, 3, and 4).

In Equation 1, x∈R^n is the input vector (the features extracted from the raw EEG), h∈R^p is the hidden-layer vector, and p is the dimension of the abstracted features. σ denotes the sigmoid function, W∈R^(p×n) is a weight matrix, and b∈R^p is a bias vector, as shown in Equation 2. In Equation 3, x'∈R^n is the reconstructed output, whose dimension equals that of x; it is reconstructed from the hidden layer through the decoder weights. Equation 4 defines the squared-error cost function E, which is minimized when the input and its reconstruction are most similar. The weights and biases of the last hidden layer, obtained by fine-tuning while minimizing the reconstruction loss, are the features extracted from the SAE network.
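The equations themselves are not reproduced in this text. A standard autoencoder formulation consistent with the symbol definitions above would read as follows; the decoder parameters W' and b', the factor 1/2 in the cost, and the mapping of each line to an equation number are assumptions rather than the authors' exact notation.

```latex
\begin{align}
h &= \sigma(Wx + b), \qquad W \in \mathbb{R}^{p \times n},\; b \in \mathbb{R}^{p} \tag{1}\\
\sigma(z) &= \frac{1}{1 + e^{-z}} \tag{2}\\
x' &= \sigma(W'h + b'), \qquad W' \in \mathbb{R}^{n \times p},\; b' \in \mathbb{R}^{n} \tag{3}\\
E &= \frac{1}{2} \sum_{i=1}^{n} \left(x_i - x'_i\right)^2 \tag{4}
\end{align}
```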

Classification

The features obtained from the SAE networks are used to classify the emotion classes. The k-nearest neighbor (KNN), naive Bayes (BN), and SVM classifiers are considered because they are widely used in emotion recognition (Yu & Wang, 2022). The architecture of the presented model is illustrated in Figure 1; it comprises extraction of primary features from the raw EEG signal, application of SAEs to obtain abstracted features, and emotion classification from the optimal features.

Channel reduction

Many studies use all EEG channels for emotion recognition. However, some indicate that not all channels are significant (Candra et al., 2017). Using all 32 channels is uncomfortable for doctors and patients during EEG recording. Emotion recognition with a minimum number of channels therefore has clear advantages, namely shorter computation time and greater time efficiency. This motivates a channel reduction method for emotion classification.

In the proposed method, the importance of each channel is evaluated individually, based on the accuracy of the classifier in the absence of that channel. First, the accuracy of emotion classification with all 32 channels is calculated. Then each channel is left out in turn, and the average accuracy is recalculated with the remaining channels. If the average accuracy does not decrease, the omitted channel is considered unimportant and is removed, and the procedure continues. Table 2 presents the channel reduction algorithm.
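The leave-one-channel-out idea described above can be sketched as a backward elimination loop. This is one plausible reading of the procedure, not the algorithm in Table 2 (which is not reproduced here): the `evaluate` callback, the `tolerance` parameter, and the toy accuracy function are all hypothetical and stand in for training and scoring the SAE plus classifier on a channel subset.

```python
from typing import Callable, List, Sequence


def reduce_channels(channels: Sequence[str],
                    evaluate: Callable[[List[str]], float],
                    tolerance: float = 0.0) -> List[str]:
    """Drop channels whose removal does not lower accuracy; repeat until stable."""
    kept = list(channels)
    baseline = evaluate(kept)              # accuracy with the current channel set
    changed = True
    while changed and len(kept) > 1:
        changed = False
        for ch in list(kept):
            if len(kept) == 1:
                break
            candidate = [c for c in kept if c != ch]
            acc = evaluate(candidate)
            if acc >= baseline - tolerance:    # accuracy not decreased
                kept = candidate               # channel judged unimportant
                baseline = acc
                changed = True
    return kept


def toy_accuracy(subset: List[str]) -> float:
    """Toy stand-in: only three channels carry information (illustrative)."""
    informative = {"F3", "C4", "O1"}
    return 0.5 + 0.1 * len(informative.intersection(subset))
```

With the toy scorer, `reduce_channels(["Fp1", "F3", "C4", "Pz", "O1"], toy_accuracy)` keeps only F3, C4, and O1. In practice `evaluate` would be expensive, since each call retrains and cross-validates the model on the candidate subset.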

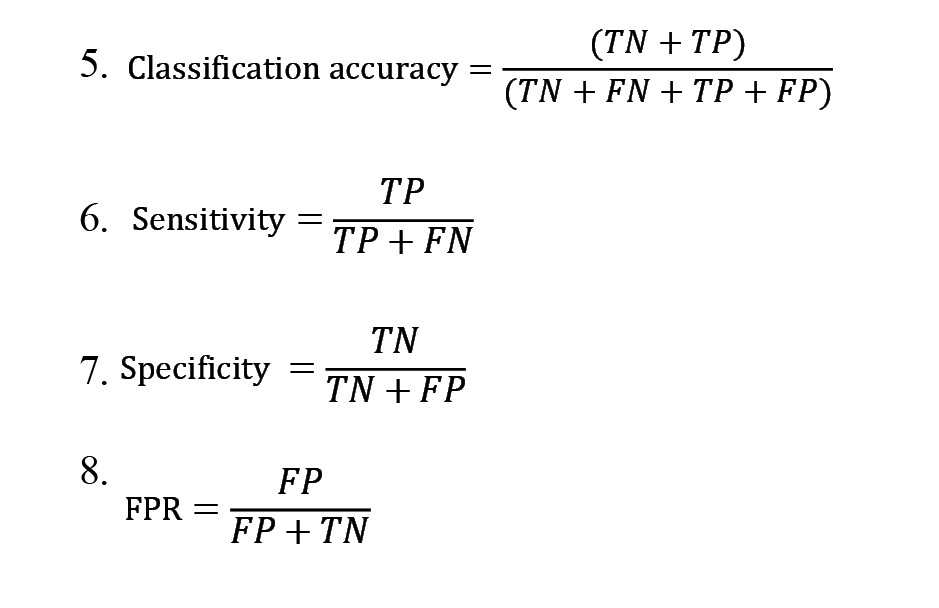

Performance evaluation metrics

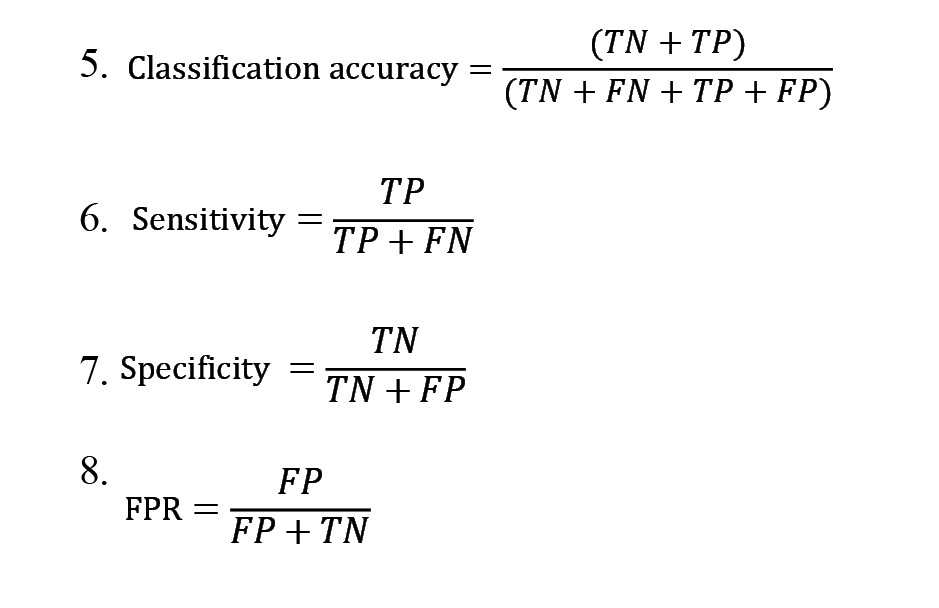

Several criteria are used to evaluate the performance of the proposed method; they should properly reflect the effect of the presence or absence of each channel. Classification accuracy, sensitivity, specificity, and false-positive rate (FPR) are calculated using Equations 5, 6, 7, and 8, respectively, in which “TP” stands for true positive, “FP” for false positive, “FN” for false negative, and “TN” for true negative (Bajpai et al., 2021).
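Equations 5 to 8 are not reproduced in this text. Assuming they follow the standard confusion-matrix definitions, the four metrics can be computed as in the sketch below; the function name is hypothetical.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Standard binary metrics from confusion-matrix counts."""
    positives = tp + fn      # actual positive samples
    negatives = tn + fp      # actual negative samples
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative sample")
    return {
        "accuracy": (tp + tn) / (positives + negatives),
        "sensitivity": tp / positives,    # true-positive rate
        "specificity": tn / negatives,    # true-negative rate
        "fpr": fp / negatives,            # equals 1 - specificity
    }
```

For example, with TP=40, FP=15, FN=10, and TN=35, the accuracy is 0.75, the sensitivity 0.8, the specificity 0.7, and the FPR 0.3.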

3. Results

This study proposes an emotion recognition method using EEG signals. The SAE network is one of the most practical deep learning techniques; here it is used to extract and fuse the important features hidden in raw EEG signals for classification. Recording brain signals with a 32-channel EEG system is uncomfortable for doctors and patients. A channel reduction technique that maintains the quality of the recording and the main features of the brain signals is therefore needed to reduce the number of channels for emotion recognition.

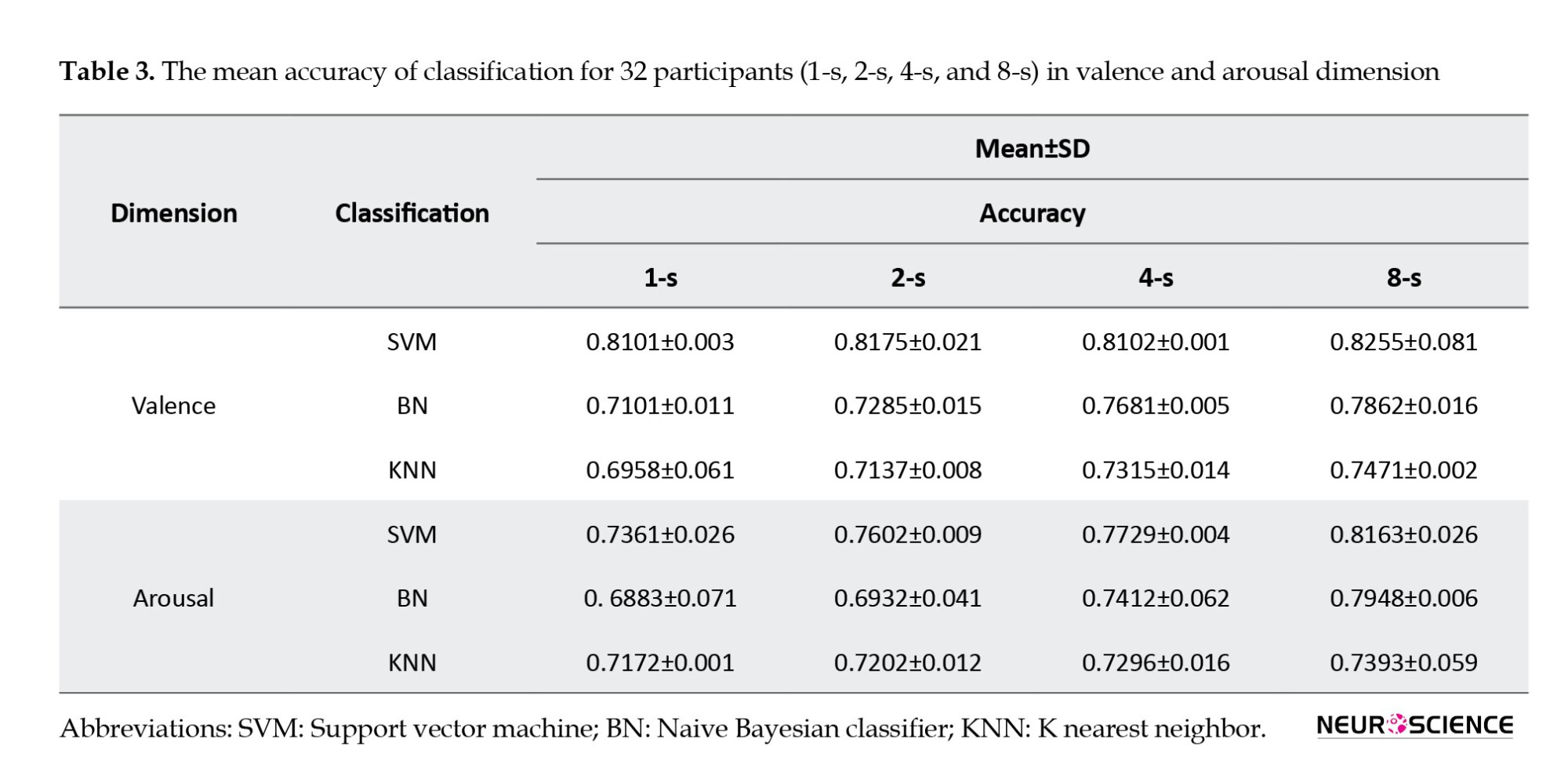

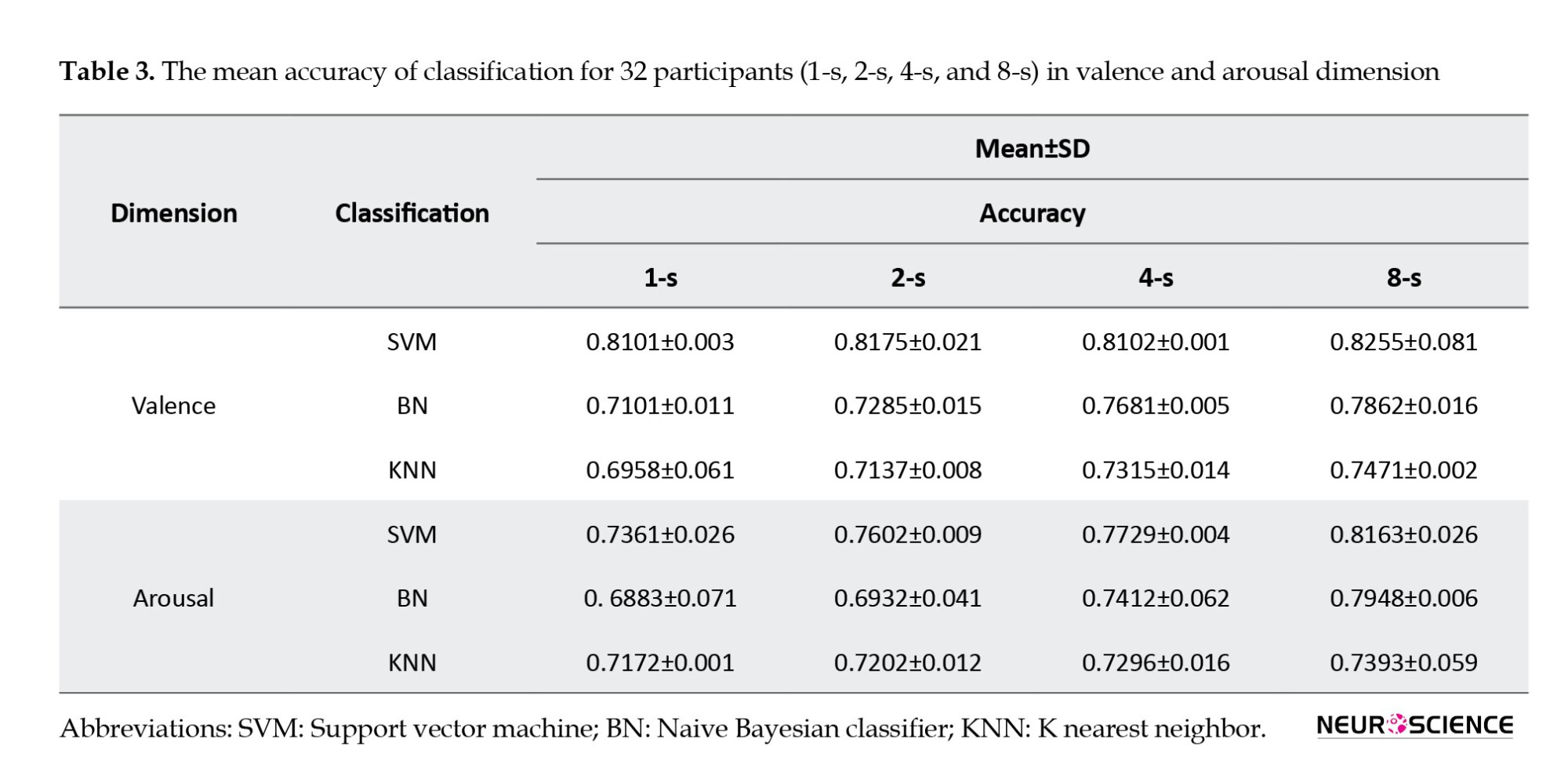

This section presents the results obtained from the network in terms of classification accuracy, sensitivity, specificity, and FPR. Windows of 1 s, 2 s, 4 s, and 8 s with 32 channels were considered for extracting features and training the SAEs. Classification accuracy was highest when the network was trained with 8-s windows, so the 8-s window was taken as the optimal duration (Table 3).

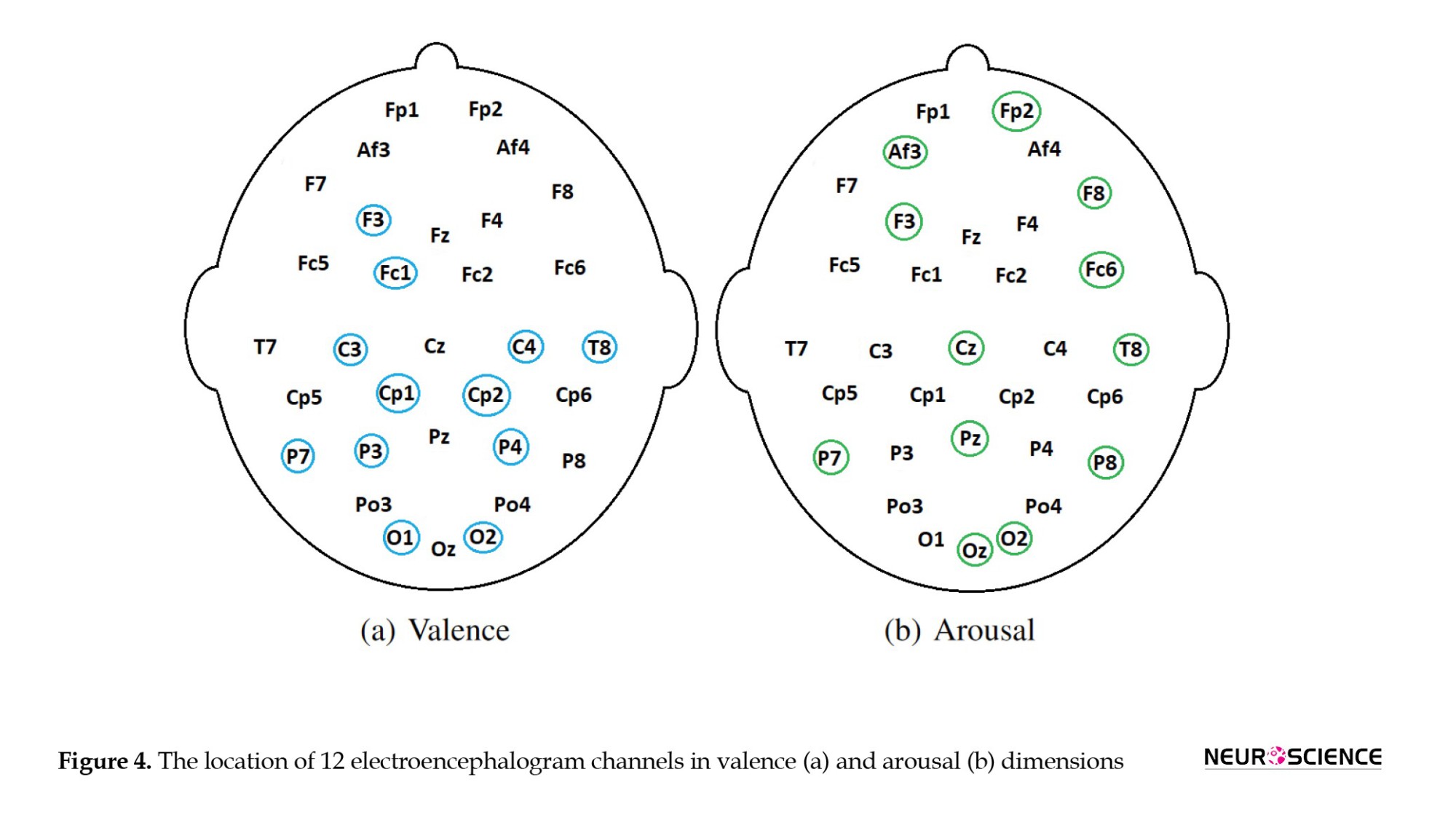

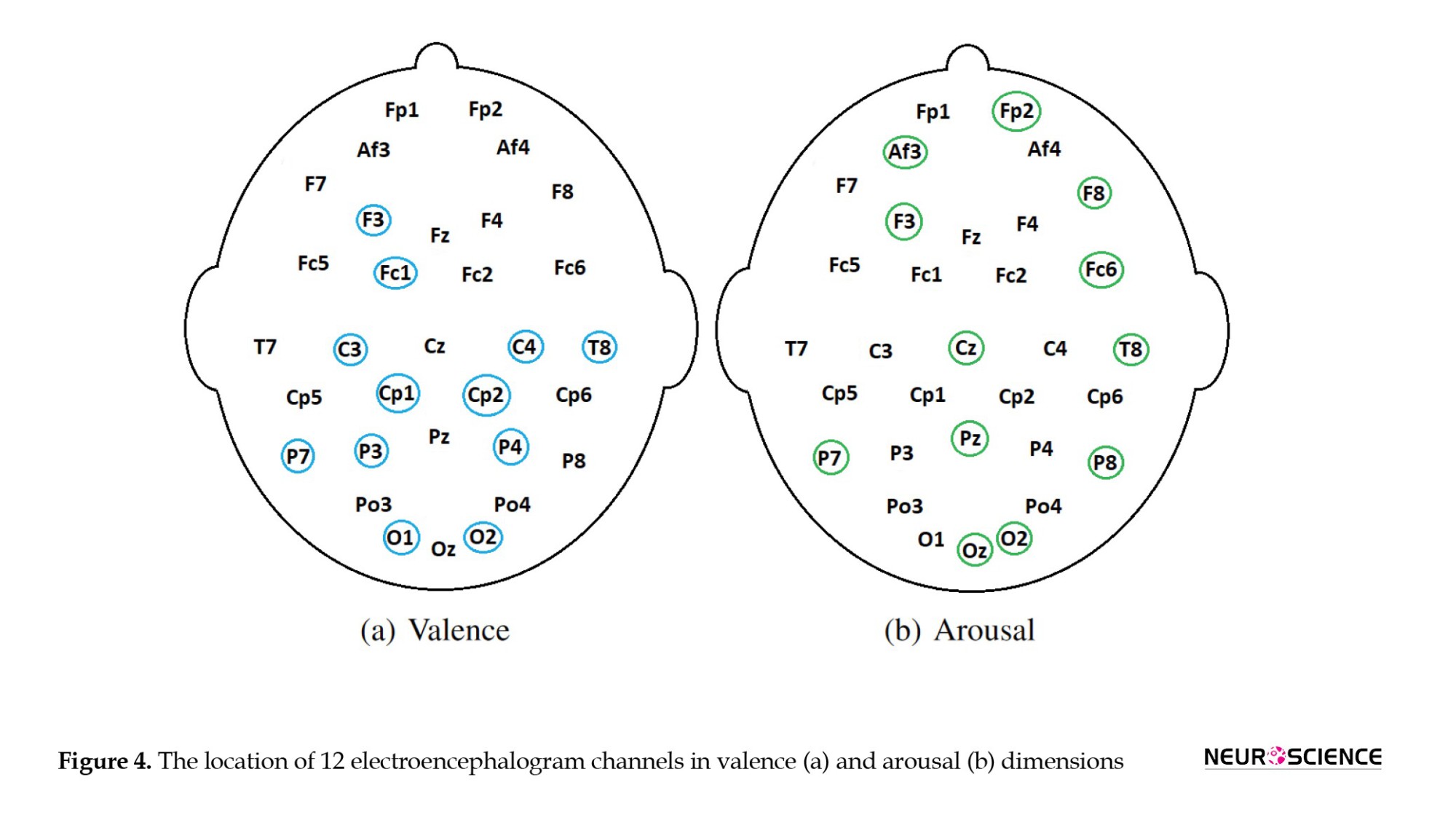

With the proposed method, 10 channels were removed in the first iteration: Fp1, Fp2, AF3, F8, F4, Fz, CP5, Pz, PO3, and PO4 in the valence dimension, and AF4, F7, FC1, C3, CP5, CP2, P3, P4, PO4, and O1 in the arousal dimension, leaving 22 significant channels. In the second iteration, 4 channels were removed (AF4, F7, FC6, and P8 in the valence dimension; Fp1, Fz, T7, and CP6 in the arousal dimension), leaving 18 channels. In the third iteration, 6 channels were removed (FC5, FC2, T7, Cz, CP6, and Oz in the valence dimension; F4, FC5, FC2, C4, CP1, and PO3 in the arousal dimension), leaving the 12 most important channels: F3, FC1, C3, C4, T8, CP1, CP2, P7, P3, P4, O1, and O2 in the valence dimension, and Fp2, AF3, F8, F3, FC6, Cz, T8, P7, Pz, P8, O2, and Oz in the arousal dimension. In the fourth iteration, removing any channel decreased the accuracy significantly (Table 4). The channel reduction process was therefore stopped at this stage, and these 12 channels per dimension were used for emotion classification. The SVM classifier obtained higher accuracy than the other classifiers. Its accuracy, sensitivity, specificity, and FPR were 75.7%, 89%, 76.33%, and 0.1475 in the valence dimension and 74.4%, 84%, 73.65%, and 0.1348 in the arousal dimension, respectively, using 8-s EEG samples with 12 channels.

The maximum and minimum accuracy values are higher in the valence dimension than in the arousal dimension, and the valence curve is steeper (Figure 3). Among the classifiers selected in this study, the SVM classifier reported the highest accuracy in both dimensions, arousal and valence. In the valence dimension, the SVM classification accuracy starts at 0.8255±0.081 and the algorithm stops at 0.7578±0.026; in the arousal dimension, it starts at 0.8163±0.026 and stops at 0.7445±0.026.

The locations of the EEG channels for the valence and arousal dimensions before and after channel reduction are shown in Figure 4. The two dimensions involve different combinations of EEG channels. In the valence dimension, channels F3, FC1, C3, C4, T8, CP1, CP2, P7, P3, P4, O1, and O2 remained; these are mostly located in the middle of the left and right hemispheres. In the arousal dimension, channels Fp2, AF3, F8, F3, FC6, Cz, T8, P7, Pz, P8, O2, and Oz remained, which involve the frontal and parietal lobes of the brain.

Table 5 compares the accuracy of the SVM, KNN, and BN classifiers and of feature extraction by principal component analysis and nonlinear principal component analysis, all with 8-s windows. The results show that, for 12 channels, feature extraction by the stacked autoencoder gives the highest accuracy compared with BN and KNN.

4. Discussion

This paper proposed an efficient emotion recognition method that classifies emotional states from EEG signals. A deep learning network was used to extract complex linear and nonlinear features from EEG data, and a channel reduction technique was applied so that emotion recognition uses the minimum number of EEG channels. The algorithm provides a non-invasive, easy-to-use method for recognizing emotional states. The SVM classifier reported the highest accuracy compared to the BN and KNN classifiers. EEG emotion recognition using the SAE feature extraction method achieved an accuracy of 75.7% for valence and 74.4% for arousal. SAE has the advantage of extracting low-level features from the input layer and high-level features in deep layers (Jose et al., 2021).

5. Conclusion

Using SAE networks, the EEG channels can be reduced from 32 to 12 with less than a 9% reduction in accuracy in both the valence and arousal dimensions. This reduces the number of channels and simplifies signal recording while preserving the important parameters of the EEG signal. The proposed emotion recognition method uses EEG signals from only 12 channels. Although channel selection algorithms are generally based on features extracted from the EEG signals, this approach reports the most efficient results among state-of-the-art emotion recognition methods.

Study Limitations

The limitations of this study include the selection of primary features, the detection of various cognitive disorders, and the separate investigation of each EEG feature category. In future studies, the method can be applied to classify other medical data, and the primary features can be extracted automatically by neural networks.

Ethical Considerations

Compliance with ethical guidelines

All ethical principles were considered in this article. The participants were informed of the purpose of the research and its implementation stages. They were also assured of the confidentiality of their information, were free to leave the study whenever they wished, and could receive the research results if desired. Written consent was obtained from the subjects. The principles of the Declaration of Helsinki were also observed.

Funding

The paper was extracted from the PhD dissertation of Elnaz Vafaei, approved by the Department of Biomedical Engineering, Faculty of Medical Sciences and Technologies, Science and Research Branch, Islamic Azad University, Tehran, Iran.

Authors' contributions

All authors equally contributed to preparing this article.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors are grateful for the support of the Faculty of Medical Sciences and Technologies, Islamic Azad University, Science and Research Branch.

References

Emotions are recognized as one of the most important parameters in daily human activities. The most important effect of this parameter is the creation of healthy relationships in social environments (Wei et al., 2020). Identifying emotion is the first step to discovering the effective human connection in the face of the environment, which has become one of the most up-to-date subjects to control the positive and negative states of human beings (Saleh Khajeh Hosseini et al., 2022). Emotions can be identified qualitatively by assessing facial expressions (Schirmer, 2017). The study of brain signals is a quantitative method in that brain-computer interface systems have successfully achieved a high level of classification of emotional states through machine learning applications.

Brain signals are produced by the central neuron system (Topic & Russo, 2021). These signals are performed non-invasively on the surface of the skull with measuring devices, such as electroencephalograms (EEG). An electroencephalograph is a device that measures the pulses produced in the center of the head (Li et al., 2018). It has a high temporal resolution that can reflect the strength and position of brain activity. The signals are measured by electrodes mounted on the scalp based on the 10-20 electrode system. A channel is obtained from the voltage difference between two electrodes, and the set of channels together creates the EEG recording. The EEG signal is recorded (Candra et al., 2017) by several channels that, depending on the accuracy of the measurement, the number of channels can be selected as 24, 32, 64, 128, and 256. Identification of emotions from EEG signals requires several important factors, including the features and number of channels that are used during the recording (Candra et al., 2017). As the number of channels increases, the accuracy of the measurement is enhanced; however, a large number of channels on the head can delay the signal recording process, which is inconvenient for both the physician and the patient. In addition, with increasing the number of channels, the costs of signal recording increase. Therefore, reducing the data record time and its costs would be possible by decreasing the number of channels and maintaining the quality of the record result. Jana and Mukherjee, depicted an efficient seizure prediction technique using a convolutional neural network with optimizing the EEG channels (Jana & Mukherjee, 2021).

Features that are extracted for emotion recognition are the most important steps in the EEG signal processing. These steps consist of EEG signal preprocessing, including artifacts of electromyography and electrooculography signals. Many scientists are interested in automated feature learning that deep learning neural networks provide acceptable results. Deep learning networks are one of the up-to-date topics in the field of machine learning in EEG signal analysis. Deep networks are an unsupervised and supervised learning method. These networks have provided acceptable results in reducing the input space of large databases, such as brain signal data (Yin et al., 2017). Stacked autoencoder networks (SAE) are one of the deep learning methods that can automatically extract complex nonlinear abstracted features from a signal. Jose et al. (2021) developed the structure of stacked autoencoders to extract low-level and high-level features in deep layers. These features are a good representation of the signal, which is considered a good indication to evaluate the importance of the presence or absence of channels (Candra et al., 2017; Yin et al., 2017). Therefore, SAE can be effective in reducing the number of channels based on feature fusion. For EEG channel reduction, Candra et al. applied the weave feature to classify emotion and achieved 76.8% accuracy for valence and 74.3% for arousal emotion (Candra et al., 2017).

In this study, the optimal features of EEG signals are extracted using stacked autoencoder networks. A deep learning method is proposed for emotion recognition for important feature extraction from EEG signals. The linear or nonlinear combination of temporal, frequency, and linear features is extracted with SAEs. A channel reduction method is also used to improve an emotion classification for classification in two dimensions arousal and valance. Accordingly, a combination of features by SAEs is proposed as an EEG-emotion feature to classify low/high states of valence and arousal dimensions. The evaluation determines the importance of each channel based on the characteristics obtained from the SAE networks. This approach can reduce the number of channels and time to prepare the process of recording and during signal recording, this proposed method is described in the next section.

This study consists of four sections which are explained below. In the second section, the structure of the autoencoder neural network is completely described. The third section, discusses methods for classifying EEG data based on standard EEG features related to emotion recognition according to two dimensions, arousal, and valance, as well as features extracted from the autoencoder network are discussed. Results and discussion sections are presented in sections 4 and 5 in which the results of the experiments are reported. Meanwhile, the results of the channel reduction algorithm on classification applying support vector machine (SVM) algorithm were discussed along with channel location that is related to valence and arousal dimensions.

2. Materials and Methods

This section of the paper has five main parts. In the first part, the database which is used in this study has been explained. Subsequently, EEG data processing, including pre-processing and processing, is mentioned. The third part describes the SAE network and then the technique for reducing the number of channels is stated. In the end, the performance evaluation criteria that can assess the performance of the proposed method are mentioned.

DEAP database

In this study, the DEAP physiological database has been used, which was collected by Koelstra et al. in 2012 to analyze emotion states. One of the recorded electrophysiological signals in the database is the EEG signal which consists of 32 electrodes according to the international 10–20 electrodes placement system. The EEG signal was recorded from 32 participants with a mean age of 28 years, 16 of whom were female. The data were not gender biased. Emotional stimuli, which induced 4 types of emotion for each subject, were done with 40 types of 1-min music videos. Next, the participants were asked to rate their emotions with emotional labels using the self-assessment Mankins questionnaire on valence and arousal dimensions. The selection of videos was based on 4 classes of emotion as follows: High arousal-high valence, high arousal-low valence, low arousal-high valence, and low arousal-low valence. The sampling frequency at the time of EEG signal recording was 256 Hz, which was then reduced to 128 Hz by the down-sampling method. A baseline 5-min signal was recorded for each participant, with a 3-second interval between each video to relieve the individual’s emotional state (Koelstra et al., 2012). The model of arousal and valence dimension is illustrated in Figure 1.

EEG data processing

The main objective of the present study is the classification of human emotions and emotional states. Artifacts and noises, including electromyography and electrooculography signals, are removed by the independent component analysis method (Ashtiyani et al., 2008). Based on previous studies in emotion recognition, EEG signal has three main feature categories that are categorized into time, frequency, and time-frequency features that are considered in this study (Chen et al., 2020). The most common features used in emotion recognition studies are power, mean, standard deviation, zero-crossing rate, entropy, fractal dimension, and correlation dimension which are shown in Table 1 (Wang & Wang, 2021; Yu & Wang, 2022). We used these features to train the SAEs with 1-s, 2-s, 4-s, and 8-s windows with 50% overlap (w). All features were normalized between zero and one. These features are the inputs of stacked autoencoders that are combined to represent the best-abstracted features. The sampling frequency was 128 Hz; therefore, the EEG signal consisted of 128 samples in one second. Considering “m” as the duration of the signal based on seconds, 10 features (based on Table 1), and 32 channels, the size of the input is equal to “n=(32×10×m×50%)/w,” where n is the dimension of the extracted features in the input.

Stacked autoencoder for feature extraction

The autoencoder networks are one of the deep learning methods in that structure that is symmetrical. There are three layers, including the input layer, hidden layer, and output layer that are named encoder and decoder parts. The output of the first encoder acts as the input of the second autoencoder network and this process will be continued to make a SAE. The features that are extracted from the DEAP database are the extension of the SAE. The network at the SAEs level uses unlabeled data based on an unsupervised approach (Chai et al., 2016). The flowchart of the proposed method that we applied is illustrated in Figure 2.

The last decoder is removed and the weights and biases are the final abstracted features that we need. Fine-tuning function when classifying emotions that set SAE parameters is the most important part of emotion classification. This stage is trained with labeled data to train all layers at one time (Yin et al., 2017) (Equations 1, 2, 3, and 4).

In Equation 1, x∈Rn is the vector of the input (feature selected from raw EEG). h∈Rp is the vector of the hidden layer, and P is the dimension of abstracted features. Meanwhile, σ shows the sigmoid function and W∈Rp×n is a weight matrix, b∈Rp is a bias vector that is shown in Equation 2. In Equation 3, x'∈Rn is the next layer that the dimension of x' is equal x. The weights of the hidden layer reconstruct the output or x'. The square error cost function in Equation 4, is E where the E is minimized when the input and the reconstructed input are the most similar. The weights and biases of the last hidden layer are the features extracted from the SAE network that are calculated with the fine-tuning method by minimizing the reconstruction loss.

Classification

The features obtained from the SAE networks are used to classify emotion classes. K nearest neighbor (KNN) classifier, naive Bayesian classifier (BN), and SVM classifiers are considered as these classifiers are widely used in the field of emotion recognition (Yu & Wang, 2022). An architecture of the presented model is illustrated in Figure 1, including the extraction of primary features from the raw data of EEG signal and, applying SAEs to obtain abstracted features and emotion classifiers from optimal features.

Channel reduction

Many studies use all EEG channels for emotion recognition. However, some studies indicate that not all channels are significant (Candra et al., 2017). Recording with all 32 channels is also uncomfortable for doctors and patients. Therefore, emotion recognition with a minimum number of channels has clear advantages, namely reduced computational time and improved time efficiency. A channel reduction method is thus required for the further development of emotion classification.

In the proposed method, the importance of each channel is evaluated individually, based on the accuracy of the classifier in the absence of that channel. First, the accuracy of emotion classification with all 32 channels is calculated. Then, each channel is ignored in turn, and the average accuracy of the network is recalculated with the remaining channels. If the average accuracy does not decrease, the ignored channel is considered unimportant and the method continues. Table 2 illustrates the channel reduction algorithm.
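The procedure described above can be sketched as an iterative backward elimination. This is a minimal sketch, not the algorithm of Table 2: the function `reduce_channels`, the callback `evaluate` (which would train and test the classifier on a channel subset), the `tolerance` parameter, and the toy scoring function are all hypothetical.

```python
def reduce_channels(channels, evaluate, tolerance=0.0):
    """Iteratively drop channels whose individual removal does not lower
    the accuracy by more than `tolerance`; stop when none can be dropped.

    `evaluate(subset)` is a hypothetical callback returning the average
    classification accuracy obtained with the given list of channels.
    """
    kept = list(channels)
    while len(kept) > 1:
        baseline = evaluate(kept)
        removable = [
            ch for ch in kept
            if evaluate([c for c in kept if c != ch]) >= baseline - tolerance
        ]
        if not removable:
            break                        # every remaining channel matters
        if len(removable) == len(kept):
            removable = removable[:-1]   # always keep at least one channel
        kept = [c for c in kept if c not in removable]
    return kept

# Toy stand-in for the classifier: accuracy grows with the number of
# "informative" channels present (purely illustrative, not real data).
informative = {"F3", "C4", "O1"}

def toy_accuracy(subset):
    return 0.5 + 0.1 * len(informative.intersection(subset))

remaining = reduce_channels(["Fp1", "F3", "C4", "Pz", "O1"], toy_accuracy)
print(remaining)
```

A nonzero `tolerance` would allow a small, accepted loss of accuracy in exchange for fewer channels; the value to use is a design choice that the text does not specify.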

Performance evaluation metrics

Several criteria are used to evaluate the performance of the proposed method. These criteria should properly reflect the effect of the presence or absence of each channel. Classification accuracy, sensitivity, specificity, and false-positive rate (FPR) are calculated using Equations 5, 6, 7, and 8, respectively, in which “TP” stands for true positive, “FP” for false positive, “FN” for false negative, and “TN” for true negative (Bajpai et al., 2021).
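The four criteria can be computed from the confusion-matrix counts as in the sketch below, which uses the standard definitions of these metrics. The function name and the example counts are illustrative assumptions and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "sensitivity": tp / (tp + fn),   # true-positive rate
        "specificity": tn / (tn + fp),   # true-negative rate
        "fpr": fp / (fp + tn),           # false-positive rate
    }

# Illustrative counts only
m = classification_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```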

3. Results

In this study, an emotion recognition method using EEG signals is proposed. The SAE network is one of the most practical deep learning techniques and is used here to extract and fuse the important features hidden in raw EEG signals for classification. Recording brain signals with a 32-channel EEG system is uncomfortable for doctors and patients. Thus, a channel reduction technique that maintains the quality of the recording and the main features of the brain signals is needed to reduce the number of channels for emotion recognition.

In this section, the results obtained from the network are presented in terms of classification accuracy, sensitivity, specificity, and FPR. Windows of 1 s, 2 s, 4 s, and 8 s with 32 channels are considered for extracting features and training the SAEs. The classification accuracy is highest when the network is trained with 8-s windows. Therefore, the 8-s window is taken as the optimal duration, as shown in Table 3.
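The effect of the window length on the number of training samples can be illustrated with a simple segmentation sketch. The sampling rate of 128 Hz, the 60-s trial length, and the use of non-overlapping windows are assumptions made for this example; the text does not state how the windows were cut.

```python
def segment(signal, fs, window_s):
    """Split a 1-D signal into non-overlapping windows of `window_s` seconds.

    Samples left over at the end that do not fill a whole window are dropped.
    """
    size = int(fs * window_s)
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]

fs = 128                          # assumed sampling rate in Hz
signal = list(range(60 * fs))     # placeholder for one 60-s single-channel trial

# Number of windows obtained for each candidate duration
counts = {w: len(segment(signal, fs, w)) for w in (1, 2, 4, 8)}
print(counts)
```

Longer windows give fewer samples per trial but more signal per sample, which is the trade-off behind comparing the four durations.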

In the first iteration of the proposed method, 10 channels were removed: Fp1, Fp2, AF3, F8, F4, Fz, CP5, Pz, PO3, and PO4 in the valence dimension, and AF4, F7, FC1, C3, CP5, CP2, P3, P4, PO4, and O1 in the arousal dimension. Hence, 22 significant channels remained. In the second iteration, 4 channels were removed (AF4, F7, FC6, and P8 in the valence dimension; Fp1, Fz, T7, and CP6 in the arousal dimension), leaving 18 channels. In the third iteration, 6 channels were removed (FC5, FC2, T7, Cz, CP6, and Oz in the valence dimension; F4, FC5, FC2, C4, CP1, and PO3 in the arousal dimension). The 12 remaining channels, considered the most important, are F3, FC1, C3, C4, T8, CP1, CP2, P7, P3, P4, O1, and O2 in the valence dimension and Fp2, AF3, F8, F3, FC6, Cz, T8, P7, Pz, P8, O2, and Oz in the arousal dimension. In the fourth iteration, removing any further channel significantly decreased the accuracy (Table 4). Therefore, the channel reduction process ended at this stage, and these 12 channels per dimension are used for emotion classification.

The SVM classifier obtained higher accuracy than the other classifiers. With 8-s EEG samples and the 12 retained channels, the SVM classification accuracy, sensitivity, specificity, and FPR are 75.7%, 89%, 76.33%, and 0.1475 in the valence dimension and 74.4%, 84%, 73.65%, and 0.1348 in the arousal dimension, respectively.

Both the maximum and the minimum accuracy are higher in the valence dimension than in the arousal dimension, and the valence curve is steeper (Figure 3). Among the classifiers selected in this study, the SVM classifier reported the highest accuracy in both the arousal and valence dimensions. In the valence dimension, the SVM accuracy starts at 0.8255±0.081 and the algorithm stops at an accuracy of 0.7578±0.026; in the arousal dimension, it starts at 0.8163±0.026 and stops at 0.7445±0.026.

The locations of the EEG channels before and after channel reduction in the valence and arousal dimensions are shown in Figure 4. The two dimensions involve different combinations of EEG channels. In the valence dimension, channels F3, FC1, C3, C4, T8, CP1, CP2, P7, P3, P4, O1, and O2 remain; these channels are mostly located in the middle of the left and right hemispheres. In the arousal dimension, channels Fp2, AF3, F8, F3, FC6, Cz, T8, P7, Pz, P8, O2, and Oz remain, which involve the frontal and parietal lobes of the brain.

The accuracies of the SVM, KNN, and BN classifiers and of the principal component analysis and nonlinear principal component analysis feature extraction methods, all with 8-s windows, are compared in Table 5. The results show that, with 12 channels, feature extraction by the stacked autoencoder yields the highest accuracy among the compared methods.

4. Discussion

This paper proposed an efficient EEG-based emotion recognition method that classifies emotional states. A deep learning network was used to extract complex linear and nonlinear features from EEG data, and a channel reduction technique was applied so that emotion recognition uses a minimum number of EEG channels. The algorithm provides a non-invasive, easy-to-use method for identifying emotional states. The SVM classifier reported the highest accuracy compared to the BN and KNN classifiers. EEG emotion recognition using the SAE feature selection method achieved an accuracy of 75.7% for valence and 74.4% for arousal. An advantage of the SAE is its ability to extract low-level features near the input layer and high-level features in the deeper layers (Jose et al., 2021).

5. Conclusion

By using SAE networks, the EEG channels can be reduced from 32 to 12 with less than a 9% reduction in accuracy in both the valence and arousal dimensions. This reduces the number of channels and simplifies signal recording while preserving the important parameters of the EEG signal. The proposed emotion recognition method uses EEG signals from only 12 channels. Although channel selection algorithms are generally based on features extracted from the EEG signals, this approach reports the most efficient results among the state-of-the-art emotion recognition methods.

Study Limitations

The limitations of this study include primary feature selection, detection of various cognitive disorders, and separate investigation of EEG feature categories. In future studies, this method can be used to classify other medical data. Moreover, the primary features can be extracted automatically by neural networks.

Ethical Considerations

Compliance with ethical guidelines

All ethical principles were considered in this article. The participants were informed of the purpose of the research and its implementation stages. They were also assured of the confidentiality of their information and were free to leave the study whenever they wished; if desired, the research results would be made available to them. Written consent was obtained from the subjects. The principles of the Helsinki Convention were also observed.

Funding

The paper was extracted from the PhD dissertation of Elnaz Vafaei, approved by the Department of Biomedical Engineering, Faculty of Medical Sciences and Technologies, Science and Research Branch, Islamic Azad University, Tehran, Iran.

Authors' contributions

All authors equally contributed to preparing this article.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors are grateful for the support of the Faculty of Medical Sciences and Technologies, Islamic Azad University, Science and Research Branch.

References

Ashtiyani, M., Asadi, S., & Moradi Birgani, P. (2008). ICA-Based EEG Classification Using Fuzzy C-mean Algorithm. Paper presented at: 2008 3rd International Conference on Information and Communication Technologies: From Theory to Applications, Damascus, Syria, 07-11 April 2008. [DOI:10.1109/ICTTA.2008.4530056]

Bajpai, R., Yuvaraj, R., & Prince, A. A. (2021). Automated EEG pathology detection based on different convolutional neural network models: Deep learning approach. Computers in Biology and Medicine, 133, 104434. [DOI:10.1016/j.compbiomed.2021.104434] [PMID]

Candra, H., Yuwono, M., Chai, R., Nguyen, H. T., & Su, S. (2017). EEG emotion recognition using reduced channel wavelet entropy and average wavelet coefficient features with normal Mutual Information method. Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2017, 463–466. [DOI:10.1109/EMBC.2017.8036862] [PMID]

Chai, X., Wang, Q., Zhao, Y., Liu, X., Bai, O., & Li, Y. (2016). Unsupervised domain adaptation techniques based on auto-encoder for non-stationary EEG-based emotion recognition. Computers in Biology and Medicine, 79, 205–214. [DOI:10.1016/j.compbiomed.2016.10.019] [PMID]

Chen, G., Zhang, X., Sun, Y., & Zhang, J. (2020). Emotion feature analysis and recognition based on reconstructed EEG sources. IEEE Access, 8, 11907–11916. [DOI:10.1109/ACCESS.2020.2966144]

Jana, R., & Mukherjee, I. (2021). Deep learning based efficient epileptic seizure prediction with EEG channel optimization. Biomedical Signal Processing and Control, 68, 102767. [DOI:10.1016/j.bspc.2021.102767]

Jose, J. P., Sundaram, M., & Jaffino, G. (2021). Adaptive rag-bull rider: A modified self-adaptive optimization algorithm for epileptic seizure detection with deep stacked autoencoder using electroencephalogram. Biomedical Signal Processing and Control, 64, 102322. [DOI:10.1016/j.bspc.2020.102322]

Koelstra, S., Muhl, C., Soleymani, M., Lee, J. S., Yazdani, A., Ebrahimi, T., et al. (2012). DEAP: A database for emotion analysis; using physiological signals. IEEE Transactions on Affective Computing, 3(1), 18–31. [DOI:10.1109/T-AFFC.2011.15]

Li, J., Zhang, Z., & He, H. (2018). Hierarchical Convolutional Neural Networks for EEG-based emotion recognition. Cognitive Computation, 10, 368–380. [Link]

Khajeh Hosseini, M. S., Pourmir Firoozabadi, M., Badie, K., & Azad Fallah, P. (2023). Electroencephalograph emotion classification using a novel adaptive ensemble classifier considering personality traits. Basic and Clinical Neuroscience, 14(5), 687–700. [PMID]

Schirmer, A., & Adolphs, R. (2017). Emotion perception from face, voice, and touch: Comparisons and convergence. Trends in Cognitive Sciences, 21(3), 216–228. [DOI:10.1016/j.tics.2017.01.001] [PMID] [PMCID]

Topic, A., & Russo, M. (2021). Emotion recognition based on EEG feature maps through deep learning network. Engineering Science and Technology, an International Journal, 24(6), 1442–1454. [DOI:10.1016/j.jestch.2021.03.012]

Wang, J., & Wang, M. (2021). Review of the emotional feature extraction and classification using EEG signals. Cognitive Robotics, 1, 29–40. [DOI:10.1016/j.cogr.2021.04.001]

Wei, C., Chen, L. L., Song, Z. Z., Lou, X. G., & Li, D. D. (2020). EEG-based emotion recognition using simple recurrent units network and ensemble learning. Biomedical Signal Processing and Control, 58, 101756. [DOI:10.1016/j.bspc.2019.101756]

Type of Study: Original | Subject: Cognitive Neuroscience

Received: 2023/01/8 | Accepted: 2023/02/20 | Published: 2024/05/1

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.