Volume 14, Issue 5 (September & October 2023)

BCN 2023, 14(5): 687-700

Khajeh Hosseini M S, Firoozabadi M P, Badie K, Azad Fallah P. Electroencephalograph Emotion Classification Using a Novel Adaptive Ensemble Classifier Considering Personality Traits. BCN 2023; 14 (5) :687-700

URL: http://bcn.iums.ac.ir/article-1-2360-en.html


Mohammad Saleh Khajeh Hosseini1, Mohammad Pourmir Firoozabadi *2, Kambiz Badie3, Parviz Azad Fallah4

1- Department of Biomedical Engineering, Faculty of Medical Sciences and Technologies, Science and Research Branch, Islamic Azad University, Tehran, Iran.

2- Department of Medical Physics, Faculty of Medicine, Tarbiat Modares University, Tehran, Iran.

3- Department of Content & E-Services Research, Faculty of IT Research, University of Tehran, Tehran, Iran.

4- Department of Psychology, Faculty of Humanities, Tarbiat Modares University, Tehran, Iran.


1. Introduction

Emotions play a critical role in human interactions; they are mental states associated with a wide variety of behaviors and feelings. Because emotions are affected by many subject-dependent factors, their study is one of the most complicated research fields in psychology. One topic of particular interest is human-machine (HM) interaction (Alarcão, 2017): an emotion-enabled HM interface can adapt to the user's feelings and respond accordingly. Emotions are reflected in facial expressions, voice intonation, body language, physiological signals, and other channels (Craik, 2019; Einalou, 2017), and several physiological signals can be used to measure them. The electroencephalogram (EEG), a time-series recording of the brain's electrical activity, has been used in many studies of emotion because it offers high temporal resolution, low cost, and a high correlation with emotional states (As, 2021; Sammler, 2007). In a two-dimensional model, emotions are described by two dimensions, valence and arousal (VA). Human emotions can be inferred by decoding facial expressions, changes in speech and behavior, and the neurophysiological signals that accompany emotional changes; integrating these cues with the individual's emotional state can improve HM interaction (Tyng et al., 2017; Wu, 2020; Seifi, 2018). In the study of human emotional states, emotions can be classified into several discrete states according to their VA levels. The valence and arousal dimensions form the two-dimensional core of affective states (Hanjalic & Xu, 2005), and multiple emotional states can be defined systematically within this space. In addition, emotional changes are related to the activity of the limbic system, a part of the brain that controls external behaviors and internal cognitive patterns. 
Zhang and Lee linked the valence dimension to the activation level of the anterior parietal cortex and the arousal dimension to the right supramarginal gyrus (Zhang & Lee, 2013). These relationships suggest that emotional cues can be extracted from neurophysiological signals, which makes neuroimaging techniques necessary. Many studies show that EEG has become an effective tool for affective computing owing to its high temporal resolution and reliable repeatability (Yoon & Chung, 2013; Eslamieh, 2018). Thanks to advances in biomedical recording equipment, EEG, as a non-invasive method, can be easily measured and recorded with portable and wireless sensors.

Time, frequency, and spatial features can be accurately extracted from filtered EEG signals, and in many studies these features have proven suitable for distinguishing human emotional states (Asa, 2021). These features can be used as training data for many classifiers, including deep learning networks (Brunner et al., 2011). Emotion classification studies fall into two groups: offline classification and online classification. In a recent study, Atkinson and Campos (2016) showed that a hybrid EEG emotion classifier combining feature selection with a support vector machine (SVM) can improve emotion recognition, reporting accuracies of 73.14% in the valence dimension and 73.06% in the arousal dimension. Verma et al. (2011) used the discrete wavelet transform to extract features for emotion recognition; these features were classified with SVM and k-nearest neighbor (KNN) classifiers, and the highest reported accuracy was 81.45%. Yin et al. (2016) developed a method for classifying emotions based on a deep learning model built from stacked autoencoder networks (Fürbass, 2020). They reported acceptable results, with highest accuracies of 84.18% for arousal and 83.04% for valence.

Mehmood and Lee (2015) extracted brain signal features based on the late positive potential and classified them with SVM and KNN classifiers to study emotions. Jirayucharoensak et al. (2014) proposed a new approach to EEG-based emotion recognition using a stacked autoencoder network; to improve the stationarity of the EEG features, they applied principal component analysis (PCA). SVM and naive Bayes classifiers were also used to investigate the valence and arousal dimensions. Recent studies thus use machine learning to detect emotions from features extracted from EEG signals. However, these studies have paid less attention to the online evaluation of classifier designs: a validation method that is convenient and reliable in offline mode may not be applicable in an online or real-time process.

Tyng et al. (2017) applied an autoencoder to features extracted from the EEG signal and reported results for an online emotion classifier: the emotion EEG dataset (SEED) was divided into non-overlapping training and test sets, and the average accuracy reached 77.88%. Wang et al. (2022) designed an emotion classifier using a hierarchical Bayesian model and reported acceptable results. Yin et al. (2017) developed an online emotion classifier that derives stable features from the EEG signal, and Gao et al. (2020) searched for common patterns in EEG signal features with a reliable classifier. When only a limited number of participants is recorded, the data may give the researcher incorrect information. Combining online and static methods can also make classification more accurate, for both generic and subject-specific models, which makes the feature extraction model more efficient. Moreover, the EEG signal is a time series: its information is regulated by the body's physiological systems, and the importance of features in the central nervous system is determined by inherent dynamics.

In this study, we present an adaptive, online classification method to enhance emotion recognition that can be trained for each participant (Greco, 2017). This classifier can be a suitable alternative to generic classification, especially when the number of participants is limited. The method builds on Guyon et al. (2002) and Ashtiyani et al. (2008) and uses the recursive feature elimination (RFE) algorithm, which ranks all features by their effect on the classification margin of a binary SVM (Shu et al., 2018; Khordechi, 2008). We generalize RFE to dynamic RFE (D-RFE), which selects the optimal EEG signal features by analyzing data and features both in real time and over their historical trend.
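The RFE idea described above can be sketched in plain Python. This is a minimal illustration of SVM-based RFE in the spirit of Guyon et al. (2002), not the authors' D-RFE: the Pegasos-style subgradient solver `train_linear_svm`, the helper `svm_rfe`, the regularization constant, and the synthetic data are all assumptions made for the example. In each round the linear SVM is retrained on the surviving features, and the feature with the smallest squared weight (the smallest contribution to the margin) is removed.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Pegasos-style subgradient descent for a linear SVM without a bias term.

    Assumption for this sketch: X is a list of roughly zero-centred feature
    vectors and y a list of labels in {-1, +1}. Returns the weight vector.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    order = list(range(len(X)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # L2 shrinkage
            if margin < 1.0:  # hinge loss active: step toward the sample
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def svm_rfe(X, y, n_keep=1):
    """Recursive feature elimination driven by linear-SVM weights.

    Each round retrains on the surviving features and drops the one with the
    smallest squared weight. Returns (kept indices, indices in removal order).
    """
    surviving = list(range(len(X[0])))
    removed = []
    while len(surviving) > n_keep:
        w = train_linear_svm([[row[j] for j in surviving] for row in X], y)
        weakest = min(range(len(surviving)), key=lambda k: w[k] ** 2)
        removed.append(surviving.pop(weakest))
    return surviving, removed


# Synthetic check (hypothetical data): feature 0 carries the label,
# features 1 and 2 are pure noise.
rng = random.Random(1)
X, y = [], []
for _ in range(100):
    label = rng.choice([-1, 1])
    X.append([3.0 * label + rng.gauss(0, 0.3), rng.gauss(0, 1), rng.gauss(0, 1)])
    y.append(label)

kept, removed = svm_rfe(X, y, n_keep=1)
print("kept:", kept, "removed (in order):", removed)
```

Retraining after every elimination is what distinguishes RFE from ranking all features once; it lets the remaining weights readjust when a correlated feature disappears.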

The paper is organized as follows. Section 2 introduces the EEG signal database and the preprocessing applied to it and describes the feature extraction methods in detail. Section 3 presents the proposed classification algorithm. Section 4 reports the results obtained with the algorithm and its parameters, as well as the effect of feature selection on classifier performance, and compares the proposed method with conventional methods. Finally, Section 5 summarizes the performance of the proposed method, its limitations, and its advantages.

2. Materials and Methods

Eysenck personality questionnaire (EPQ)

As depicted in Figure 1, the Eysenck personality questionnaire (EPQ) is based primarily on two biologically independent dimensions of personality, N and E, plus a third dimension, P.

N- Neuroticism/Stability: Neuroticism represents the excitability threshold of the nervous system. Neurotic or unstable people are unable to control their emotional reactions, whereas emotionally stable people can control their emotions.

E- Extraversion/Introversion: Extraversion is characterized by high positive affect. According to Eysenck's arousal theory, extroverts are chronically under-aroused and need external stimulation to raise their arousal, whereas introverts are chronically over-aroused and need quiet to lower it.

P- Psychoticism/Socialisation: Psychoticism is associated with a liability to psychotic disorders.

L- Lie scale: Measures the tendency of respondents to lie in order to control their scores.

Because the dimensions of the Eysenck questionnaire are continuous, four personality categories were defined from the recorded scores:

Introverts: Score lower than 11 on the extraversion dimension.

Extroverts: Score higher than 12 on the extraversion dimension.

Stables: Score lower than 15 on the neuroticism dimension.

Unstables: Score higher than 15 on the neuroticism dimension.
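The four categories can be expressed as a small lookup. The function name `eysenck_categories` is hypothetical; the thresholds are taken from the text, and because the text leaves extraversion scores from 11 to 12 and a neuroticism score of exactly 15 unassigned, the sketch returns `None` for those cases rather than guessing a label.

```python
def eysenck_categories(e_score, n_score):
    """Return (extraversion category, neuroticism category) for EPQ scores.

    Thresholds follow the text. Scores the text leaves unassigned
    (E from 11 to 12 inclusive, N of exactly 15) map to None.
    """
    if e_score < 11:
        e_cat = "introvert"
    elif e_score > 12:
        e_cat = "extrovert"
    else:
        e_cat = None  # boundary band not covered by the definitions
    if n_score < 15:
        n_cat = "stable"
    elif n_score > 15:
        n_cat = "unstable"
    else:
        n_cat = None  # boundary value not covered by the definitions
    return e_cat, n_cat


print(eysenck_categories(8, 19))  # ('introvert', 'unstable')
```

Under these definitions, an "unstable-introvert" participant is simply one for whom the function returns `('introvert', 'unstable')`.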

Subjects

A total of 270 healthy volunteers aged 19–30 years (Mean±SD 25.01±3.13 years) participated in this study. Three groups of volunteers were selected based on their highest scores on the Eysenck questionnaire; each group included 12 healthy men and women. The unstable-introvert group, which tends toward depression, was aged 17–28 years (Mean±SD 25.0±3.11 years); the unstable-extrovert group, which tends toward mania, was aged 18–29 years (Mean±SD 25.21±3.07 years); and the normal group was aged 17–29 years (Mean±SD 26.32±3.12 years). Table 1 presents the results of screening participants with the EPQ. Of the population, 48% were men and 52% were women.

Stimuli

Emotions can be mapped onto the arousal and valence (AV) dimensions. To maximize the differentiation of induced emotions in AV space, three basic emotional states, sadness, happiness, and neutral, were selected; these are consistent with the participant groups. Among the several types of emotional stimuli, such as music, text, and voice, we chose pictures and movie clips because of their desirable properties. Pictures were selected from the Geneva Affective Picture Database with the maximum AV scores for the target emotions. Forty individuals who did not take part in the final trial rated the movie clips with the Positive and Negative Affect Schedule (PANAS), and the 12 of 36 movie clips with the highest PANAS ratings were selected. The chosen pictures and movie clips were categorized as sadness, happiness, and neutral.

Experimental protocol

Participants completed the informed consent form and the EPQ and then sat in a resting chair in front of a monitor in a quiet room. EEG electrodes were placed at frontal positions (Fp1, Fp2, F3, F4), and the impedance of each electrode was kept below 5 kΩ. To obtain good-quality data, the subjects were asked to control their movements during the experiment. EEG was recorded with a Thought Technology ProComp Infiniti system at a sampling rate of 256 Hz, using National Instruments LabVIEW 2019 as the software platform. The EEG signal was first recorded for 2 minutes with eyes open and with eyes closed; this recording was used to calculate the resting-state signal features in the frontal region. All participants then completed the three types of emotion induction trials in random order. Each trial consisted of 30 pictures followed by a movie clip. Participants were asked to mark the peak of the induced emotion by pressing the space key on the computer keyboard. At the end of the trials, participants were asked to complete the EPQ. Figure 2 shows the stimulation procedure.

Signal processing

Recorded EEG is contaminated by various noise sources and artifacts. The independent component analysis (ICA) algorithm extracts statistically independent components from a mixture of sources, and adaptive mixture ICA (AMICA) computes the maximum likelihood estimate for a mixture model of independent components. Because AMICA has shown successful results on EMG artifacts, it was chosen among the ICA-based denoising methods to remove EMG and blink artifacts. In this project, the recorded EEG was manually checked for EMG noise and motion artifacts, and AMICA was applied to each noise-affected segment of the signal. A simple threshold filter was applied to remove blink artifacts.

Feature extraction is one of the essential success factors in classification problems. This study uses several emotion-specific feature types: wavelet, nonlinear dynamical analysis, and power spectrum features. All features were extracted with a 2-s Hamming window with 50% overlap.
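As a sketch of the windowing step, assuming the 256 Hz sampling rate reported in the experimental protocol, a 2-s window spans 512 samples and 50% overlap gives a 256-sample hop. The helper names `hamming_window` and `sliding_windows` are hypothetical, and dropping trailing samples that do not fill a whole window is an assumption.

```python
import math


def hamming_window(n):
    """Symmetric Hamming window of length n."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def sliding_windows(signal, fs=256, win_sec=2.0, overlap=0.5):
    """Split a 1-D signal into Hamming-weighted windows with the given overlap.

    With fs=256 Hz, a 2-s window holds 512 samples and 50% overlap gives a
    256-sample hop. Trailing samples that cannot fill a window are dropped.
    """
    size = int(win_sec * fs)
    hop = int(size * (1 - overlap))
    window = hamming_window(size)
    return [
        [s * w for s, w in zip(signal[start:start + size], window)]
        for start in range(0, len(signal) - size + 1, hop)
    ]


# Usage: 8 s of a synthetic channel gives (2048 - 512) / 256 + 1 = 7 windows.
frames = sliding_windows([1.0] * 2048)
print(len(frames), len(frames[0]))  # 7 512
```

Each feature described below would then be computed once per window, giving one feature value every second.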

Wavelet transform

The wavelet transform is one of the best time-frequency analysis methods in EEG signal processing [16]. It decomposes a signal onto a family of wavelet functions, and the correlation between each wavelet function and the signal forms the wavelet coefficients. The wavelet coefficients therefore measure the correlation between the wavelet function and the EEG signal at a given time and resolution level (frequency band). In this study, we applied the fourth-order Daubechies wavelet function because of its favorable time-frequency properties for EEG signals. The resolution levels correspond approximately to the standard EEG frequency bands.

Relative wavelet energy was computed for each level by Equation 1:

$$P_l = \frac{E_l}{E_t} \qquad (1)$$

where $E_l$ is the energy at level $l$, computed as the sum of the squared wavelet coefficients at that level, and $E_t$ is the total energy, computed as the sum of the energies at all levels.

Then the wavelet entropy was calculated by Equation 2:

$$WE = -\sum_{l} P_l \ln P_l \qquad (2)$$

where $P_l$ is the relative energy at level $l$.

Wavelet entropy and relative wavelet energy were used as time-frequency domain features.
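Given the coefficients of each decomposition level, Equation 1 and the wavelet entropy can be computed as below. This sketch assumes the coefficients are already available (for example from a db4 decomposition such as PyWavelets' `pywt.wavedec`), that level energy is the sum of squared coefficients, and that Equation 2 is the standard Shannon form WE = -Σ P_l ln P_l; the function names are illustrative.

```python
import math


def relative_wavelet_energy(coeffs_per_level):
    """Equation 1: P_l = E_l / E_t, with E_l the sum of squared coefficients at level l."""
    energies = [sum(c * c for c in level) for level in coeffs_per_level]
    total = sum(energies)
    return [e / total for e in energies]


def wavelet_entropy(rel_energies):
    """Shannon wavelet entropy WE = -sum_l P_l ln P_l, treating 0 * ln 0 as 0."""
    return -sum(p * math.log(p) for p in rel_energies if p > 0)


# Usage with illustrative coefficients for three levels (not data from the study).
levels = [[0.5, -0.5], [1.0, 2.0, -1.0], [0.1, 0.1]]
p = relative_wavelet_energy(levels)
print(p, wavelet_entropy(p))
```

The entropy is 0 when all energy sits in one level and reaches ln(L) when the energy is spread evenly over L levels, so it summarizes how concentrated the signal is across frequency bands.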

Power spectrum

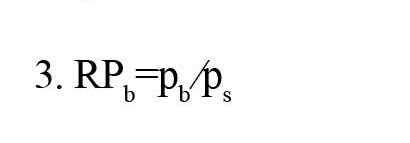

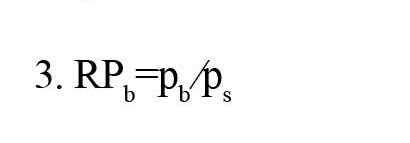

Power spectrum features can be used to investigate the dynamics of EEG signals; in particular, the relative power [17] of each EEG sub-band can be used to monitor the dynamics of emotional changes. To calculate the power spectral density, the EEG signal was divided into 256-sample epochs with a Hamming window, each epoch was zero-padded to 512 samples, and a 512-point Fourier transform was applied. The relative power was calculated for the theta (4–7 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (31–50 Hz) sub-bands using Equation 3:

$$RP_b = \frac{P_b}{P_s} \qquad (3)$$

where $RP_b$ is the relative power in frequency band $b$, $P_b$ is the power in band $b$, and $P_s$ is the total signal power.
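The PSD and relative-power computation can be sketched in dependency-free Python, reading relative power as band power divided by total signal power. Assumptions: a direct DFT instead of an FFT library (slow but transparent), a symmetric Hamming window, half-open band edges [low, high) so the shared 13 Hz edge is counted once, and total power taken over all one-sided bins from 0 Hz to Nyquist. The function names and the `BANDS` table are hypothetical.

```python
import cmath
import math

# Sub-bands from the text; edges treated as half-open [low, high) (an assumption).
BANDS = {"theta": (4, 7), "alpha": (8, 13), "beta": (13, 30), "gamma": (31, 50)}


def hamming(n):
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def periodogram(epoch, fs=256, nfft=512):
    """One-sided power spectrum of a Hamming-windowed epoch zero-padded to nfft points.

    Uses a direct DFT (O(nfft^2)) to stay dependency-free; an FFT gives the
    same result faster.
    """
    x = [s * w for s, w in zip(epoch, hamming(len(epoch)))]
    x += [0.0] * (nfft - len(x))  # zero-padding, e.g. 256 -> 512 samples
    freqs, power = [], []
    for k in range(nfft // 2 + 1):  # bins from 0 Hz up to Nyquist
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / nfft) for t in range(nfft))
        freqs.append(k * fs / nfft)
        power.append(abs(coeff) ** 2)
    return freqs, power


def relative_band_power(epoch, fs=256, nfft=512):
    """RP_b = P_b / P_s: power in each band over the total one-sided power."""
    freqs, power = periodogram(epoch, fs, nfft)
    total = sum(power)
    return {
        name: sum(p for f, p in zip(freqs, power) if lo <= f < hi) / total
        for name, (lo, hi) in BANDS.items()
    }


# Usage: a 1-s, 10 Hz sine sampled at 256 Hz should concentrate its power in alpha.
fs = 256
epoch = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
print(relative_band_power(epoch, fs))
```

Zero-padding to 512 points halves the bin spacing to 0.5 Hz, which interpolates the spectrum more finely but does not add true frequency resolution beyond what the 1-s epoch provides.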

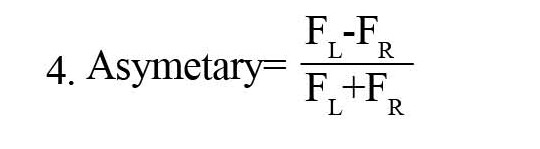

Asymmetry features

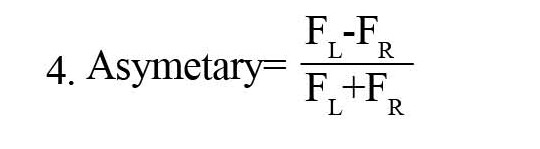

As reported in the literature, asymmetric activity in frontal EEG can be used to measure affective tendency. Hence, for each of the features above, the asymmetry between the left and right hemispheres was calculated with Equation 4:

where $F_L$ and $F_R$ are the feature vectors of the left and right hemispheres, respectively.

Concept drift in feature space

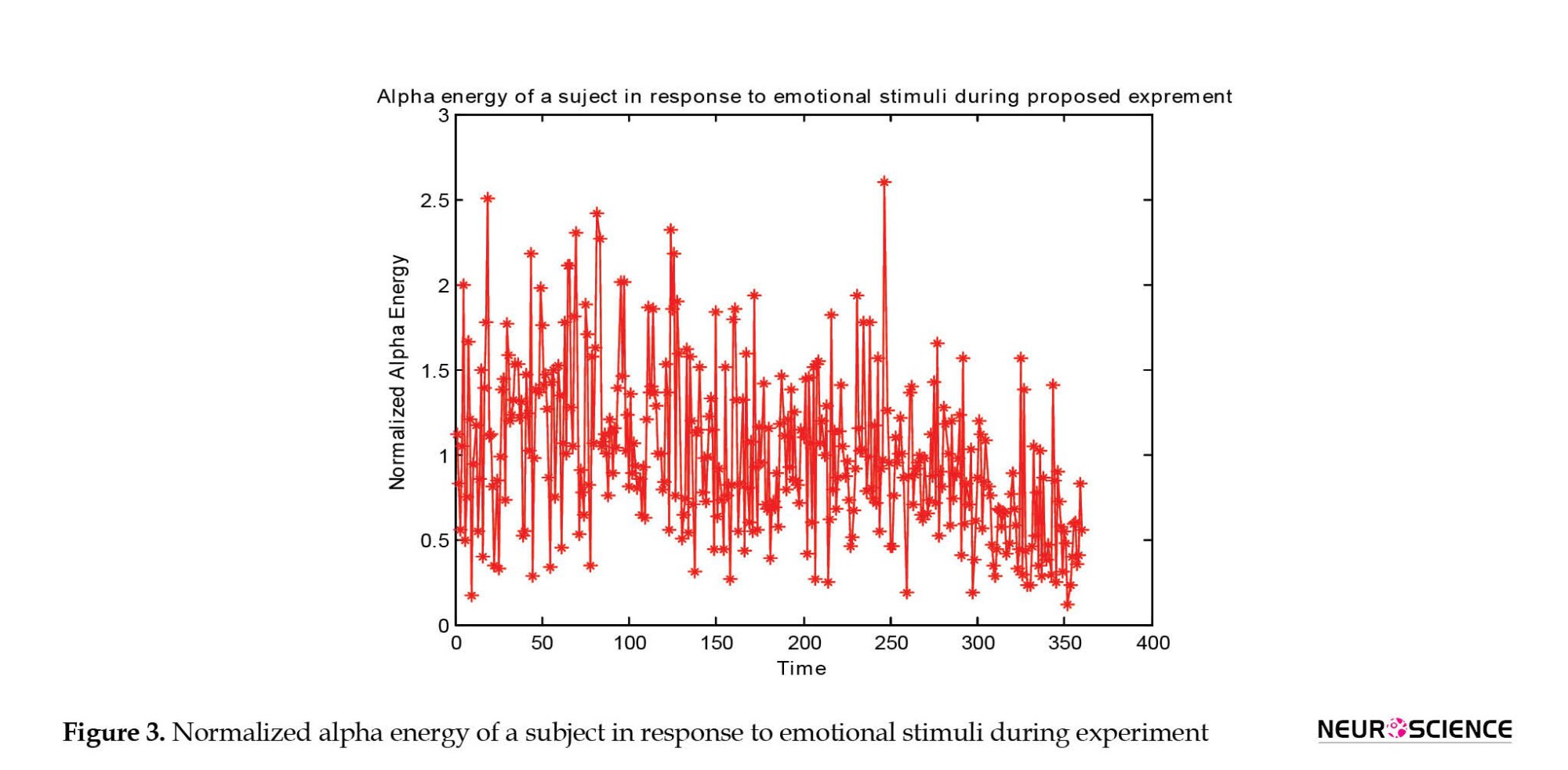

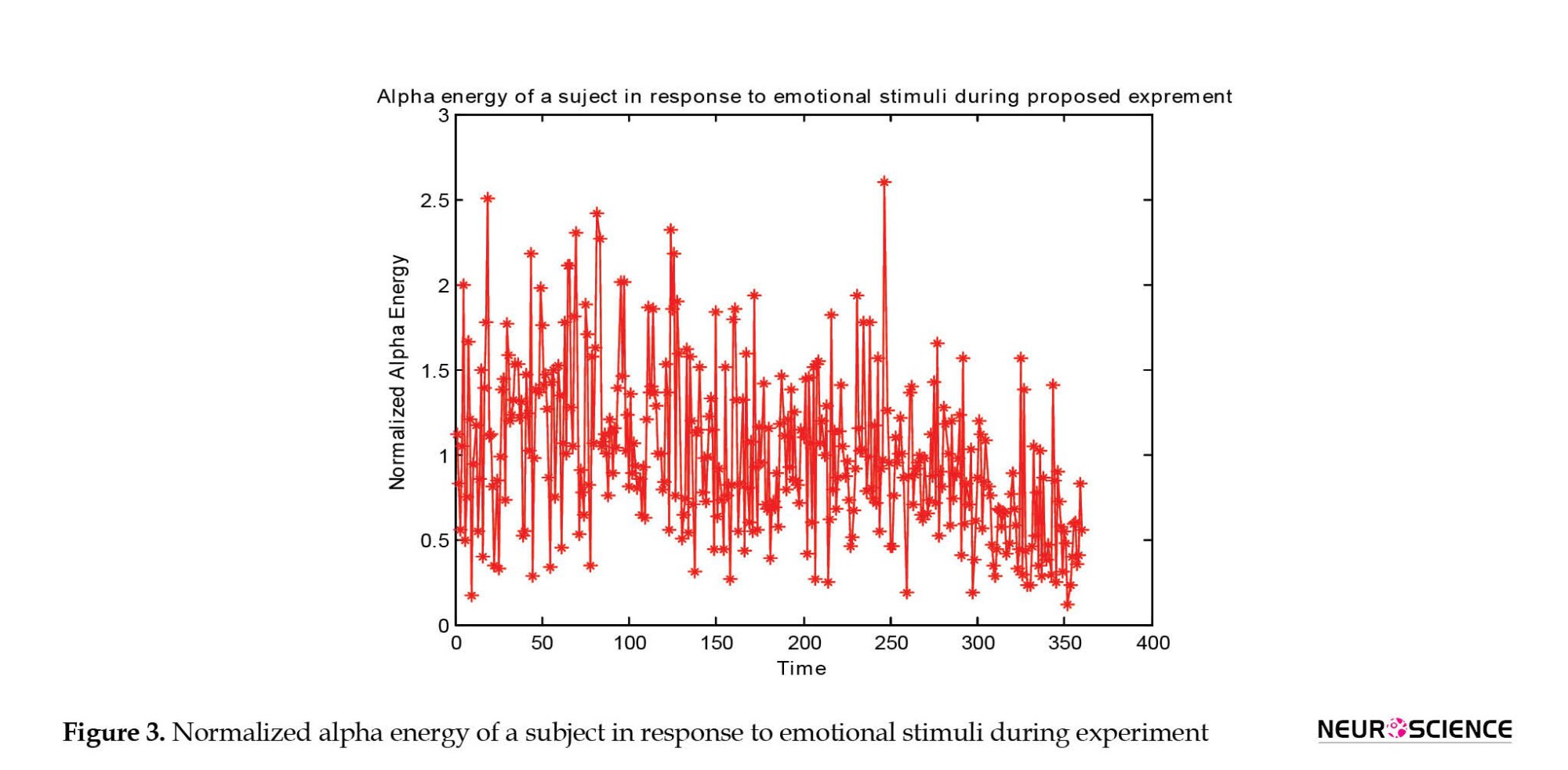

Several studies have shown that emotions are time-varying and affected by external and internal factors. This is problematic for classification because predictions become less accurate over time; given the expected change in the data, such problems are traditionally treated as online learning problems. Dynamic or online algorithms can track time-varying behavior and are more robust in dynamically changing or non-stationary environments. Figure 3 shows the alpha energy extracted from one subject during the experiment; as can be seen, the extracted feature is time-varying (Asghar, 2019). In the next section, we propose an adaptive classifier that learns this concept drift during the experiment by using supervised techniques designed to take conceptual changes into account.

3. Adaptive ensemble classification

This section describes the proposed algorithm. It simultaneously provides the following properties, which have not been considered together in previous studies of emotion classification: 1) learning is online for each group, whereas most existing methods are trained offline and do not account for behavioral changes in the data; 2) the data-driven algorithm shows higher accuracy and greater compatibility than group-level (functional) studies; 3) classifier weights are adapted dynamically during training according to classifier accuracy (i); 4) classifier parameters are adaptive (ii), with faster adaptation used as a criterion for the classifier; 5) the training stage is dynamic (iii): new classifiers are added to the ensemble, and classifiers that no longer contribute are removed; 6) the ensemble is pruned by removing the classifier with the lowest accuracy, so that previously accurate classifiers are retained, which allows training on unusual data; and 7) excessive control can affect the evaluation of the model in the training phase. The aim is to fine-tune the classifiers while preserving network stability; fine-tuning is performed by combining the outputs of the classifiers and adjusting them adaptively.

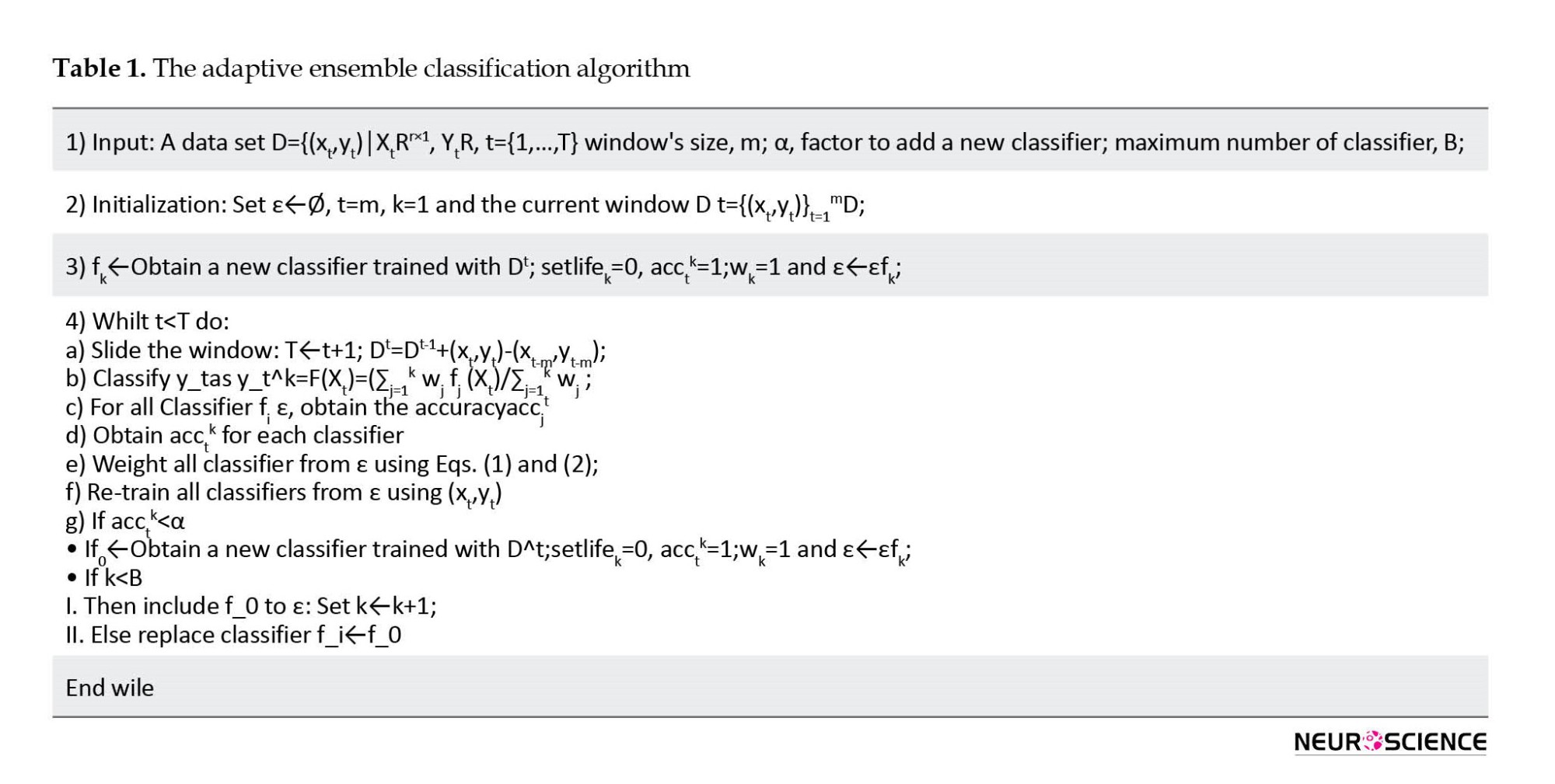

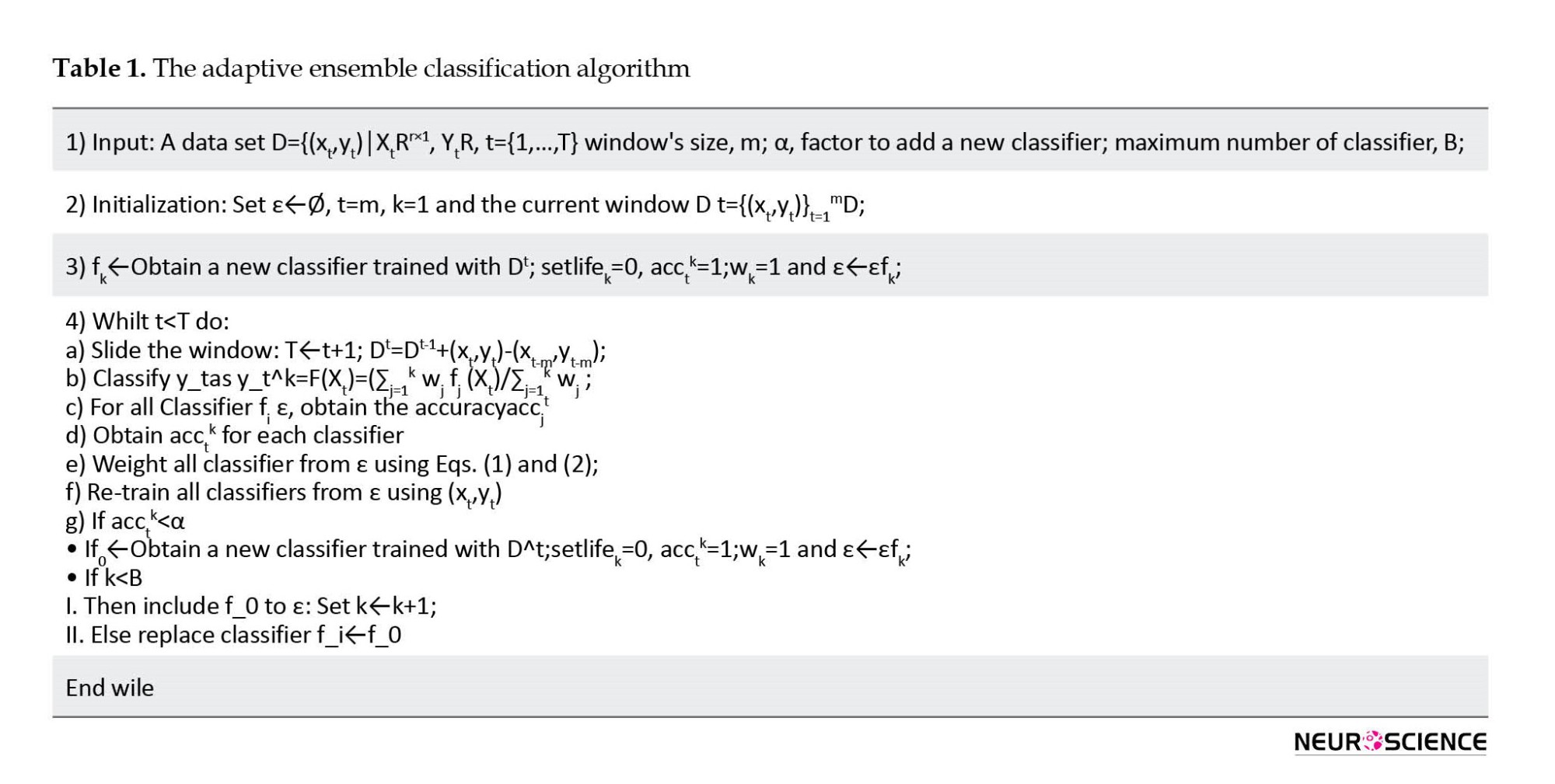

The algorithm is an ensemble built around a dynamic process, and its approach is based on the sliding window model: the window has a fixed size, and when a new instance arrives it is added to the window and the oldest instance is removed. The training data are thus continually renewed, with data that are no longer representative discarded as new data arrive. Table 1 presents the proposed online ensemble classification method.
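To make the sliding-window mechanism concrete, here is a deliberately simplified sketch; it is not the authors' network. Assumptions: the base expert is a hypothetical nearest-centroid classifier standing in for the paper's neural experts, a new expert is trained each time the window is fully refreshed, every expert is re-weighted by its accuracy on the current window, and the least accurate expert is pruned once the ensemble exceeds `max_experts` (default 12, echoing the best value reported in the results). The class names, the refresh rule, and the default window size are illustrative, and the life factor is not modeled.

```python
import random
from collections import deque


class NearestCentroid:
    """Hypothetical base expert: predicts the label of the closest class mean."""

    def __init__(self, samples):
        sums, counts = {}, {}
        for x, label in samples:
            acc = sums.setdefault(label, [0.0] * len(x))
            for j, v in enumerate(x):
                acc[j] += v
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {lab: [v / counts[lab] for v in s] for lab, s in sums.items()}

    def predict(self, x):
        return min(
            self.centroids,
            key=lambda lab: sum((a - b) ** 2 for a, b in zip(x, self.centroids[lab])),
        )


class SlidingWindowEnsemble:
    """Accuracy-weighted ensemble trained over a fixed-size sliding window (sketch)."""

    def __init__(self, window_size=20, max_experts=12):
        self.window = deque(maxlen=window_size)  # appending drops the oldest sample
        self.window_size = window_size
        self.max_experts = max_experts
        self.experts = []  # [expert, weight] pairs
        self.seen = 0

    def _accuracy(self, expert):
        hits = sum(expert.predict(x) == label for x, label in self.window)
        return hits / len(self.window)

    def update(self, x, label):
        self.window.append((x, label))
        self.seen += 1
        if self.seen % self.window_size == 0:  # window fully refreshed
            self.experts.append([NearestCentroid(self.window), 0.0])
            for pair in self.experts:  # re-weight every expert on current data
                pair[1] = self._accuracy(pair[0])
            if len(self.experts) > self.max_experts:  # prune the weakest expert
                worst = min(range(len(self.experts)), key=lambda i: self.experts[i][1])
                del self.experts[worst]

    def predict(self, x):
        votes = {}
        for expert, weight in self.experts:
            label = expert.predict(x)
            votes[label] = votes.get(label, 0.0) + weight
        return max(votes, key=votes.get) if votes else None


# Usage: a synthetic 2-D stream whose class centres swap at step 200 (concept drift).
rng = random.Random(0)
model = SlidingWindowEnsemble(window_size=20, max_experts=12)
for step in range(400):
    label = rng.choice([-1, 1])
    centre = 2.0 * label if step < 200 else -2.0 * label
    model.update((centre + rng.gauss(0, 0.5), centre + rng.gauss(0, 0.5)), label)
print(model.predict((2.0, 2.0)))  # -1: after the drift, points near (2, 2) are class -1
```

After the drift, experts trained on the old concept score near zero on the refreshed window, so their votes carry almost no weight and they are the first to be pruned, while experts trained on the new concept dominate the weighted vote.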

4. Results

EEG sub-band selection

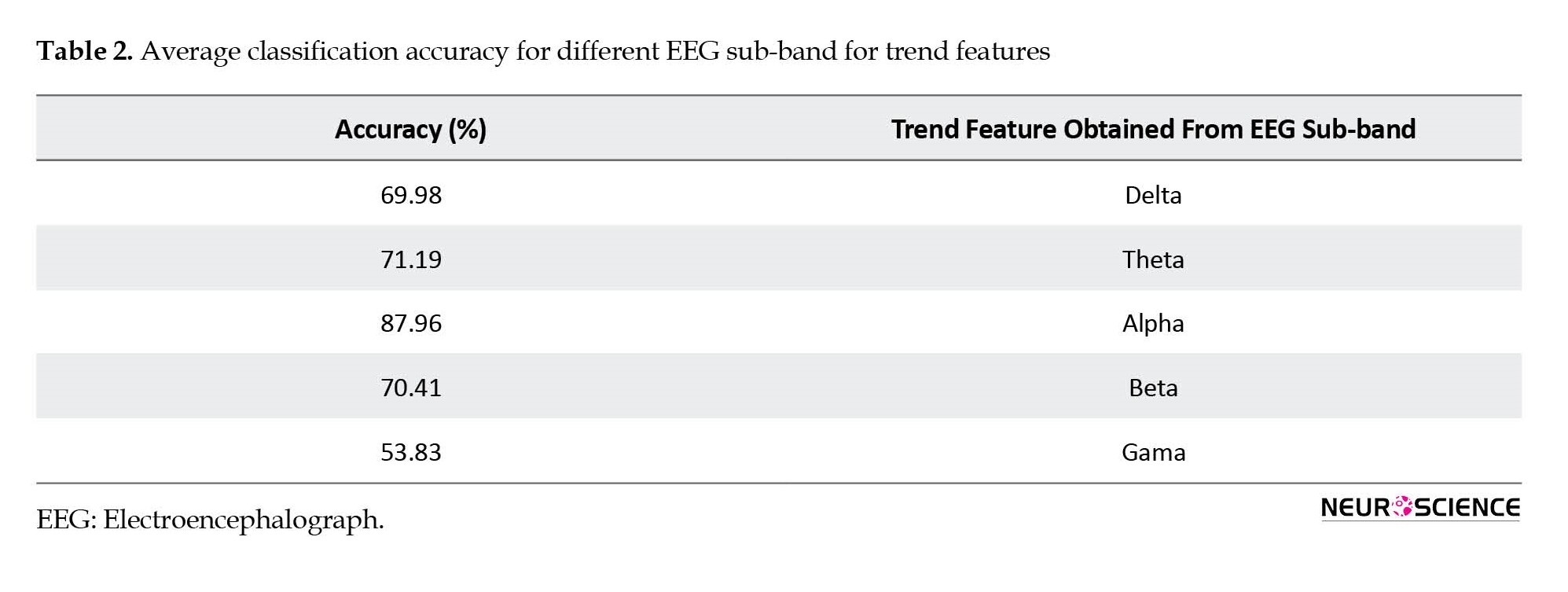

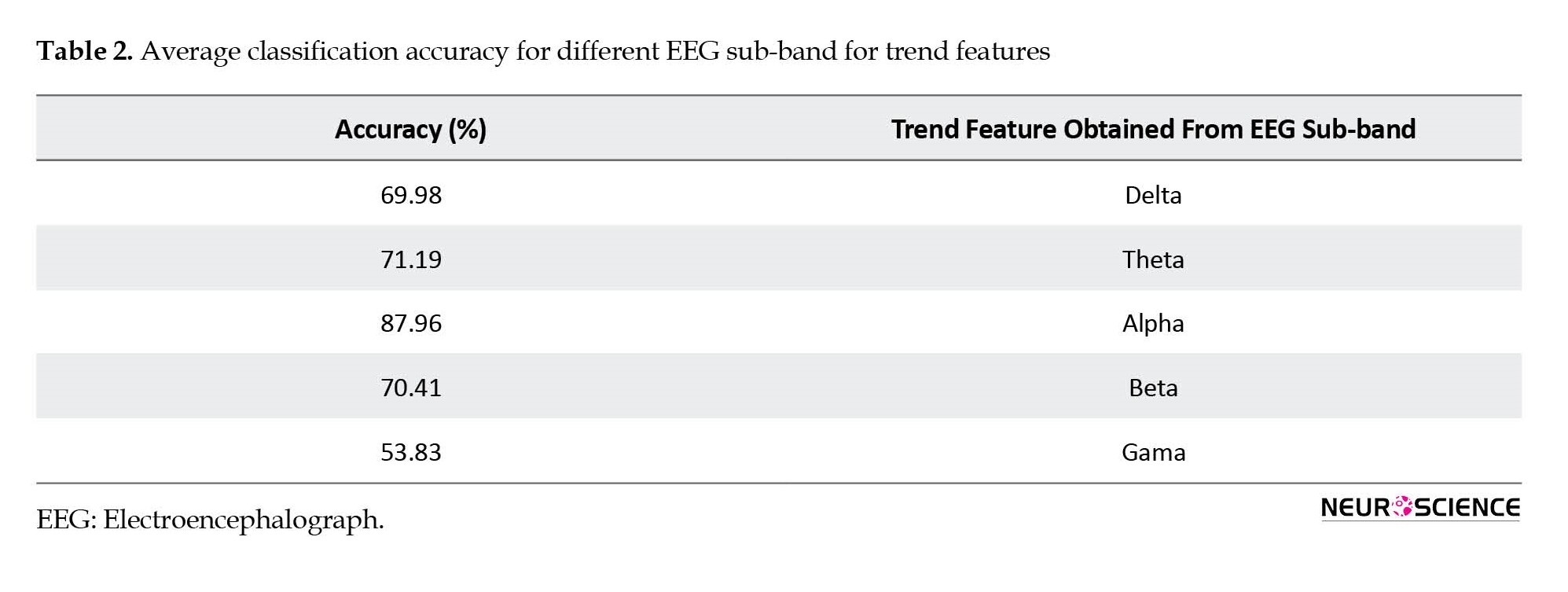

Table 2 presents the accuracy of the proposed classifier for different EEG sub-bands across personality groups. Accuracy was estimated with 10-fold cross-validation, and the highest average accuracy was obtained with the alpha sub-band.
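For reference, 10-fold cross-validation splits the samples into ten folds and uses each fold exactly once as the test set while the other nine form the training set. The generator below is a generic sketch; the name `k_fold_indices` and the shuffling seed are assumptions, and the text does not say whether folds were shuffled or kept in temporal order.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation.

    Indices are shuffled once and dealt round-robin into k folds of nearly
    equal size; each fold serves exactly once as the test set.
    """
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test


# Usage: the reported accuracy would be the mean over the 10 test folds.
splits = list(k_fold_indices(100, k=10))
print(len(splits), [len(test) for _, test in splits])
```

Because the features drift over time, contiguous (unshuffled) folds are a more conservative choice for time-varying data; the sketch shuffles only for simplicity.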

Trend features

As the classification results show, the accuracy obtained with the previous features is rarely acceptable, so we examined more conceptual features to improve the results. Given the goal of the experiment and the scope of the study, affective responses change gradually during the experiment, whereas the extracted features rise and fall irregularly in ways that may be unrelated to the emotional task. Because affective responses are time-dependent, the extracted features are also time-dependent, so the trend of each feature can serve as a new time-dependent feature that may lead to better classification results. To compute feature trends, linear and exponential regression models were fitted to the features. Table 2 presents the average classification accuracy for the extracted trend features. Principal component analysis was applied to the feature space.
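Trend features of this kind can be sketched as the slope of a least-squares line and the rate of an exponential fit over a sequence of per-window feature values. The exponential fit here linearizes y = a·exp(b·t) by regressing ln y on t, which is one common approach and an assumption about how the exponential regression was done; it requires strictly positive feature values. The function names and the sample values are hypothetical.

```python
import math


def linear_trend(values):
    """Least-squares slope of a feature sequence against its time index."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def exponential_trend(values):
    """Growth rate b of y = a * exp(b * t), fitted by regressing ln(y) on t (needs y > 0)."""
    return linear_trend([math.log(v) for v in values])


# Usage: trend of a windowed feature (illustrative values, not data from the study).
feature = [0.31, 0.33, 0.32, 0.36, 0.38, 0.41]
print(linear_trend(feature), exponential_trend(feature))
```

The slope or rate replaces the noisy window-by-window values with a single number describing the gradual direction of change, which is the property the text argues matches slowly evolving affective responses.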

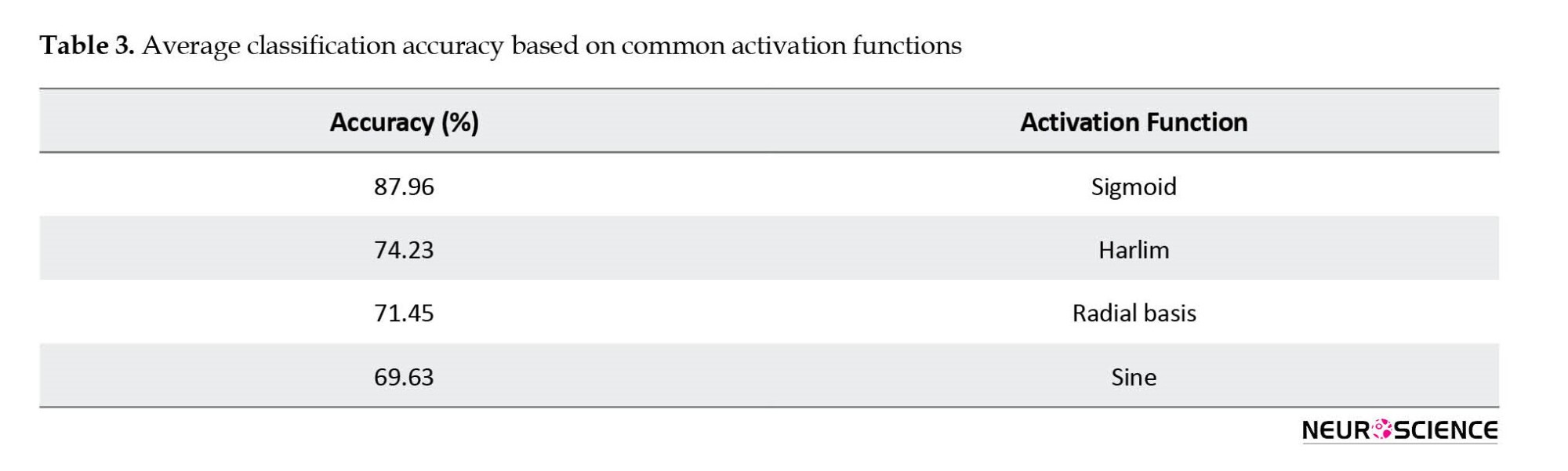

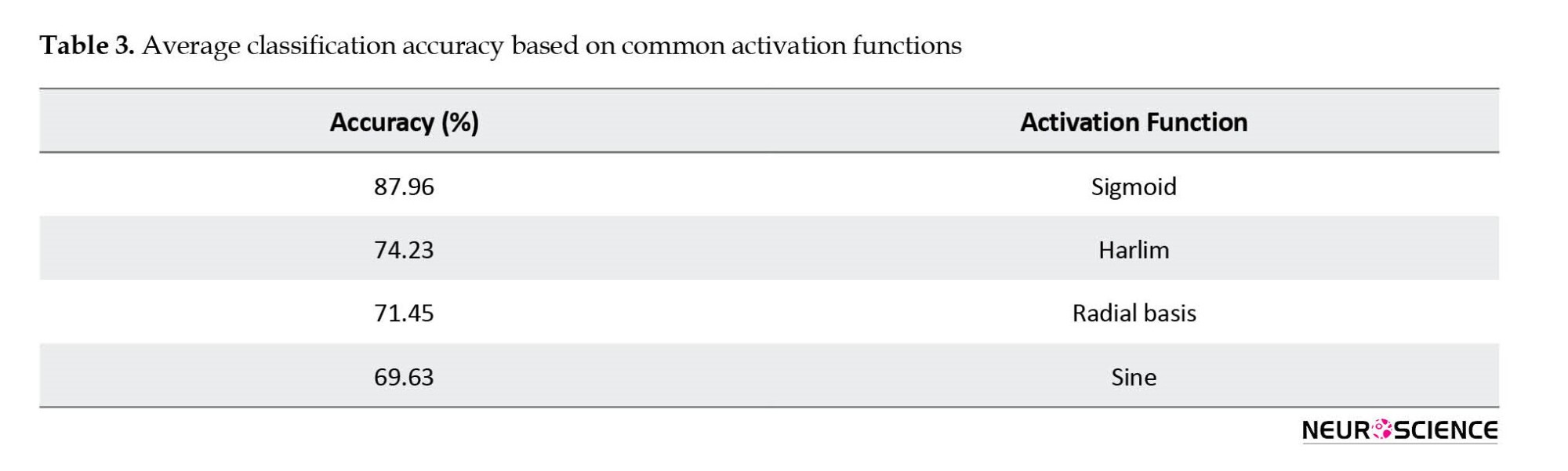

Activation function

As Table 3 shows, different activation functions yield different results. We tested the activation functions most commonly used in emotion recognition studies; among them, the sigmoid gave the highest accuracy (87.96%) and the sine the lowest (69.63%), so the sigmoid was chosen.

Effect of window size

Table 4 presents the average accuracy obtained for windows of different lengths. Because window length strongly affects accuracy, choosing an appropriate length can improve the results of the network. Very short (1) and very long (60) windows lead to low accuracy (<68%), and the best window length is 5. This is most likely because, given the duration and type of the stimulus, signal changes within a window of this length are detected correctly.

Effect of the maximum number of experts

Table 5 presents the average accuracy obtained for different maximum numbers of experts. Given the proposed structure, the maximum number of experts can drastically change the results of the network. Increasing the number of experts from 1 to 12 increases accuracy, whereas increasing it further from 12 to 30 decreases accuracy. The best value is 12, with a maximum average accuracy of 87.96%. This is most likely because too few experts reduce the amount of training information, whereas too many cause the network to overfit.

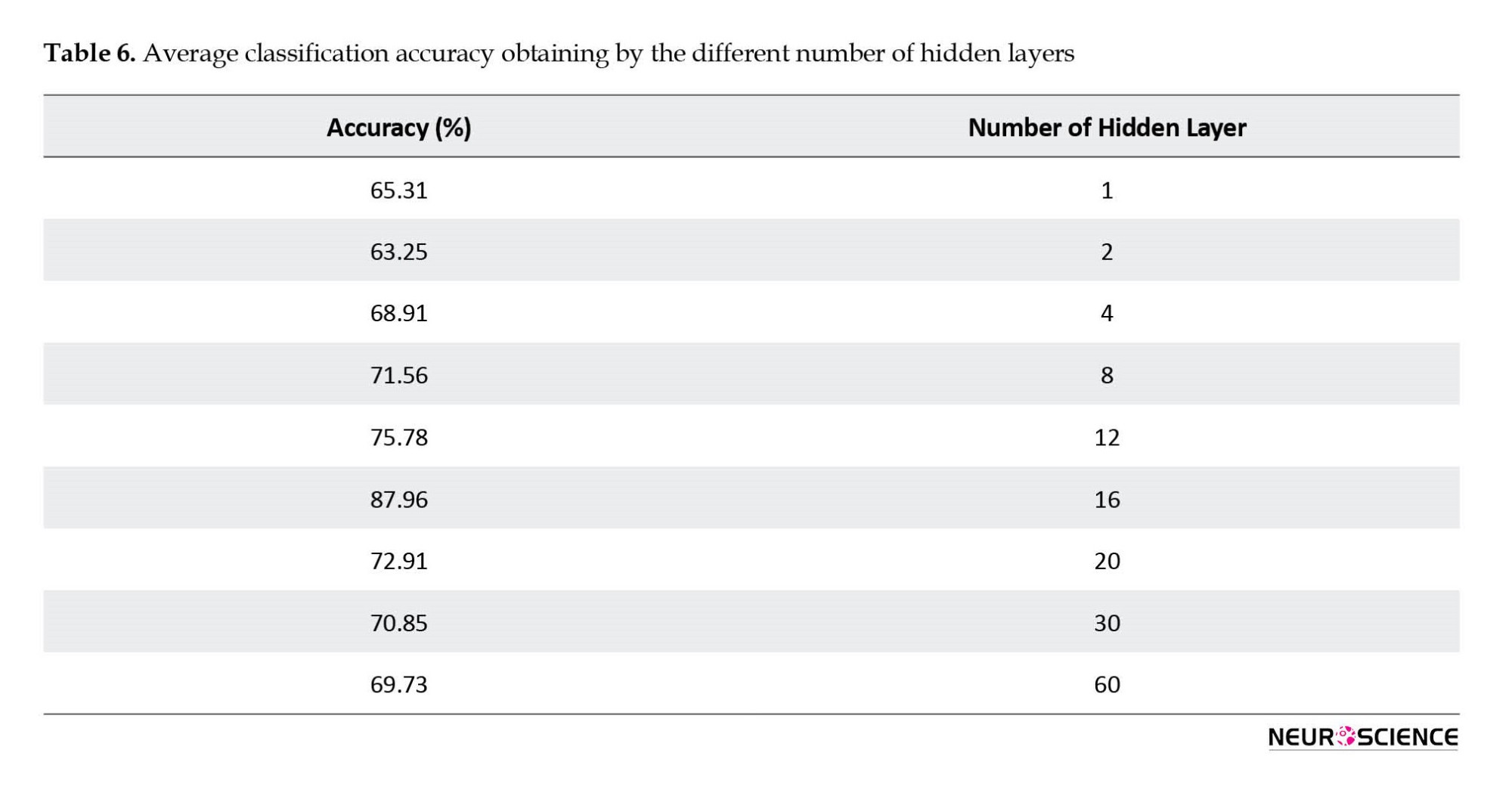

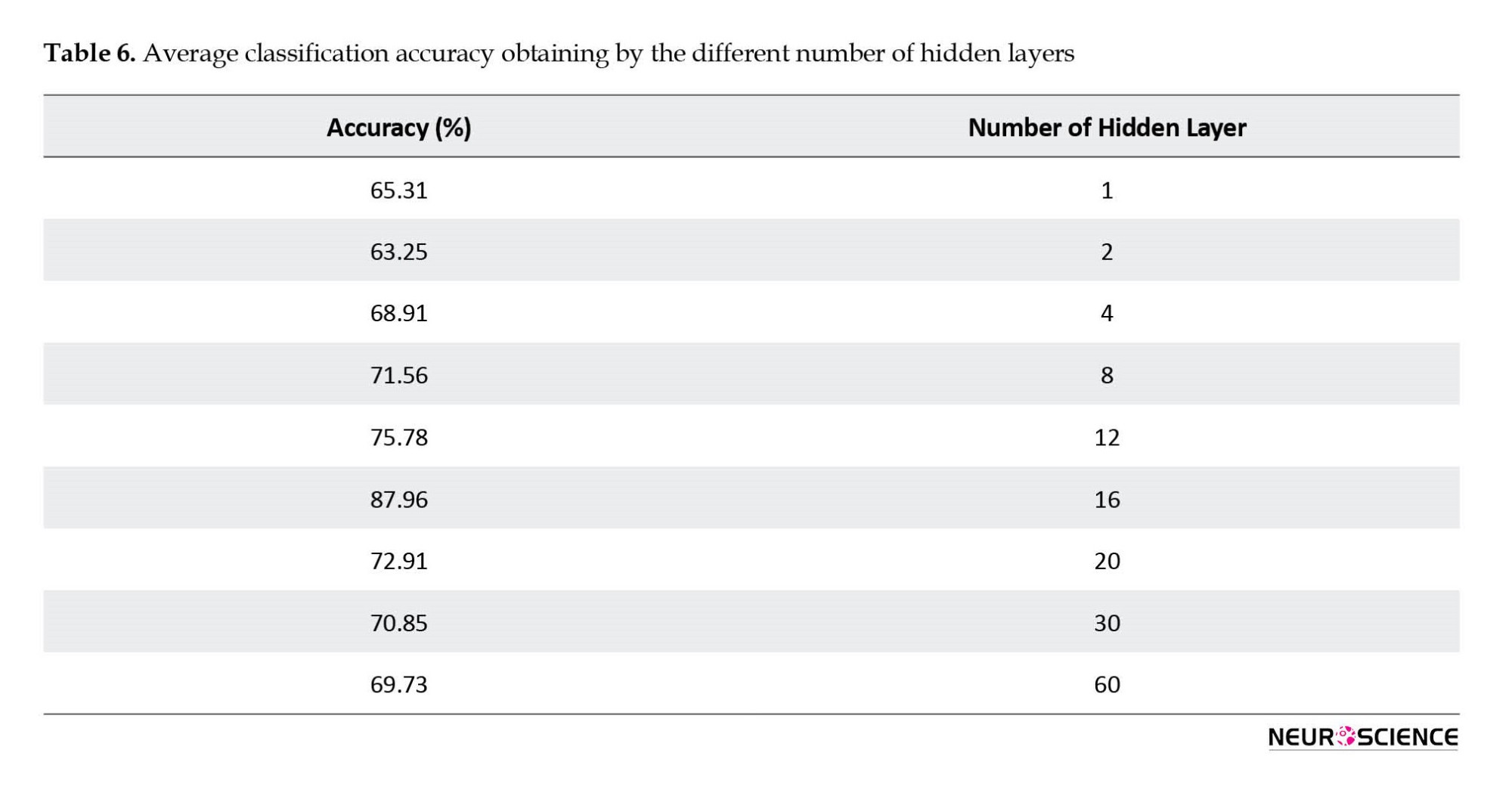

Effect of hidden layers

As shown in Table 6, increasing the number of hidden layers initially increases the accuracy of the classifier, since the hidden layers draw the boundaries between classes. However, more hidden layers do not necessarily improve the classification results and in some cases make the network too complex, so finding the optimal number of hidden layers can improve the final results. The highest accuracy (87.96%) was obtained with 16 hidden layers.

Effect of life factor

The life factor controls how quickly old data become obsolete in the proposed network structure; its value ranges from 0 to 1. To investigate the effect of this parameter on network accuracy, we increased it from 0 to 1 in steps of 0.1. As Table 7 shows, the maximum average accuracy (87.96%) was obtained with a life factor of 0.1.

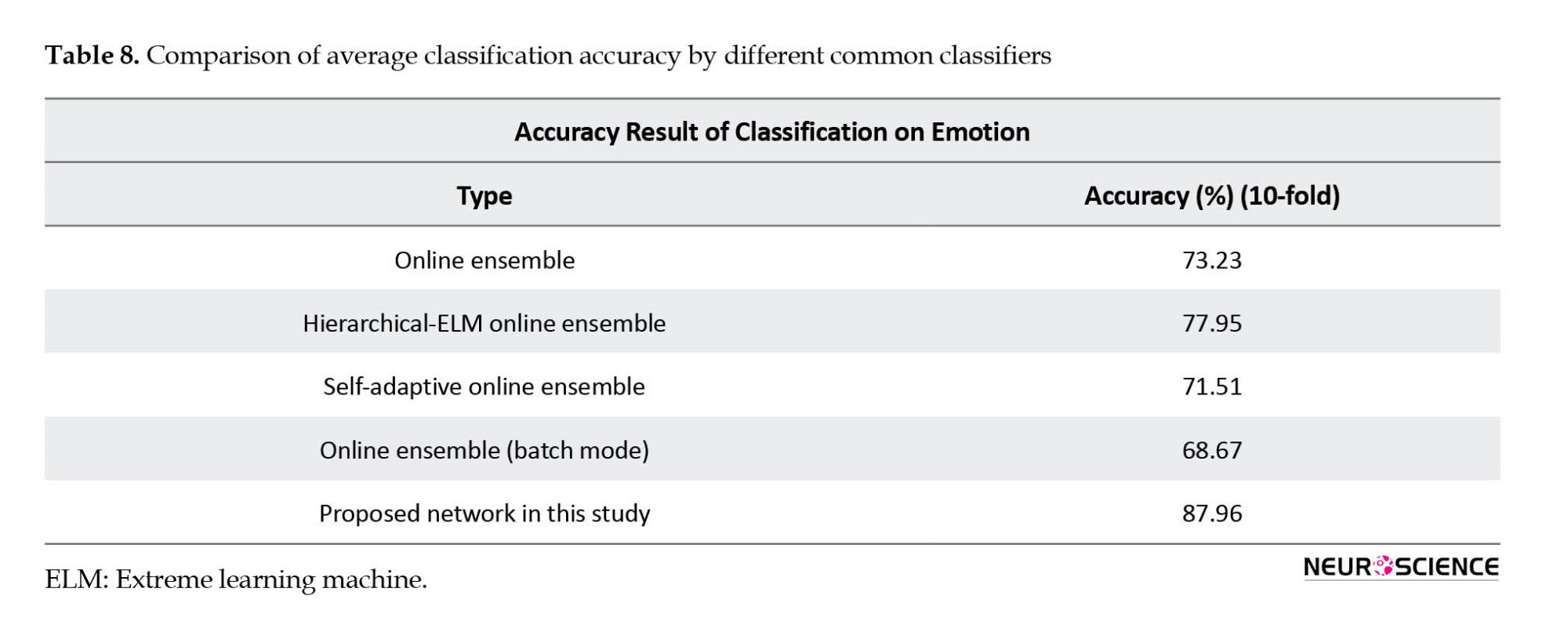

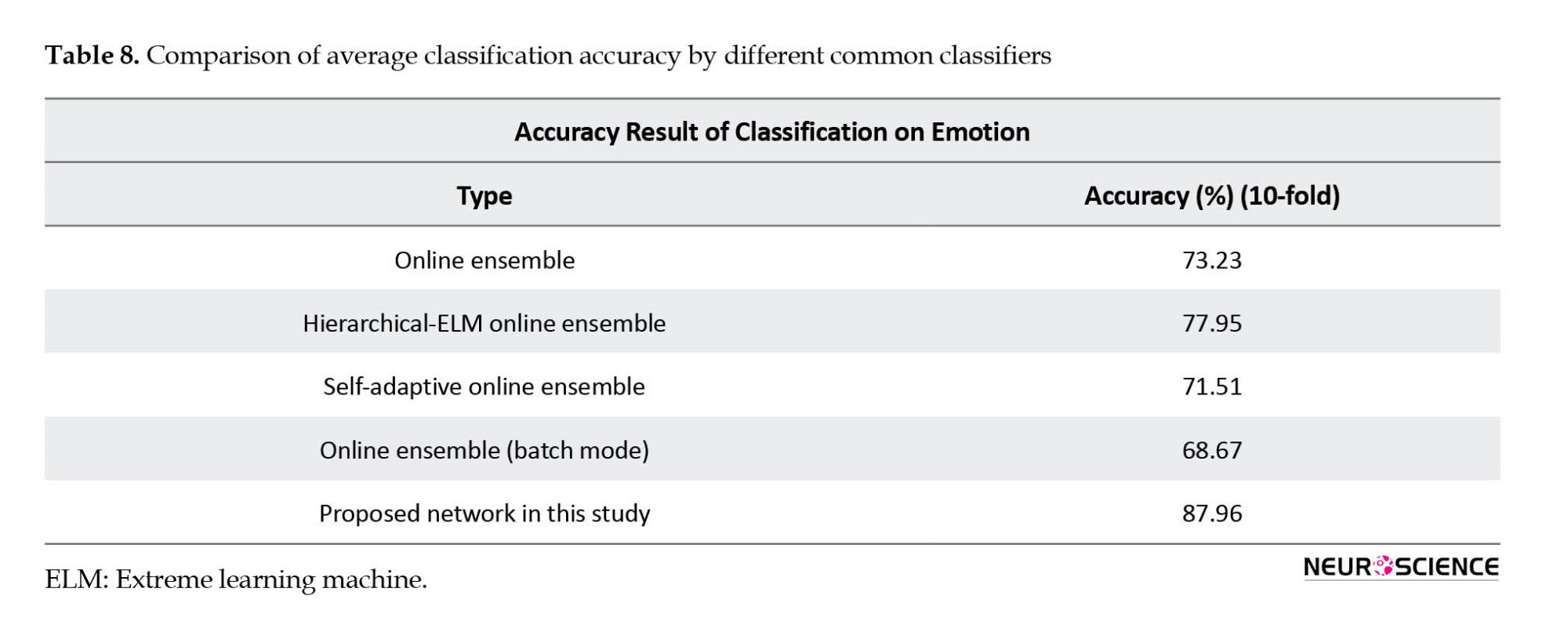

Comparison with other similar networks

Finally, after the parameters of the classifier structure were set, the proposed network was compared with several common networks. As Table 8 shows, the best of these, the hierarchical extreme learning machine (ELM) online ensemble, reached 77.95%, whereas the proposed network achieved a higher accuracy of 87.96%. To evaluate the generalization of the proposed network, Figure 1 shows the network loss and its accuracy.

Figure 4 shows the loss and accuracy of the proposed network for the training and validation data; the network fits the validation data well. The maximum number of epochs was 200.

Computational efficiency

For further investigation, the computational efficiency of the proposed algorithm was compared with that of other methods. Figure 5 shows the system used and the execution time of each network. The longest execution time belongs to the online ensemble network, and the shortest to the self-adaptive online ensemble network.

Recognizing emotions with machine learning techniques can help to better understand internal processes and brain function. The results of this study show that changes in the EEG signal are a good indicator of a person's emotional state, and the study offers further insight and implications for HM interfaces.

5. Conclusion

In this study, a new algorithm based on ensemble classifiers was presented, and its parameters were examined over a range of values to determine their optimal settings. Based on Eysenck's personality model, participants were categorized into three major groups: depressive, manic, and normal. Two types of emotional stimuli were chosen, pictures and movie clips; to achieve a synergetic effect, each movie clip was shown after similar pictures. Participants were stimulated in random order with three types of emotional stimuli, consistent or in contrast with their personality type. The best accuracy was achieved with the alpha sub-band and with feature trends, most likely owing to the personality traits of each group. Compared with common classifiers, the proposed classifier achieved the highest accuracy (87.96%). For future studies, we suggest using more electrodes in signal recording and types of stimuli other than pictures and clips. Online training methods could also make the classifier more efficient for HM interfaces in real-time applications. In addition, we suggest performing classification with networks that allow real-time analysis, for example, dynamic Bayesian networks. Finally, given the acceptable results obtained in this study, using a smaller number of electrodes is recommended.

Ethical Considerations

Compliance with ethical guidelines

All ethical principles were considered in this article. The participants were informed of the purpose of the research and its implementation stages. They were also assured of the confidentiality of their information, were free to leave the study whenever they wished, and could receive the research results if desired. Written consent was obtained from the subjects. The principles of the Declaration of Helsinki were also observed.

Funding

The paper was extracted from the PhD dissertation of Mohammad Saleh Khajeh Hosseini, approved by the Department of Biomedical Engineering, Faculty of Medical Sciences and Technologies, Science and Research Branch, Islamic Azad University.

Authors' contributions

All authors equally contributed to preparing this article.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors are grateful to all participants.

References

Alarcão, S. M., & Fonseca, M. J. (2017). Emotions recognition using EEG signals: A survey. IEEE Transactions on Affective Computing, 10(3), 374-393. [Link]

Asghar, M. A., Khan, M. J., Fawad, Amin, Y., Rizwan, M., & Rahman, M., et al. (2019). EEG-based multi-modal emotion recognition using bag of deep features: An optimal feature selection approach. Sensors, 19(23), 5218. [DOI:10.3390/s19235218]

Ashtiyani, M., Asadi, S., & Birgani, P. M. (2008). ICA-based EEG classification using fuzzy C-mean algorithm. Paper presented at: 3rd International Conference on Information and Communication Technologies: From Theory to Applications, Damascus, Syria, 23 May 2008. [DOI:10.1109/ICTTA.2008.4530056]

Ashtiyani, M., Asadi, S., Birgani, P. M., & Khordechi, E. A. (2008). EEG classification using neural networks and independent component analysis. Paper presented at: 4th Kuala Lumpur International Conference on Biomedical Engineering 2008, 25–28 June 2008, Kuala Lumpur, Malaysia (pp. 179-182). Berlin: Springer. [Link]

Atkinson, J., & Campos, D. (2016). Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Systems with Applications, 47, 35-41. [DOI:10.1016/j.eswa.2015.10.049]

Brunner, C., Fischer, A., Luig, K., & Thies, T. (2012). Pairwise support vector machines and their application to large scale problems. The Journal of Machine Learning Research, 13(1), 2279-2292. [Link]

Craik, A., He, Y., & Contreras-Vidal, J. L. (2019). Deep learning for electroencephalogram (EEG) classification tasks: A review. Journal of Neural Engineering, 16(3), 031001. [DOI:10.1088/1741-2552/ab0ab5] [PMID]

Einalou, Z., Maghooli, K., Setarehdan, S. K., & Akin, A. (2017). Graph theoretical approach to functional connectivity in prefrontal cortex via fNIRS. Neurophotonics, 4(4), 041407. [DOI:10.1117/1.NPh.4.4.041407] [PMID]

Eslamieh, N., & Einalou, Z. (2018). Investigation of functional brain connectivity by electroencephalogram signals using Data Mining Technique. Journal of Cognitive Science, 19(4), 551-576. [Link]

Fürbass, F., Kural, M. A., Gritsch, G., Hartmann, M., Kluge, T., & Beniczky, S. (2020). An artificial intelligence-based EEG algorithm for detection of epileptiform EEG discharges: Validation against the diagnostic gold standard. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology, 131(6), 1174–1179. [DOI:10.1016/j.clinph.2020.02.032] [PMID]

Gao, Q. W., Wang, C. H., Wang, Z., Song, X. L., Dong, E. Z., & Song, Y. (2020). EEG based emotion recognition using fusion feature extraction method. Multimedia Tools and Applications, 79, 27057–27074. [DOI:10.1007/s11042-020-09354-y]

Greco, A., Valenza, G., Citi, L., & Scilingo, E. P. (2016). Arousal and Valence Recognition of Affective Sounds based on Electrodermal Activity. IEEE Sensors Journal, 17(3), 716-725. [DOI:10.1109/JSEN.2016.2623677]

Guyon-Harris, K. L., Carell, R., DeVlieger, S., Humphreys, K. L., & Huth-Bocks, A. C. (2021). The emotional tone of child descriptions during pregnancy is associated with later parenting. Infant Mental Health Journal, 42(5), 731–739. [PMID]

Hanjalic, A., & Xu, L. Q. (2005). Affective video content representation and modeling. IEEE Transactions on Multimedia, 7(1), 143-154. [DOI:10.1109/TMM.2004.840618]

Jirayucharoensak, S., Pan-Ngum, S., & Israsena, P. (2014). EEG-based emotion recognition using deep learning network with principal component based covariate shift adaptation. The Scientific World Journal, 2014. [DOI:10.1155/2014/627892]

Mehmood, R. M., & Lee, H. J. (2015). Emotion classification of EEG brain signal using SVM and KNN. Paper presented at: 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June 2015 - 03 July 2015. [DOI:10.1109/ICMEW.2015.7169786]

Sakalle, A., Tomar, P., Bhardwaj, H., Acharya, D., & Bhardwaj, A. (2021). A LSTM based deep learning network for recognizing emotions using wireless brainwave driven system. Expert Systems with Applications, 173, 114516. [DOI:10.1016/j.eswa.2020.114516]

Sammler, D., Grigutsch, M., Fritz, T., & Koelsch, S. (2007). Music and emotion: Electrophysiological correlates of the processing of pleasant and unpleasant music. Psychophysiology, 44(2), 293–304. [DOI:10.1111/j.1469-8986.2007.00497.x] [PMID]

Seifi, S., Nowshiravan Rahatabad, F., & Einalou, Z. (2018). Detection of different levels of multiple sclerosis by assessing nonlinear characteristics of posture. International Clinical Neuroscience Journal, 5(4), 115-120. [DOI:10.15171/icnj.2018.23]

Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., & Liao, D., et al. (2018). A review of emotion recognition using physiological signals. Sensors (Basel), 18(7), 2074. [PMID]

Subasi, A., Tuncer, T., Dogan, S., Tanko, D., & Sakoglu, U. (2021). EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier. Biomedical Signal Processing and Control, 68, 102648. [DOI:10.1016/j.bspc.2021.102648]

Tyng, C. M., Amin, H. U., Saad, M. N. M., & Malik, A. S. (2017). The influences of emotion on learning and memory. Frontiers in Psychology, 8, 1454. [PMID]

Verma, G. K., Tiwary, U. S., & Agrawal, S. (2011). Multi-algorithm fusion for speech emotion recognition. In: A. braham, J.L. Mauri, J.F. Buford, J. Suzuki, & S. M. Thampi (Eds) , Advances in computing and communications. Berlin: Springer. [Link]

Wang, Y., Zhang, L., Xia, P., Wang, P., Chen, X., & Du, L., et al. (2022). EEG-based emotion recognition using a 2D CNN with different kernels. Bioengineering, 9(6), 231. [DOI:10.3390/bioengineering9060231]

Wu, W., Zhang, Y., Jiang, J., Lucas, M. V., Fonzo, G. A., & Rolle, C. E., et al. (2020). An electroencephalographic signature predicts antidepressant response in major depression. Nature Biotechnology, 38(4), 439-447. [Link]

Yin, Z., Zhao, M., Wang, Y., Yang, J., & Zhang, J. (2017). Recognition of emotions using multimodal physiological signals and an ensemble deep learning model. Computer Methods and Programs in Biomedicine, 140, 93–110. [PMID]

Yoon, H. J., & Chung, S. Y. (2013). EEG-based emotion estimation using Bayesian weighted-log-posterior function and perceptron convergence algorithm. Computers in Biology and Medicine, 43(12), 2230-2237. [DOI:10.1016/j.compbiomed.2013.10.017]

Zhang, Q., & Lee, M. (2012). Emotion development system by interacting with human EEG and natural scene understanding. Cognitive Systems Research, 14(1), 37-49. [DOI:10.1016/j.cogsys.2010.12.012]

Emotions play a critical role in human interactions; they are mental states associated with a wide variety of behaviors and feelings. Because emotions are affected by many subject-dependent factors, emotion research is one of the most complicated fields in psychology. One topic of particular interest is emotion in human-machine (HM) interaction (Alarcão, 2017): an emotion-enabled HM interface can adapt to the user's feelings and respond accordingly. Emotions are reflected in facial expressions, voice intonation, body language, physiological signals, and other channels (Craik, 2019; Einalou, 2017), and several physiological signals can be used to measure them. The electroencephalogram (EEG) is a time-series measure of the brain's electrical activity. It has been used in many studies of emotion because of its high temporal resolution, low cost, and high correlation with emotions (As, 2021; Sammler, 2007). In a two-dimensional model, emotions are described along two axes, valence and arousal (VA). Human emotions can be inferred by decoding facial expressions, changes in speech and behavior, and the neurophysiological signals that accompany emotional changes; integrating these cues with the individual's emotional state can enhance HM interaction (Tyng et al., 2017; Wu, 2020; Seifi, 2018). In studies of human emotional states, emotions can be classified into several discrete states according to their VA levels. Valence and arousal form the two core dimensions of affective state (Hanjalic & Xu, 2005), and together they allow multiple emotional states to be defined systematically. In addition, emotional changes are related to the activity of the limbic system, a part of the brain that controls external behaviors and internal cognitive patterns.
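The idea of deriving discrete states from VA levels can be illustrated with a small quadrant mapping. This is a hypothetical sketch, not the labeling scheme used in this study: it assumes valence and arousal scaled to [-1, 1], a neutral band around the origin, and conventional quadrant labels from the circumplex view of affect. The function name `va_label` and the band width are illustrative.

```python
def va_label(valence, arousal, neutral_band=0.1):
    """Map a (valence, arousal) pair, each scaled to [-1, 1], to a coarse emotion label.

    The quadrant labels and the neutral band width are illustrative assumptions,
    not the labeling scheme used in this study.
    """
    # Points close to the origin are treated as neutral
    if abs(valence) < neutral_band and abs(arousal) < neutral_band:
        return "neutral"
    if valence >= 0:
        # High valence: excited (high arousal) or calm (low arousal)
        return "happy/excited" if arousal >= 0 else "calm/relaxed"
    # Low valence: distressed (high arousal) or sad (low arousal)
    return "angry/afraid" if arousal >= 0 else "sad/depressed"


if __name__ == "__main__":
    for v, a in [(0.8, 0.6), (-0.7, -0.5), (0.05, -0.02)]:
        print(v, a, va_label(v, a))
```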
Zhang and Lee showed a link between the valence dimension and the activation level of the anterior parietal cortex, and between the arousal dimension and the right supramarginal gyrus (Zhang & Lee, 2012). These relationships suggest that emotional cues can be extracted from neurophysiological signals, which motivates the use of neuroimaging techniques. Many studies have established EEG as an effective tool for affective computing because of its high temporal resolution and reliable repeatability (Yoon & Chung, 2013; Eslamieh, 2018). Thanks to advances in biomedical recording equipment, EEG, a non-invasive method, can now be easily measured and recorded with portable and wireless sensors.

Time, frequency, and spatial features can be accurately extracted from filtered EEG signals, and these features have served as suitable criteria for distinguishing human emotional states in many studies (Asa, 2021). They can be used as training data for many classifiers, including deep learning networks (Brunner et al., 2012). Emotion classification studies fall into two groups: offline classification and online classification. Atkinson and Campos (2016) showed that a hybrid EEG emotion classifier combining feature selection with a support vector machine (SVM) can improve emotion recognition, reporting accuracies of 73.14% for valence and 73.06% for arousal. Verma et al. (2011) used the discrete wavelet transform to extract features for emotion recognition and classified emotions with SVM and k-nearest neighbor (KNN) classifiers, reporting a highest accuracy of 81.45%. Yin et al. (2017) developed an emotion classification method based on stacked autoencoder networks to improve deep learning performance (Fürbass, 2020), reporting highest accuracies of 84.18% for arousal and 83.04% for valence.

Mehmood and Lee (2015) extracted EEG features based on the late positive potential and classified emotions with SVM and KNN classifiers. Jirayucharoensak et al. (2014) proposed a new approach to EEG-based emotion recognition using a stacked autoencoder network. To improve the stationarity of the EEG features, they applied principal component analysis (PCA), and SVM and naive Bayes classifiers were used on the valence and arousal dimensions. As these studies show, machine learning is widely used to detect emotions from features extracted from EEG signals. However, online evaluation of classifier designs has received less attention. For example, a validation procedure that is convenient and reliable offline may not be applicable to an online or real-time process.

Tyng et al. (2017) used an autoencoder on features extracted from the EEG signal and reported online emotion classification results. Specifically, the Emotion EEG Dataset (SEED) was divided into non-overlapping training and test sets, and the average accuracy reached 77.88%. Wang et al. (2022) designed an emotion classifier using a hierarchical Bayesian model and reported acceptable results. Yin et al. (2017) developed an online emotion classifier to derive stable EEG features, and Gao et al. (2020) designed a reliable classifier to find common patterns in EEG features. When the number of participants is limited, the recorded data may give the researcher misleading information. Classifying data with online rather than static methods, whether for generic or subject-specific models, can provide more accurate information and make the feature extraction model more efficient. However, the EEG signal is a time series whose information is regulated by the body's physiological systems, and the importance of its features is governed by the inherent dynamics of the central nervous system.

In this study, we present an adaptive classification method that improves emotion recognition using an online approach applicable to each participant individually (Greco, 2017). This classifier can be a suitable alternative to generic classification, especially when the number of participants is limited. The method builds on the recursive feature elimination (RFE) algorithm of Guyon et al. (2002) and on the work of Ashtiyani et al. (2008). RFE ranks all features by their contribution to the classification margin of a binary SVM (Shu et al., 2018; Khordechi, 2008). We generalized RFE to dynamic RFE (D-RFE), which selects the optimal EEG features by analyzing the data and features both in real time and over their historical trend.
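The static RFE step that D-RFE generalizes can be sketched in plain Python. This is a minimal sketch of SVM-RFE in the spirit of Guyon et al. (2002), not the authors' D-RFE: it assumes a linear SVM without a bias term, trained with Pegasos-style subgradient descent (swapped in to avoid external libraries), and removes the feature with the smallest squared weight at each step. The toy data generator `make_toy_data` and all function names are hypothetical.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=50):
    """Linear SVM (no bias) trained with Pegasos-style subgradient descent.

    X is a list of feature vectors and y a list of labels in {-1, +1}.
    """
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1 - eta * lam) * wj for wj in w]  # L2 regularization shrink
            if margin < 1:  # hinge loss active: move toward the sample
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def svm_rfe(X, y, n_keep=1, **svm_kwargs):
    """Static SVM-RFE: retrain, then drop the feature with the smallest w_j^2.

    Returns (kept feature indices, eliminated indices in elimination order).
    """
    remaining = list(range(len(X[0])))
    eliminated = []
    while len(remaining) > n_keep:
        Xr = [[row[j] for j in remaining] for row in X]
        w = train_linear_svm(Xr, y, **svm_kwargs)
        worst = min(range(len(remaining)), key=lambda k: w[k] ** 2)
        eliminated.append(remaining.pop(worst))
    return remaining, eliminated


def make_toy_data(seed=0, n=200):
    """Hypothetical data: feature 0 separates the classes, features 1-3 are noise."""
    rng = random.Random(seed)
    X, y = [], []
    for i in range(n):
        label = 1 if i % 2 == 0 else -1
        X.append([3.0 * label + rng.gauss(0, 0.5)] + [rng.gauss(0, 1) for _ in range(3)])
        y.append(label)
    return X, y


if __name__ == "__main__":
    X, y = make_toy_data()
    kept, dropped = svm_rfe(X, y, n_keep=1)
    print("kept:", kept, "eliminated (first to last):", dropped)
```

In practice, scikit-learn's `RFE` wrapped around `SVC(kernel="linear")` performs the same static procedure; the real-time, history-aware part of D-RFE is not shown here.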

This paper is organized as follows. First, the EEG database is introduced and the required preprocessing is described, followed by a detailed description of the feature extraction methods. The second part presents the proposed classification algorithm. The third part reports the results of the algorithm and the model parameters, as well as feature selection and its effect on classifier performance. The discussion compares the results with those of conventional methods. Finally, the performance, limitations, and advantages of the proposed method are summarized.

2. Materials and Methods

Eysenck personality questionnaire (EPQ)

As depicted in Figure 1, the Eysenck personality questionnaire (EPQ) is primarily based on two biologically based, independent dimensions of temperament, N and E, plus a third dimension, P.

N- Neuroticism/Stability: Neuroticism represents the threshold of excitability of the nervous system. Neurotic (unstable) people are unable to control their emotional reactions, whereas emotionally stable people can control their emotions.

E- Extraversion/Introversion: Extraversion is characterized by high positive affect. According to Eysenck's arousal theory, extroverts are chronically under-aroused and need external stimulation to raise their arousal, whereas introverts are chronically over-aroused and need quiet to lower it.

P- Psychoticism/Socialisation: Psychoticism is associated with liability.

L- Lie scale: Measures the extent to which respondents lie to control their scores.

Because the dimensions of the Eysenck questionnaire are continuous, four personality categories were defined from the recorded scores:

Introverts: Score lower than 11 on the extroversion dimension.

Extroverts: Score higher than 12 on the extroversion dimension.

Stables: Score lower than 15 on the neuroticism dimension.

Unstables: Score higher than 15 on the neuroticism dimension.
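The thresholds above can be applied mechanically, as in the sketch below. It follows the stated cut-offs only; scores between them (extraversion 11 to 12, neuroticism exactly 15) are left unassigned because the text does not cover them. The group names come from the Subjects section, whose criterion for the normal group is not stated, so only the two unstable groups are mapped. Function and dictionary names are hypothetical.

```python
def epq_category(extraversion, neuroticism):
    """Classify EPQ scores with the thresholds stated in the text.

    Scores that fall between the stated thresholds (E = 11-12, N = 15) are
    left unassigned (None), because the text does not cover them.
    """
    if extraversion < 11:
        e_type = "introvert"
    elif extraversion > 12:
        e_type = "extrovert"
    else:
        e_type = None
    if neuroticism < 15:
        n_type = "stable"
    elif neuroticism > 15:
        n_type = "unstable"
    else:
        n_type = None
    return e_type, n_type


# Tendencies named in the Subjects section; the normal group's criterion is not stated.
TENDENCY = {("introvert", "unstable"): "depression", ("extrovert", "unstable"): "mania"}


if __name__ == "__main__":
    for e, n in [(8, 18), (16, 19), (11.5, 15), (14, 9)]:
        category = epq_category(e, n)
        print((e, n), category, TENDENCY.get(category))
```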

Subjects

A total of 270 healthy volunteers aged 19–30 years (Mean±SD 25.01±3.13 years) participated in this study. Based on the highest scores obtained on the Eysenck questionnaire, three groups of volunteers were selected, each including 12 healthy men and women. The unstable-introvert group, which tends toward depression, was aged 17–28 years (Mean±SD 25.0±3.11 years); the unstable-extrovert group, which tends toward mania, was aged 18–29 years (Mean±SD 25.21±3.07 years); and the normal group was aged 17–29 years (Mean±SD 26.32±3.12 years). Table 1 presents the results of screening participants with the EPQ. Overall, 48% of the population were men and 52% were women.

Stimuli

Emotions can be mapped onto the VA dimensions. Based on these dimensions, and to maximize the differentiation of the induced emotions, three emotional states were selected: sadness, happiness, and neutral. These emotions are consistent with the participant groups. Among several types of emotional stimuli, such as music, text, and voice, we chose pictures and movie clips because of their desirable properties. Pictures with the maximum VA scores for these emotions were selected from the Geneva Affective Picture Database. Forty individuals who did not take part in the main experiment rated the movie clips using the Positive and Negative Affect Schedule (PANAS); of 36 candidate clips, the 12 with the highest PANAS ratings were selected. The chosen pictures and movie clips were categorized as sadness, happiness, or neutral.

Experimental protocol

Participants filled out the informed consent form and the EPQ and then sat in a resting chair in front of a monitor in a quiet room. EEG electrodes were placed at frontal positions (Fp1, Fp2, F3, F4), and the impedance of each electrode was kept below 5 kΩ. To obtain good-quality data, participants were asked to minimize their movements during the experiment. EEG was recorded with a Thought Technology ProComp Infiniti system at a sampling rate of 256 Hz; the software platform was National Instruments LabVIEW 2019. The EEG signal was first recorded for 2 minutes with eyes open and with eyes closed, and this recording was used to compute resting-state features of the frontal signals. All participants then completed the three types of emotion induction trials in random order. Each trial consisted of 30 pictures followed by a movie clip. Participants were asked to mark the peak of the induced emotion by pressing the space key on the computer keyboard. At the end of the trials, participants were asked to complete the EPQ. Figure 2 shows the stimulation procedure.

Signal processing

Recorded EEG is affected by various types of noise and artifacts. The independent component analysis (ICA) algorithm extracts statistically independent components from a mixture of sources, and adaptive mixture ICA (AMICA) computes the maximum likelihood estimate for a mixture model of independent components. Because AMICA has shown successful results on EMG artifacts, it was chosen among the ICA-based denoising methods to remove EMG and blink artifacts. In this study, the recorded EEG was manually inspected for EMG noise and motion artifacts, and AMICA was applied to each affected segment of the signal. A simple threshold filter was applied to remove blink artifacts.

Feature extraction is one of the key factors for success in classification problems. This study uses several emotion-related feature types: wavelet, nonlinear dynamical, and power spectrum features. All features were extracted using a 2-s Hamming window with 50% overlap.
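The segmentation step can be sketched as follows, assuming a single-channel signal stored as a Python list. At 256 Hz, a 2-s window is 512 samples, and 50% overlap gives a hop of 256 samples. Dropping an incomplete final window is our assumption; the text does not say how the end of the signal was handled. The function names are hypothetical.

```python
import math


def hamming(n):
    """Symmetric Hamming window of length n (n >= 2)."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def sliding_windows(signal, fs=256, win_sec=2.0, overlap=0.5):
    """Split a 1-D signal into Hamming-tapered windows.

    fs=256 Hz and a 2-s window with 50% overlap follow the text; an incomplete
    final window is dropped (assumption).
    """
    n = int(round(win_sec * fs))          # samples per window (512 here)
    hop = int(round(n * (1 - overlap)))   # step between window starts (256 here)
    taper = hamming(n)
    segments = []
    for start in range(0, len(signal) - n + 1, hop):
        segment = signal[start:start + n]
        segments.append([s * w for s, w in zip(segment, taper)])
    return segments


if __name__ == "__main__":
    ten_seconds = [1.0] * (256 * 10)
    print(len(sliding_windows(ten_seconds)), "windows of 512 samples")
```

Each window is then passed to the feature extractors, so a 10-s recording yields 9 overlapping windows.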

Wavelet transform

The wavelet transform is one of the best time-frequency analysis methods for EEG signal processing [16]. It decomposes a signal into a family of wavelet functions, and the correlation between each wavelet function and the signal yields the wavelet coefficients. The coefficients therefore measure how closely the wavelet function matches the EEG signal at a given time and resolution (frequency) level. In this study, we applied the fourth-order Daubechies wavelet because of its favorable time-frequency properties for EEG signals. The resolution levels correspond approximately to the standard EEG frequency bands.

Relative wavelet energy was computed for each level by Equation 1:

$$P_l = \frac{E_l}{E_t} \tag{1}$$

where $E_l$ is the energy at level $l$, computed from the corresponding wavelet coefficients $C_l(k)$ as $E_l = \sum_k |C_l(k)|^2$, and $E_t$ is the total energy, computed as the sum of the energies at all levels.

Then the wavelet entropy was calculated by Equation 2:

$$WE = -\sum_{l} P_l \ln P_l \tag{2}$$

where $P_l$ is the relative energy at level $l$.

Wavelet entropy and relative wavelet energy were used as time-frequency domain features.
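The relative wavelet energy and wavelet entropy can be computed from per-level coefficients in a few lines. This sketch assumes the coefficients are already available as one list per level (for example, from a db4 decomposition such as PyWavelets' `pywt.wavedec(x, "db4", level=...)`, which is not called here), that the energy of a level is the sum of its squared coefficients, and that the entropy is the Shannon form WE = -sum_l P_l ln P_l. The function names are hypothetical.

```python
import math


def relative_wavelet_energy(coeffs):
    """coeffs: list of coefficient lists, one per decomposition level.

    Returns P_l = E_l / E_t, with E_l the sum of squared coefficients at level l
    and E_t the sum of E_l over all levels.
    """
    energies = [sum(c * c for c in level) for level in coeffs]
    total = sum(energies)
    return [e / total for e in energies]


def wavelet_entropy(coeffs):
    """Shannon wavelet entropy -sum_l P_l ln P_l (levels with P_l = 0 contribute 0)."""
    return -sum(p * math.log(p) for p in relative_wavelet_energy(coeffs) if p > 0)


if __name__ == "__main__":
    # Hypothetical coefficients for three levels
    demo = [[0.5, -0.2], [1.0, 0.8, -0.6], [0.1, 0.05, -0.1, 0.02]]
    print(relative_wavelet_energy(demo), wavelet_entropy(demo))
```

Entropy is maximal when energy is spread evenly across levels and zero when a single level holds all of it, which is why it complements the per-level relative energies as a feature.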

Power spectrum

Power spectrum features can be used to investigate the dynamics of EEG signals, and the relative power [17] of each EEG sub-band can be used to monitor the dynamics of emotional changes. To calculate the power spectral density, the EEG signal was divided into Hamming-windowed epochs of 256 samples, each zero-padded to 512 samples, and a 512-point Fourier transform was applied. The relative power was calculated for the theta (θ, 4–7 Hz), alpha (α, 8–13 Hz), beta (β, 13–30 Hz), and gamma (γ, 31–50 Hz) sub-bands using Equation 3:

$$RP_b = \frac{P_b}{P_s} \tag{3}$$

where $RP_b$ is the relative power in frequency band $b$, $P_b$ is the power in band $b$, and $P_s$ is the total signal power.
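The relative band power can be sketched in plain Python as follows. The sketch assumes the relative power is band power divided by total signal power, takes the total as the one-sided power from 0 Hz to fs/2, and uses a direct DFT instead of an FFT so that it needs no external libraries. The band edges are those listed in the text; the function name and the demo signal are hypothetical.

```python
import cmath
import math

# Sub-bands as listed in the text (Hz); alpha and beta share the 13 Hz edge.
BANDS = {"theta": (4, 7), "alpha": (8, 13), "beta": (13, 30), "gamma": (31, 50)}


def relative_band_power(epoch, fs=256, nfft=512, bands=BANDS):
    """Relative power (band power / total power) for one epoch.

    The epoch is Hamming-tapered, zero-padded to nfft points, and transformed with
    a direct DFT (an FFT routine would be used in practice). Total power is the
    one-sided power from 0 Hz to fs/2, which is an assumption.
    """
    n = len(epoch)
    if nfft < n:
        raise ValueError("nfft must be at least the epoch length")
    taper = [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]
    x = [e * w for e, w in zip(epoch, taper)]
    # Zero-padding: samples n..nfft-1 are zero, so the DFT sum only runs over 0..n-1
    power = []
    for k in range(nfft // 2 + 1):
        acc = sum(x[t] * cmath.exp(-2j * math.pi * k * t / nfft) for t in range(n))
        power.append(abs(acc) ** 2)
    freqs = [k * fs / nfft for k in range(nfft // 2 + 1)]  # 0.5 Hz resolution here
    total = sum(power)
    return {name: sum(p for f, p in zip(freqs, power) if lo <= f <= hi) / total
            for name, (lo, hi) in bands.items()}


if __name__ == "__main__":
    fs = 256
    # Hypothetical 1-s epoch (256 samples) containing a 10 Hz (alpha) oscillation
    epoch = [math.sin(2 * math.pi * 10 * t / fs) for t in range(256)]
    print(relative_band_power(epoch, fs=fs))
```

Zero-padding to 512 points halves the bin spacing to 0.5 Hz but does not add spectral information; the Hamming taper keeps leakage from a strong alpha rhythm out of the neighboring bands.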

Asymmetry features

As reported in the literature, asymmetric activity in frontal EEG can be used to measure affective tendency. Hence, the asymmetry between the left and right hemispheres was calculated for each of the features above (Equation 4):

where $F_L$ and $F_R$ are the feature vectors for the left and right hemispheres, respectively.
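Because the exact form of Equation 4 is not reproduced in the text, the sketch below shows two common asymmetry definitions, the difference F_L - F_R and the normalized difference (F_L - F_R) / (F_L + F_R); either may differ from the authors' Equation 4. It assumes per-channel feature vectors with the same feature order and pairs the left (odd-numbered) and right (even-numbered) frontal electrodes used in this study. Function names are hypothetical.

```python
# Left/right frontal electrode pairs used in this study (odd = left, even = right).
PAIRS = [("Fp1", "Fp2"), ("F3", "F4")]


def asymmetry(f_left, f_right, normalized=False):
    """Element-wise asymmetry of two feature vectors.

    normalized=False: F_L - F_R
    normalized=True:  (F_L - F_R) / (F_L + F_R); assumes positive features such as powers
    """
    if normalized:
        return [(fl - fr) / (fl + fr) for fl, fr in zip(f_left, f_right)]
    return [fl - fr for fl, fr in zip(f_left, f_right)]


def frontal_asymmetry(features, normalized=False):
    """features: dict mapping channel name -> feature vector (same feature order)."""
    return {f"{left}-{right}": asymmetry(features[left], features[right], normalized)
            for left, right in PAIRS}


if __name__ == "__main__":
    demo = {"Fp1": [2.0, 1.0], "Fp2": [1.0, 1.0], "F3": [3.0, 1.0], "F4": [1.0, 3.0]}
    print(frontal_asymmetry(demo))
    print(frontal_asymmetry(demo, normalized=True))
```

The normalized form removes overall amplitude differences between participants, which matters when features from different people are pooled.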

Concept drift in feature space

Several studies have shown that emotions are time-varying and are affected by factors both external and internal to the body. This causes problems because a classifier's predictions become less accurate over time. Because the data are expected to change over time, such problems are traditionally treated as online learning problems. Dynamic or online algorithms can track time-varying behavior and are more robust in dynamically changing or non-stationary environments. Figure 3 shows the alpha energy extracted from one subject during the experiment; as can be seen, the extracted feature varies over time (Asghar, 2019). In the next section, we propose an adaptive classifier that learns this concept drift during the experiment, using supervised techniques designed to account for such conceptual changes.

3. Adaptive ensemble classification

This section describes the proposed algorithm. The algorithm simultaneously provides the following properties, which have not been considered in previous studies of emotion classification:

1) The algorithm is trained online for each group, whereas most methods are trained offline; conventional methods do not consider changes in data behavior.

2) The data-driven algorithm shows higher accuracy and greater compatibility than the functional group study.

3) Weight adaptation during classifier training (i): the algorithm dynamically adapts the classifier weights based on accuracy, instead of assigning weights based only on the highest network accuracy, which can misrepresent network performance.

4) Adaptive classifier parameters (ii): faster adaptation is used as a criterion for the classifier.

5) A dynamic model in the training phase (iii): new classifiers are added to the network, and classifiers that no longer contribute are removed.

6) The model is pruned based on the lowest network accuracy, so previously accurate classifiers are retained, which allows training with unusual data.

7) Excessive control can affect the evaluation of the model in the training phase.

This study thus provides a method for fine-tuning the classifiers that effectively considers network stability. Fine-tuning is performed by combining the outputs of the classifiers and adjusting the classifiers adaptively.

The algorithm is an ensemble designed around a dynamic process, and its approach is based on the sliding window model: the window has a fixed size, and when a new instance arrives, it is added to the window and the oldest instance is removed. In this way, the training data are continually refreshed, with data that are no longer satisfactory removed and new data added. Table 1 presents the proposed online ensemble classification method.
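A sliding-window ensemble of the kind described above can be sketched as follows. This is a minimal, hypothetical sketch, not the algorithm in Table 1: it uses nearest-centroid experts as stand-ins for the study's neural-network experts, trains a new expert on the window every `chunk` instances, weights each expert by its accuracy on the current window, and interprets the life factor as an exponential decay (1 - life_factor)^age on expert weights (the text says only that it controls the obsolescence of old data). The defaults `max_experts=12` and `life_factor=0.1` mirror the best values reported in the Results; `window_size=20` and `chunk=10` are illustrative.

```python
from collections import deque


class NearestCentroid:
    """Hypothetical stand-in expert: predicts the class with the closest mean vector."""

    def __init__(self, data):
        sums, counts = {}, {}
        for x, y in data:
            s = sums.setdefault(y, [0.0] * len(x))
            for j, v in enumerate(x):
                s[j] += v
            counts[y] = counts.get(y, 0) + 1
        self.centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}

    def predict(self, x):
        def dist(c):
            return sum((a - b) ** 2 for a, b in zip(x, self.centroids[c]))
        return min(self.centroids, key=dist)


class SlidingWindowEnsemble:
    """Hypothetical sliding-window ensemble with accuracy-based, decaying expert weights."""

    def __init__(self, window_size=20, chunk=10, max_experts=12, life_factor=0.1):
        self.window = deque(maxlen=window_size)  # appending drops the oldest instance
        self.chunk = chunk
        self.max_experts = max_experts
        self.life = life_factor
        self.experts = []  # each entry: [model, age, weight]
        self.seen = 0

    def _window_accuracy(self, model):
        return sum(model.predict(x) == y for x, y in self.window) / len(self.window)

    def predict(self, x):
        """Weighted vote of the current experts (None before the first expert exists)."""
        if not self.experts:
            return None
        votes = {}
        for model, _, weight in self.experts:
            label = model.predict(x)
            votes[label] = votes.get(label, 0.0) + weight
        return max(votes, key=votes.get)

    def partial_fit(self, x, y):
        """Add one labeled instance; every `chunk` instances, add, reweight, and prune experts."""
        self.window.append((x, y))
        self.seen += 1
        if self.seen % self.chunk:
            return
        self.experts.append([NearestCentroid(self.window), 0, 0.0])
        for expert in self.experts:
            # Accuracy on the current window, decayed by age (assumed role of the life factor)
            expert[2] = self._window_accuracy(expert[0]) * (1 - self.life) ** expert[1]
            expert[1] += 1
        if len(self.experts) > self.max_experts:
            # Prune the expert with the lowest weight
            worst = min(range(len(self.experts)), key=lambda i: self.experts[i][2])
            del self.experts[worst]
```

In a test-then-train loop, `predict` is called on each new feature vector before `partial_fit` receives its label. Experts trained before a change in the data stop matching the current window, so their weights fall toward zero and they become the first candidates for pruning.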

4. Results

EEG sub-band selection

Table 2 presents the accuracy of the proposed classifier for different EEG sub-bands across personality groups. The accuracy was obtained by 10-fold cross-validation. The highest average accuracy was obtained for the alpha sub-band.
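The 10-fold cross-validation can be sketched as follows. The sketch uses contiguous, unshuffled folds, which is an assumption: the text does not state how the folds were formed. With overlapping 2-s windows, contiguous folds keep neighboring windows out of both the training and test sets except at fold boundaries. `fit` and `predict` are hypothetical callables supplied by the caller, and the sketch assumes at least as many samples as folds.

```python
def kfold_indices(n, k=10):
    """Yield (train, test) index lists for k contiguous folds (no shuffling)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def cross_val_accuracy(fit, predict, X, y, k=10):
    """Mean test accuracy over k folds; fit(X, y) returns a model, predict(model, x) a label."""
    accuracies = []
    for train, test in kfold_indices(len(X), k):
        model = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in test)
        accuracies.append(correct / len(test))
    return sum(accuracies) / k


if __name__ == "__main__":
    for train, test in kfold_indices(23, 10):
        print(len(train), test)
```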

Trend features

As the classification results show, the accuracy obtained with the previous features was rarely acceptable. Therefore, we examined more conceptual features to improve the results. Given the goal and scope of the experiment, affective responses are expected to change gradually during the experiment, whereas the extracted features show irregular rises and falls that may be unrelated to the emotional task. Because affective responses are time-dependent, the extracted features are also time-dependent, so the trend of a feature can serve as a new time-dependent feature that may improve classification. To compute the feature trends, linear and exponential regression models were fitted to the features. Table 2 presents the average classification accuracy for the extracted trend features. Principal component analysis (PCA) was applied to the feature space.
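The trend features can be sketched as least-squares fits over a feature's time series. This sketch assumes that the slope of the linear model and the rate of the exponential model are used as the trend features, and that the exponential model is fitted by linear regression on the logarithm of the feature values (which must therefore be positive); the text does not specify the fitting procedure. Function names are hypothetical.

```python
import math


def linear_fit(ys):
    """Least-squares fit y = a + b*t for t = 0..n-1 (n >= 2); returns (a, b)."""
    n = len(ys)
    t_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(ys))
    b = sxy / sxx
    return y_mean - b * t_mean, b


def exponential_fit(ys):
    """Fit y = A*exp(c*t) by linear regression on ln(y); requires all y > 0."""
    log_a, c = linear_fit([math.log(y) for y in ys])
    return math.exp(log_a), c


def trend_features(feature_series):
    """Return (linear slope, exponential rate) of one feature's time series."""
    _, slope = linear_fit(feature_series)
    _, rate = exponential_fit(feature_series)
    return slope, rate


if __name__ == "__main__":
    # Hypothetical alpha-energy values from consecutive windows
    series = [1.0, 1.2, 1.1, 1.4, 1.6, 1.5, 1.9]
    print(trend_features(series))
```

Because each fit summarizes a whole sequence of windows with one number, short-lived rises and falls unrelated to the task have less influence than in the raw per-window features.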

Activation function

As Table 3 shows, different activation functions yield different results. We tested the activation functions most commonly used in emotion recognition studies. The sigmoid gave the highest accuracy (87.96%) and the sine the lowest (69.63%); the sigmoid was therefore selected.

Effect of window size

Table 4 presents the average accuracy obtained for windows of different lengths. Because window length has a significant effect on accuracy, finding the right length can improve network results. Very short (1) and very long (60) windows lead to low accuracy (<68%), and the best window length is 5. This is most likely because, given the duration and type of the stimuli, a window of this length correctly captures the signal changes.

Effect of the maximum number of experts

Table 5 presents the average accuracy obtained for different maximum numbers of experts. Given the proposed structure, the maximum number of experts can drastically change the results of the network. Increasing the number of experts from 1 to 12 increases accuracy, whereas increasing it further from 12 to 30 decreases accuracy. The best value is 12, which gives the maximum average accuracy (87.96%). This is most likely because too few experts reduce the amount of training information, whereas too many experts cause the network to overfit.

Effect of hidden layers

As shown in Table 6, increasing the number of hidden layers initially increases the accuracy of the classifier, because the hidden layers draw the boundaries between classes. However, adding more hidden layers does not necessarily improve the classification results and, in some cases, makes the network too complex. Finding the optimal number of hidden layers can therefore improve the final network results. The highest accuracy (87.96%) was obtained with 16 hidden layers.

Effect of life factor

The life factor controls how quickly old data become obsolete in the proposed network structure. Its value ranges from 0 to 1. To investigate the effect of this parameter on network accuracy more closely, we increased it from 0 to 1 in steps of 0.1. As can be seen in Table 7, the maximum average accuracy (87.96%) was obtained with a life factor of 0.1.

Comparison with other similar networks

Finally, after the parameters of the classifier structure were determined, the proposed network was compared with several common networks. As Table 8 shows, the best-performing of the compared networks was the hierarchical extreme learning machine (ELM) online ensemble (77.95%), whereas the proposed network achieved a higher accuracy of 87.96%. To evaluate the generalization of the proposed network, its loss and accuracy were examined (Figure 4).

Figure 4 shows the loss and accuracy of the proposed network on the training and validation data; the network fits the validation data well. The maximum number of epochs was 200.

Computational efficiency

The computational efficiency of the proposed algorithm was also compared with that of other methods. Figure 5 shows the system used and the network execution times. The online ensemble network one had the longest execution time, and the self-adaptive online ensemble network had the shortest.

Recognizing emotions with machine learning techniques helps to better understand internal processes and brain function. The results of this study show that EEG signal changes are a good indicator of a person's emotional state, and they provide further insight into, and implications for, HM interfaces.

5. Conclusion

In this study, a new algorithm based on ensemble classifiers was presented. The network parameters were examined over a range of values, and their optimal values were determined. Based on Eysenck's personality model, participants were categorized into three major groups: depressive, manic, and normal. Two types of emotional stimuli were chosen, pictures and movie clips. To achieve a synergistic effect, each movie clip was shown after pictures of the same emotional category. Participants were presented, in random order, with three types of emotional stimuli that were either consistent or in contrast with their personality type. The best accuracy was achieved with the alpha sub-band and with the feature trends, most likely because of the personality traits of each group. Compared with common classifiers, the proposed classifier achieved the highest accuracy (87.96%). Future studies could use more recording electrodes and types of stimuli other than pictures and clips. Online training methods could also make the classifier more efficient for HM interfaces in real-time applications. In addition, classification could be performed with networks that allow real-time analysis, such as dynamic Bayesian networks. Finally, given the acceptable results obtained in this study, using fewer electrodes is recommended.

Ethical Considerations

Compliance with ethical guidelines

All ethical principles were considered in this article. The participants were informed of the purpose of the research and its implementation stages. They were also assured of the confidentiality of their information, were free to leave the study whenever they wished, and could receive the research results if desired. Written consent was obtained from the participants. The principles of the Declaration of Helsinki were also observed.

Funding

The paper was extracted from the PhD dissertation of Mohammad Saleh Khajeh Hosseini, approved by the Department of Biomedical Engineering, Faculty of Medical Sciences and Technologies, Science and Research Branch, Islamic Azad University.

Authors' contributions

All authors equally contributed to preparing this article.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors are grateful to all participants.

References

Alarcão, S. M., & Fonseca, M. J. (2017). Emotions recognition using EEG signals: A survey. IEEE Transactions on Affective Computing, 10(3), 374–393. [Link]

Asghar, M. A., Khan, M. J., Fawad, Amin, Y., Rizwan, M., & Rahman, M., et al. (2019). EEG-based multi-modal emotion recognition using bag of deep features: An optimal feature selection approach. Sensors, 19(23), 5218. [DOI:10.3390/s19235218]

Ashtiyani, M., Asadi, S., & Birgani, P. M. (2008). ICA-based EEG classification using fuzzy C-mean algorithm. Paper presented at: 3rd International Conference on Information and Communication Technologies: From Theory to Applications, Damascus, Syria, 23 May 2008. [DOI:10.1109/ICTTA.2008.4530056]

Ashtiyani, M., Asadi, S., Birgani, P. M., & Khordechi, E. A. (2008). EEG classification using neural networks and independent component analysis. Paper presented at: 4th Kuala Lumpur International Conference on Biomedical Engineering 2008, 25–28 June 2008, Kuala Lumpur, Malaysia (pp. 179–182). Berlin: Springer. [Link]

Atkinson, J., & Campos, D. (2016). Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Systems with Applications, 47, 35-41. [DOI:10.1016/j.eswa.2015.10.049]

Brunner, C., Fischer, A., Luig, K., & Thies, T. (2012). Pairwise support vector machines and their application to large scale problems. The Journal of Machine Learning Research, 13(1), 2279-2292. [Link]

Craik, A., He, Y., & Contreras-Vidal, J. L. (2019). Deep learning for electroencephalogram (EEG) classification tasks: A review. Journal of Neural Engineering, 16(3), 031001. [DOI:10.1088/1741-2552/ab0ab5] [PMID]

Einalou, Z., Maghooli, K., Setarehdan, S. K., & Akin, A. (2017). Graph theoretical approach to functional connectivity in prefrontal cortex via fNIRS. Neurophotonics, 4(4), 041407. [DOI:10.1117/1.NPh.4.4.041407] [PMID]

Eslamieh, N., & Einalou, Z. (2018). Investigation of functional brain connectivity by electroencephalogram signals using Data Mining Technique. Journal of Cognitive Science, 19(4), 551-576. [Link]

Fürbass, F., Kural, M. A., Gritsch, G., Hartmann, M., Kluge, T., & Beniczky, S. (2020). An artificial intelligence-based EEG algorithm for detection of epileptiform EEG discharges: Validation against the diagnostic gold standard. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology, 131(6), 1174–1179. [DOI:10.1016/j.clinph.2020.02.032] [PMID]

Gao, Q. W., Wang, C. H., Wang, Z., Song, X. L., Dong, E. Z., & Song, Y. (2020). EEG based emotion recognition using fusion feature extraction method. Multimedia Tools and Applications, 79, 27057–27074. [DOI:10.1007/s11042-020-09354-y]

Greco, A., Valenza, G., Citi, L., & Scilingo, E. P. (2016). Arousal and Valence Recognition of Affective Sounds based on Electrodermal Activity. IEEE Sensors Journal, 17(3), 716–725. [DOI:10.1109/JSEN.2016.2623677]

Guyon-Harris, K. L., Carell, R., DeVlieger, S., Humphreys, K. L., & Huth-Bocks, A. C. (2021). The emotional tone of child descriptions during pregnancy is associated with later parenting. Infant Mental Health Journal, 42(5), 731–739. [PMID]

Hanjalic, A., & Xu, L. Q. (2005). Affective video content representation and modeling. IEEE Transactions on Multimedia, 7(1), 143-154. [DOI:10.1109/TMM.2004.840618]

Jirayucharoensak, S., Pan-Ngum, S., & Israsena, P. (2014). EEG-based emotion recognition using deep learning network with principal component based covariate shift adaptation. The Scientific World Journal, 2014. [DOI:10.1155/2014/627892]

Mehmood, R. M., & Lee, H. J. (2015). Emotion classification of EEG brain signal using SVM and KNN. Paper presented at: 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June 2015 - 03 July 2015. [DOI:10.1109/ICMEW.2015.7169786]

Sakalle, A., Tomar, P., Bhardwaj, H., Acharya, D., & Bhardwaj, A. (2021). A LSTM based deep learning network for recognizing emotions using wireless brainwave driven system. Expert Systems with Applications, 173, 114516. [DOI:10.1016/j.eswa.2020.114516]

Sammler, D., Grigutsch, M., Fritz, T., & Koelsch, S. (2007). Music and emotion: Electrophysiological correlates of the processing of pleasant and unpleasant music. Psychophysiology, 44(2), 293–304. [DOI:10.1111/j.1469-8986.2007.00497.x] [PMID]

Seifi, S., Nowshiravan Rahatabad, F., & Einalou, Z. (2018). Detection of different levels of multiple sclerosis by assessing nonlinear characteristics of posture. International Clinical Neuroscience Journal, 5(4), 115-120. [DOI:10.15171/icnj.2018.23]

Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., & Liao, D., et al. (2018). A review of emotion recognition using physiological signals. Sensors (Basel), 18(7), 2074. [PMID]

Subasi, A., Tuncer, T., Dogan, S., Tanko, D., & Sakoglu, U. (2021). EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier. Biomedical Signal Processing and Control, 68, 102648. [DOI:10.1016/j.bspc.2021.102648]

Tyng, C. M., Amin, H. U., Saad, M. N. M., & Malik, A. S. (2017). The influences of emotion on learning and memory. Frontiers in Psychology, 8, 1454. [PMID]

Verma, G. K., Tiwary, U. S., & Agrawal, S. (2011). Multi-algorithm fusion for speech emotion recognition. In: A. Abraham, J. L. Mauri, J. F. Buford, J. Suzuki, & S. M. Thampi (Eds.), Advances in computing and communications. Berlin: Springer. [Link]

Wang, Y., Zhang, L., Xia, P., Wang, P., Chen, X., & Du, L., et al. (2022). EEG-based emotion recognition using a 2D CNN with different kernels. Bioengineering, 9(6), 231. [DOI:10.3390/bioengineering9060231]

Wu, W., Zhang, Y., Jiang, J., Lucas, M. V., Fonzo, G. A., & Rolle, C. E., et al. (2020). An electroencephalographic signature predicts antidepressant response in major depression. Nature Biotechnology, 38(4), 439-447. [Link]

Yin, Z., Zhao, M., Wang, Y., Yang, J., & Zhang, J. (2017). Recognition of emotions using multimodal physiological signals and an ensemble deep learning model. Computer Methods and Programs in Biomedicine, 140, 93-110. [PMID]

Yoon, H. J., & Chung, S. Y. (2013). EEG-based emotion estimation using Bayesian weighted-log-posterior function and perceptron convergence algorithm. Computers in Biology and Medicine, 43(12), 2230-2237. [DOI:10.1016/j.compbiomed.2013.10.017]

Zhang, Q., & Lee, M. (2012). Emotion development system by interacting with human EEG and natural scene understanding. Cognitive Systems Research, 14(1), 37-49. [DOI:10.1016/j.cogsys.2010.12.012]

Type of Study: Original

Subject: Cognitive Neuroscience

Received: 2021/11/26 | Accepted: 2023/06/22 | Published: 2023/09/1

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.