Volume 15, Issue 6 (November & December 2024)

BCN 2024, 15(6): 795-806

Soltani Dehaghani N, Maess B, Khosrowabadi R, Zarei M, Braeutigam S. Comparing Face and Object Processing in Perception and Recognition. BCN 2024; 15 (6) :795-806

URL: http://bcn.iums.ac.ir/article-1-1830-en.html

Narjes Soltani Dehaghani1, Burkhard Maess2, Reza Khosrowabadi1, Mojtaba Zarei *3, Sven Braeutigam4

1- Institute for Cognitive and Brain Sciences, Shahid Beheshti University, Tehran, Iran.

2- Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany.

3- Institute of Medical Sciences and Technology, Shahid Beheshti University, Tehran, Iran.

4- Oxford Centre for Human Brain Activity, University of Oxford, Oxford, United Kingdom.

1. Introduction

The face is a uniquely dominant visual stimulus for several reasons: faces are processed quickly, convey a great deal of information at a glance, and are extremely familiar to humans (Rossion, 2014).

There are many ways to study how brain activity changes in response to facial stimuli. One of the most prevalent approaches is to analyze event-related activity, a robust measure of brain responses time-locked to specific events (Rossion, 2014; Besson et al., 2017). A well-established modality for recording event-related activity is magnetoencephalography (MEG), whose high temporal and acceptable spatial resolution make it possible to track momentary changes in brain dynamics (Singh, 2014).

Studies of the electrophysiological correlates of face processing have repeatedly found a face-selective component around 170 ms after stimulus onset (Yovel, 2016). In MEG, this component is known as the M170 (the N170 in EEG). Objects also evoke a negative event-related component, the N1 (M1 in MEG), at a latency similar to that of the M170 (Rossion & Caharel, 2011). Compared with the N1, however, the M170 has a larger peak and is most conspicuous over occipitotemporal regions of the brain (Rossion & Jacques, 2008). The M170 is believed to reflect the early structural encoding of faces (Bentin & Deouell, 2000; Eimer, 2000) and sometimes even carries information about facial identity (Jacques & Rossion, 2006; Vizioli et al., 2010).

An earlier component, the M100 (P1 in EEG), peaks about 100 ms after stimulus onset (Linkenkaer-Hansen et al., 1998; Rivolta et al., 2012). The M100 reflects low-level stimulus properties such as luminance and size (Negrini et al., 2017) and is also an important attentional component (Luck et al., 1990; Mangun & Hillyard, 1995). The M100 is sometimes considered face-selective, and some studies have reported a larger M100 peak for faces than for objects (Goffaux et al., 2003; Itier & Taylor, 2004). Other studies, however, have concluded that the M100 is not face-selective (Boutsen et al., 2006).

Although previous studies have highlighted the importance of the M100 and M170 components in face and object processing, it remains controversial whether these components show significant effects at different levels of face processing, and when such effects begin and peak. The onset time of an effect matters because it indicates how quickly the brain distinguishes between two kinds of stimuli, and the time at which the difference between two conditions is largest indicates when they are maximally separable. In the present study, we examined the M100 and M170 components at the perception and recognition levels of face and object processing. Moreover, we did not restrict the face stimuli to human faces but also included faces of another species (monkeys) to look for possible differences at each processing level.

2. Materials and Methods

Study participants

Twenty-two healthy individuals (mean age 24±5 years; 21 males; 20 right-handed) participated in the study.

Experimental procedure and data acquisition

The images were static grey-scale pictures acquired from the FERET open-source database (Phillips et al., 1998). Each image was displayed for 200 ms. All images were standardized for size (subtending 8×6 degrees of visual angle) and luminance (42±8 cd/m²). The images were projected onto a screen placed 90 cm in front of the participants' eyes while they sat under the MEG helmet. Three types of stimuli were used: human faces, monkey faces, and motorbikes. In each trial, two images of the same type were presented in sequence, the second appearing after a delay of 1.2±0.3 s following the first. Participants had to decide, within a 1-s response window, whether the second image was identical to the first. Thus, each trial contained a perception level (the first stimulus) and a recognition level (the second stimulus). The two images in a trial were always of the same type, and stimuli were presented in randomized order. We included 48 trials per category; in half of them the first image was repeated as the second image, and in the other half it was not.
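One way to picture the trial structure is the following Python sketch, which builds a shuffled list of 48 trials per category, half of them repeats. It is a hypothetical reconstruction, not the authors' presentation script: the image-pool size (`n_images_per_category`), the uniform distribution of the 1.2±0.3 s delay, and shuffling trials across categories are all assumptions.

```python
import random
from dataclasses import dataclass

# Counts and timing from the text; pool size, uniform jitter and shuffling are assumptions.
CATEGORIES = ("human_face", "monkey_face", "motorbike")
TRIALS_PER_CATEGORY = 48
MEAN_DELAY_S, JITTER_S = 1.2, 0.3  # delay before the second image: 1.2 ± 0.3 s


@dataclass
class Trial:
    category: str
    first_image: int   # index into a hypothetical image pool
    second_image: int
    is_repeat: bool    # True -> the correct answer is "same"
    delay_s: float


def make_trials(n_images_per_category=20, seed=0):
    """Build a shuffled trial list: 48 trials per category, half of them repeats."""
    rng = random.Random(seed)
    trials = []
    for category in CATEGORIES:
        for i in range(TRIALS_PER_CATEGORY):
            is_repeat = i < TRIALS_PER_CATEGORY // 2
            first = rng.randrange(n_images_per_category)
            if is_repeat:
                second = first
            else:
                # pick a different image from the same category
                second = rng.choice([k for k in range(n_images_per_category) if k != first])
            delay = rng.uniform(MEAN_DELAY_S - JITTER_S, MEAN_DELAY_S + JITTER_S)
            trials.append(Trial(category, first, second, is_repeat, delay))
    rng.shuffle(trials)  # randomize presentation order (assumed: across categories)
    return trials


if __name__ == "__main__":
    for trial in make_trials()[:3]:
        print(trial)
```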

While the participants performed the task, neuromagnetic responses were recorded with the VectorView™ MEG system of the Brain Research Group at the Oxford Centre for Human Brain Activity (OHBA). The sampling frequency was 1000 Hz (0.03–330 Hz bandwidth).

Data preprocessing

Initial preprocessing used the signal-space separation (SSS) noise-reduction method implemented in MEGIN/Elekta Neuromag MaxFilter (version 2.2.15, MEGIN, Helsinki); all later analyses were performed with the FieldTrip toolbox for EEG/MEG analysis (Oostenveld et al., 2011). The data were segmented into epochs from 300 ms before to 1000 ms after stimulus onset. Before filtering, the trials were padded to a length of 10 s. High-pass and low-pass filters with cutoffs of 0.5 and 150 Hz, respectively, were then applied as single-pass, zero-phase windowed-sinc FIR filters with a Kaiser window (maximum passband deviation 0.001). The filter orders were 93 for the low-pass and 3625 for the high-pass filter. To reduce heartbeat and eye-movement artifacts, we ran independent component analysis and removed the contaminated components.
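The filtering step can be sketched in Python with SciPy's `signal.kaiserord` and `signal.firwin`, as below. This is an illustration, not a reproduction of FieldTrip's implementation: the transition widths (1 Hz for the high-pass, 37.5 Hz for the low-pass) and the reflection padding are assumptions, so the resulting tap counts need not match the filter orders reported above. A maximum deviation of 0.001 corresponds to 60 dB in `kaiserord`, and convolving with `mode="same"` centres the output of an odd-length symmetric filter, which removes its group delay and gives a zero-phase result in a single pass.

```python
import numpy as np
from scipy import signal

FS = 1000.0                          # sampling rate (Hz), as reported
MAX_DEV = 0.001                      # maximum deviation, as reported
ATTEN_DB = -20 * np.log10(MAX_DEV)   # 60 dB, the ripple spec expected by kaiserord


def kaiser_fir(cutoff_hz, width_hz, highpass):
    """Kaiser-window windowed-sinc FIR; width_hz is an ASSUMED transition width."""
    numtaps, beta = signal.kaiserord(ATTEN_DB, width_hz / (FS / 2))
    numtaps |= 1  # odd length: required for a high-pass, gives an integer group delay
    return signal.firwin(numtaps, cutoff_hz, window=("kaiser", beta),
                         pass_zero=not highpass, fs=FS)


def zero_phase_filter(x, taps, padded_len_s=10.0):
    """Pad x to padded_len_s seconds, filter in a single pass, compensate the delay."""
    n_pad = max(int(round(padded_len_s * FS)) - x.size, 0)
    left = n_pad // 2
    xp = np.pad(x, (left, n_pad - left), mode="reflect")  # padding type is an assumption
    # mode="same" keeps the centre of the full convolution, which cancels the
    # (numtaps - 1) / 2 sample delay of a symmetric odd-length filter (zero phase).
    y = signal.convolve(xp, taps, mode="same")
    return y[left:left + x.size]


# One epoch from -300 to +1000 ms at 1000 Hz (1301 samples)
t = np.arange(-300, 1001) / FS
hp = kaiser_fir(0.5, 1.0, highpass=True)       # 1 Hz transition width: assumption
lp = kaiser_fir(150.0, 37.5, highpass=False)   # 37.5 Hz transition width: assumption

if __name__ == "__main__":
    x = 5.0 + np.sin(2 * np.pi * 10 * t)       # DC offset plus a 10 Hz oscillation
    y = zero_phase_filter(zero_phase_filter(x, hp), lp)
    print(hp.size, lp.size, round(float(y.mean()), 3))
```

Applying the two filters in sequence to a constant offset plus a 10 Hz sine should remove the offset while leaving the oscillation essentially unchanged; the long high-pass kernel is also why padding is needed, since it is longer than a single 1.3-s epoch.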

Data processing and statistical analyses

Analyses were based on the magnetometer channels because, after SSS correction, magnetometer and gradiometer channels carry approximately the same information (Garcés et al., 2017).

We compared several pairs of conditions to contrast face and object processing in perception and recognition. We considered three face categories, namely human faces, monkey faces, and general faces (human and monkey faces combined), and compared each of them with motorbike stimuli. We also compared human and monkey face processing, and we compared perception and recognition within each stimulus type. For each pair of conditions, we first normalized the data of that pair within each subject and then ran a cluster-based permutation test on the evoked responses of the two conditions using a dependent-samples two-tailed t-test (Maris & Oostenveld, 2007), separately for the M100 and M170 components, to test whether either component differed significantly between the two conditions. We used the Monte Carlo method (Maris & Oostenveld, 2007) and analyzed the interval from 80 to 130 ms after stimulus onset for the M100 and from 130 to 200 ms after stimulus onset for the M170. Temporally and spatially adjacent samples whose t values exceeded the critical threshold for an uncorrected P level of 0.05 were grouped into connected clusters. The cluster-level statistic was the sum of the t values within each cluster, and the maximum cluster-level statistic was used for inference. The test controlled the family-wise error rate at a two-tailed threshold of 0.05, with 1000 draws from the permutation distribution. Because the M100 and M170 were analyzed separately, we applied a Bonferroni correction across the two components, i.e. a cluster was accepted as significant only if P<0.025.
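The core of the cluster-based permutation test can be illustrated with a simplified NumPy/SciPy sketch. It is not FieldTrip's `ft_timelockstatistics`: for brevity it clusters over time only (spatial adjacency between channels is omitted), ranks clusters by their absolute summed t value, and builds the null distribution from the maximum cluster statistic of each permutation. For a within-subject design, swapping the condition labels within a participant is equivalent to flipping the sign of that participant's difference wave. The function names and the `+ 1` in the P value are choices of this sketch.

```python
import numpy as np
from scipy import stats


def paired_t(diff):
    """t value per time sample for within-subject differences (subjects on axis 0)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def find_clusters(t_vals, thresh):
    """Contiguous runs with |t| > thresh and a common sign, as (start, stop, sum of t)."""
    clusters = []
    for sign in (1, -1):
        above = np.append(sign * t_vals > thresh, False)  # sentinel closes a final run
        start = None
        for i, flag in enumerate(above):
            if flag and start is None:
                start = i
            elif not flag and start is not None:
                clusters.append((start, i, float(t_vals[start:i].sum())))
                start = None
    return clusters


def cluster_permutation_test(cond_a, cond_b, n_perm=1000, p_cluster=0.05, seed=0):
    """Monte Carlo cluster-based permutation test over time for two paired conditions.

    cond_a, cond_b: arrays of shape (n_subjects, n_times), e.g. one channel or a
    channel average within the component window. Returns (start, stop, sum_t, p).
    """
    diff = cond_a - cond_b
    n_sub = diff.shape[0]
    thresh = stats.t.ppf(1 - p_cluster / 2, df=n_sub - 1)  # two-tailed, uncorrected
    observed = find_clusters(paired_t(diff), thresh)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for k in range(n_perm):
        # Swapping labels within a subject flips the sign of that subject's difference.
        flips = rng.choice([-1.0, 1.0], size=(n_sub, 1))
        perm_clusters = find_clusters(paired_t(diff * flips), thresh)
        null_max[k] = max((abs(c[2]) for c in perm_clusters), default=0.0)
    return [(s, e, m, (np.sum(null_max >= abs(m)) + 1) / (n_perm + 1))
            for s, e, m in observed]


ALPHA_BONFERRONI = 0.05 / 2  # M100 and M170 windows tested separately
```

A cluster would then be reported only if its P value falls below `ALPHA_BONFERRONI`, mirroring the correction across the two component windows.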

We used bootstrapping to estimate more precisely the onset and peak latencies of the effects found by cluster-based permutation. Following the approach described by Cichy et al. (2014), we created 100 bootstrap samples by resampling participants with replacement, repeated the cluster-based permutation test on each sample with the same configuration as the original analysis, and derived 95% confidence intervals (CIs) for the onset and peak latencies of the significant effects.
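The bootstrap for latency CIs can be sketched as follows: resample participants with replacement, locate the effect in each resample, and take percentiles. To keep the example fast, it simplifies the procedure in ways that should be read as assumptions: each resample only locates the largest supra-threshold cluster instead of re-running the full permutation test, the onset and peak are defined as the first sample and the sample of maximum |t| in that cluster, and the intervals are simple percentile intervals.

```python
import numpy as np
from scipy import stats


def onset_and_peak(diff, times_ms, p_cluster=0.05):
    """Onset = first sample of the largest supra-threshold cluster; peak = its max |t|."""
    n = diff.shape[0]
    t_vals = diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))
    thresh = stats.t.ppf(1 - p_cluster / 2, df=n - 1)
    best = None  # (|cluster sum|, start, stop)
    for sign in (1, -1):
        above = np.append(sign * t_vals > thresh, False)
        start = None
        for i, flag in enumerate(above):
            if flag and start is None:
                start = i
            elif not flag and start is not None:
                mass = abs(float(t_vals[start:i].sum()))
                if best is None or mass > best[0]:
                    best = (mass, start, i)
                start = None
    if best is None:
        return np.nan, np.nan  # no supra-threshold cluster in this resample
    _, start, stop = best
    peak = start + int(np.argmax(np.abs(t_vals[start:stop])))
    return float(times_ms[start]), float(times_ms[peak])


def bootstrap_latency_ci(diff, times_ms, n_boot=100, seed=0):
    """Percentile 95% CIs for onset and peak latency, resampling participants."""
    rng = np.random.default_rng(seed)
    n = diff.shape[0]
    onsets, peaks = [], []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # draw participants with replacement
        onset, peak = onset_and_peak(diff[idx], times_ms)
        onsets.append(onset)
        peaks.append(peak)
    onset_ci = tuple(np.nanpercentile(onsets, [2.5, 97.5]))
    peak_ci = tuple(np.nanpercentile(peaks, [2.5, 97.5]))
    return onset_ci, peak_ci
```

Here `diff` holds one difference time course per participant (shape subjects × samples) and `times_ms` gives the latency of each sample, for example 130 to 200 ms for the M170 window.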

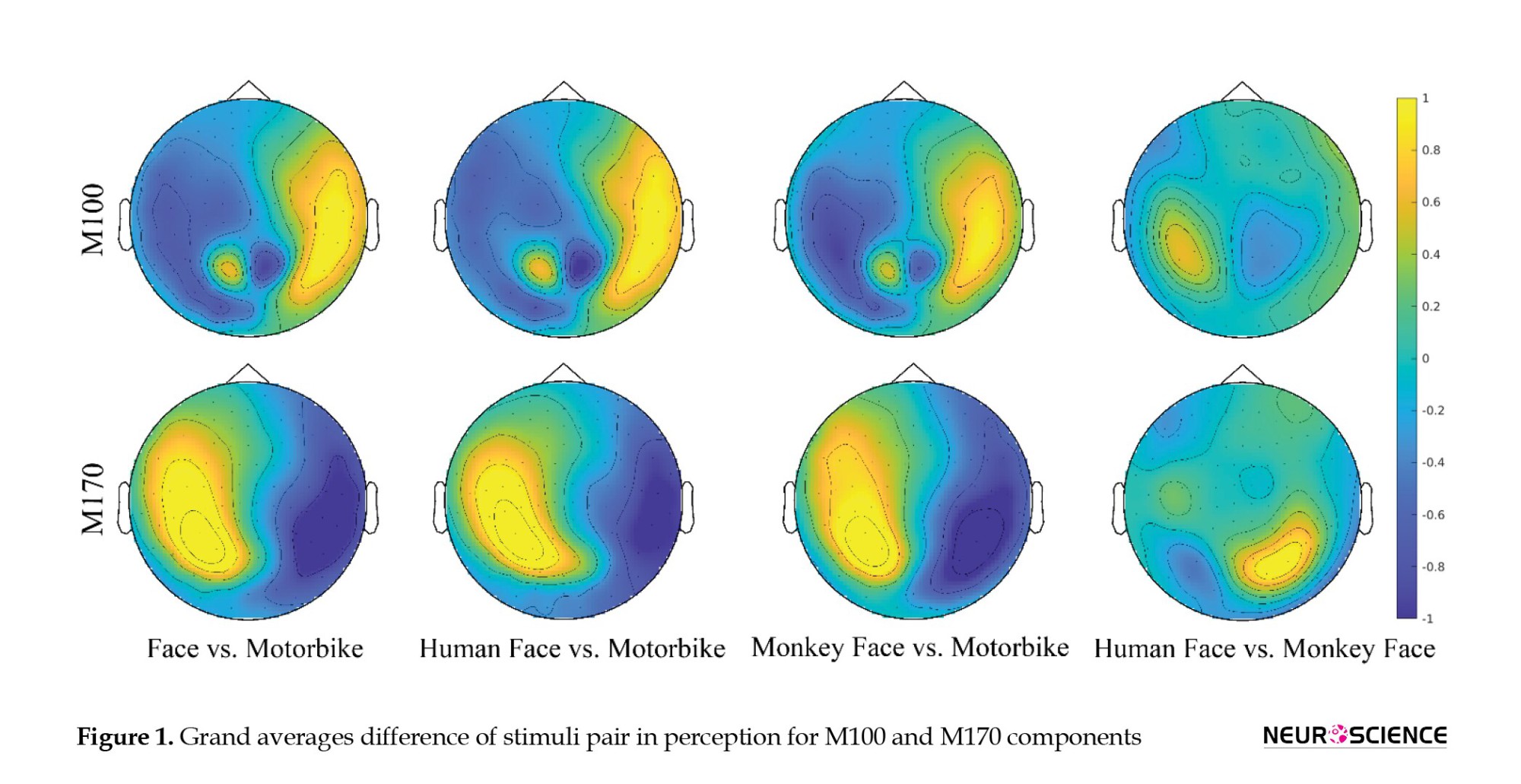

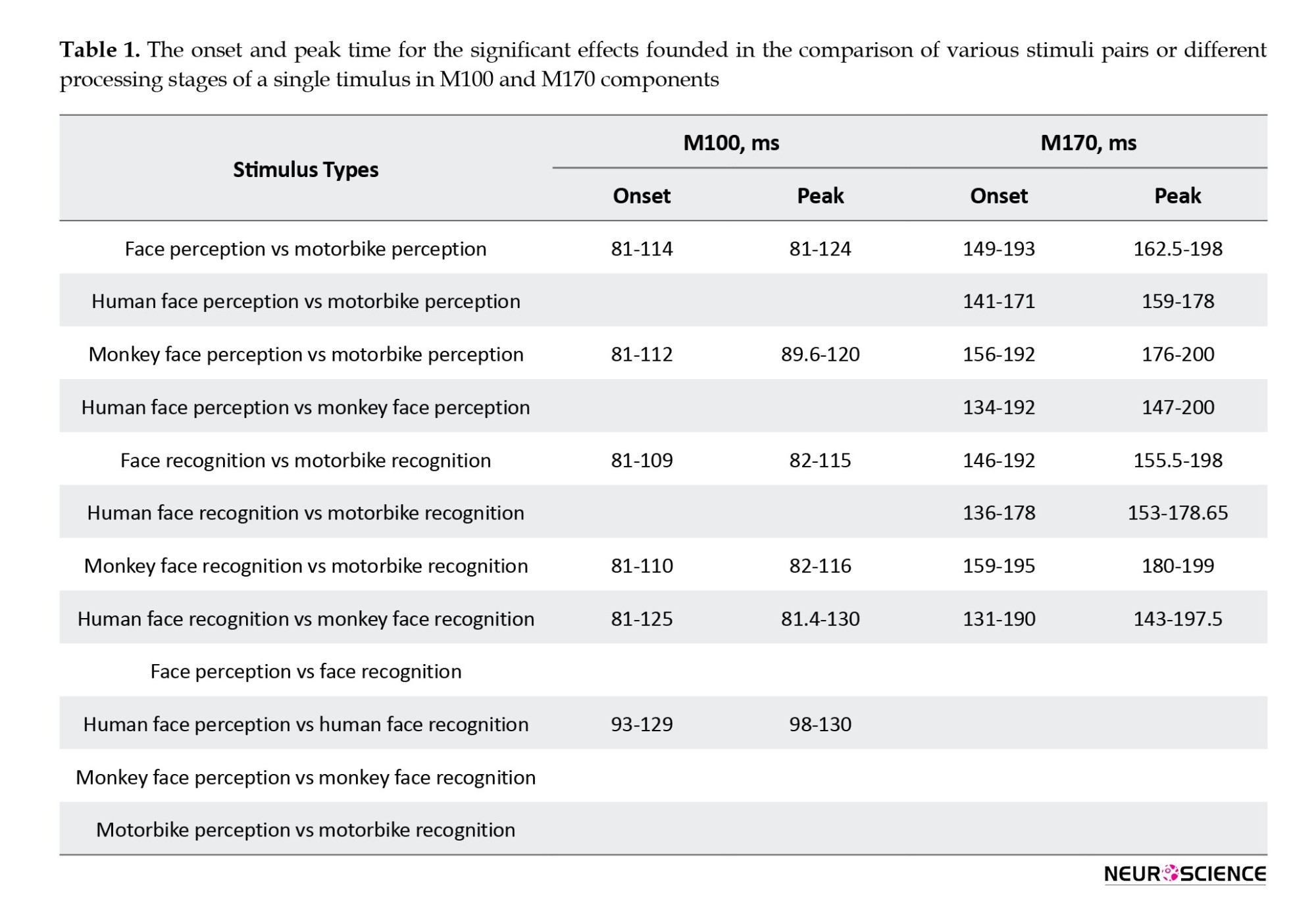

3. Results

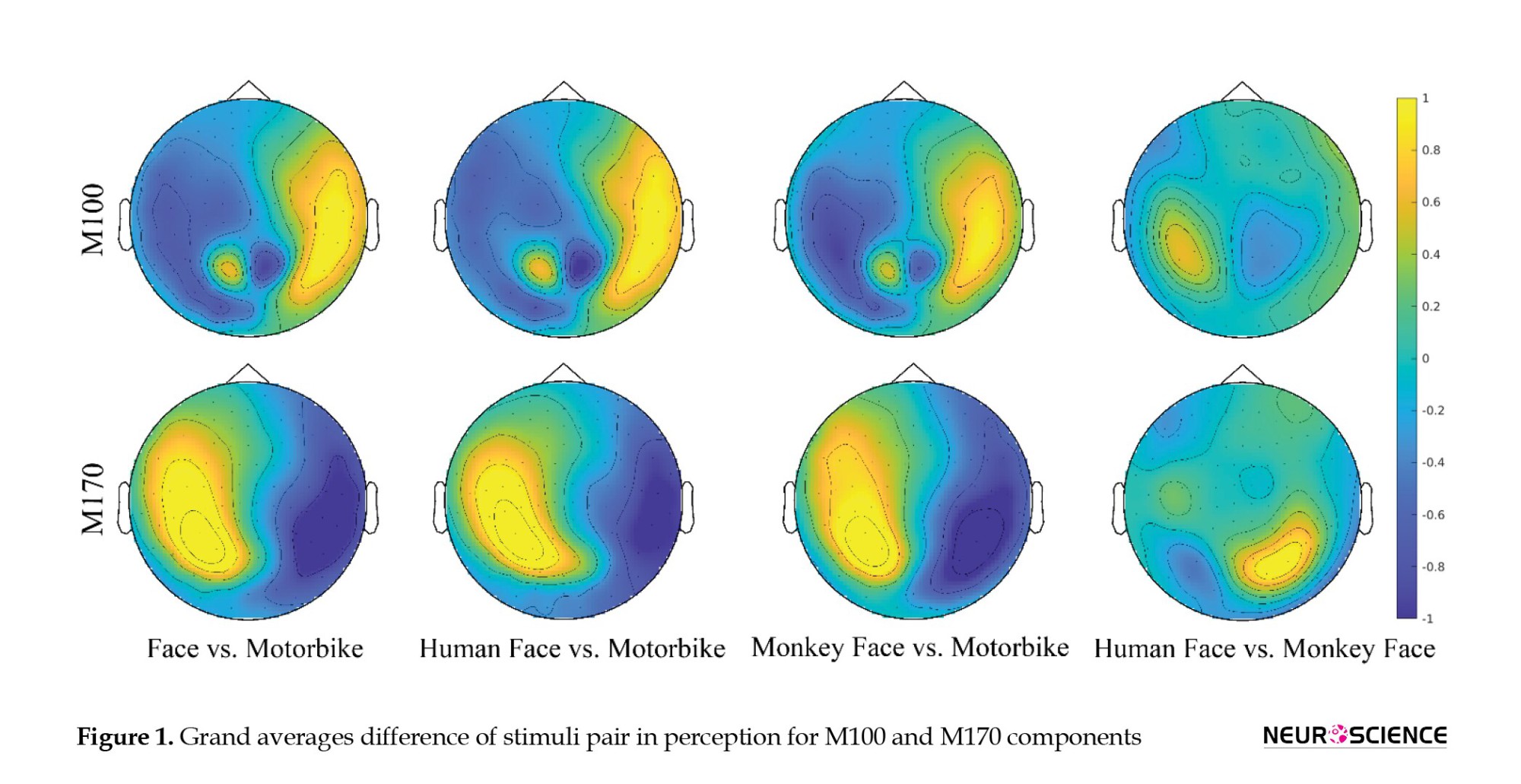

For the M100 component, general face perception differed significantly from motorbike perception from 81 to 130 ms after stimulus onset (P=0.004). The 95% CI for the onset of this effect was from 81 to 114 ms, and that for its peak was from 81 to 124 ms after stimulus onset. Faces and motorbikes also differed significantly in the M170 component (P=0.002), from 153 to 200 ms after stimulus presentation, with 95% CIs from 149 to 193 ms for the onset and from 162.5 to 198 ms for the peak.

Comparing human face perception and monkey face perception separately with motorbike perception gave the following results. For the M100 component, human face and motorbike perception did not differ significantly after Bonferroni correction (P=0.026). Monkey face and motorbike perception, however, differed significantly in the M100 from 90 to 125 ms after stimulus onset (P=0.018), with 95% CIs from 89 to 130 ms for the onset and from 89.6 to 120 ms for the peak. For the M170 component, cluster-based permutation revealed a significant difference (P=0.002) between human face and motorbike perception from 149 to 181 ms after stimulus onset. Bootstrapping gave a 95% CI from 141 to 171 ms for the onset of this effect and from 159 to 178 ms for its peak. For monkey face vs motorbike perception, a significant difference in the M170 appeared from 158 to 200 ms after stimulus onset (P=0.002), with 95% CIs from 156 to 192 ms for the onset and from 176 to 200 ms for the peak.

Human face and monkey face perception did not differ significantly in the M100 (P=0.34). The difference between the two was, however, significant in the M170 (P=0.002), with 95% CIs from 134 to 192 ms for the onset and from 147 to 200 ms for the peak.

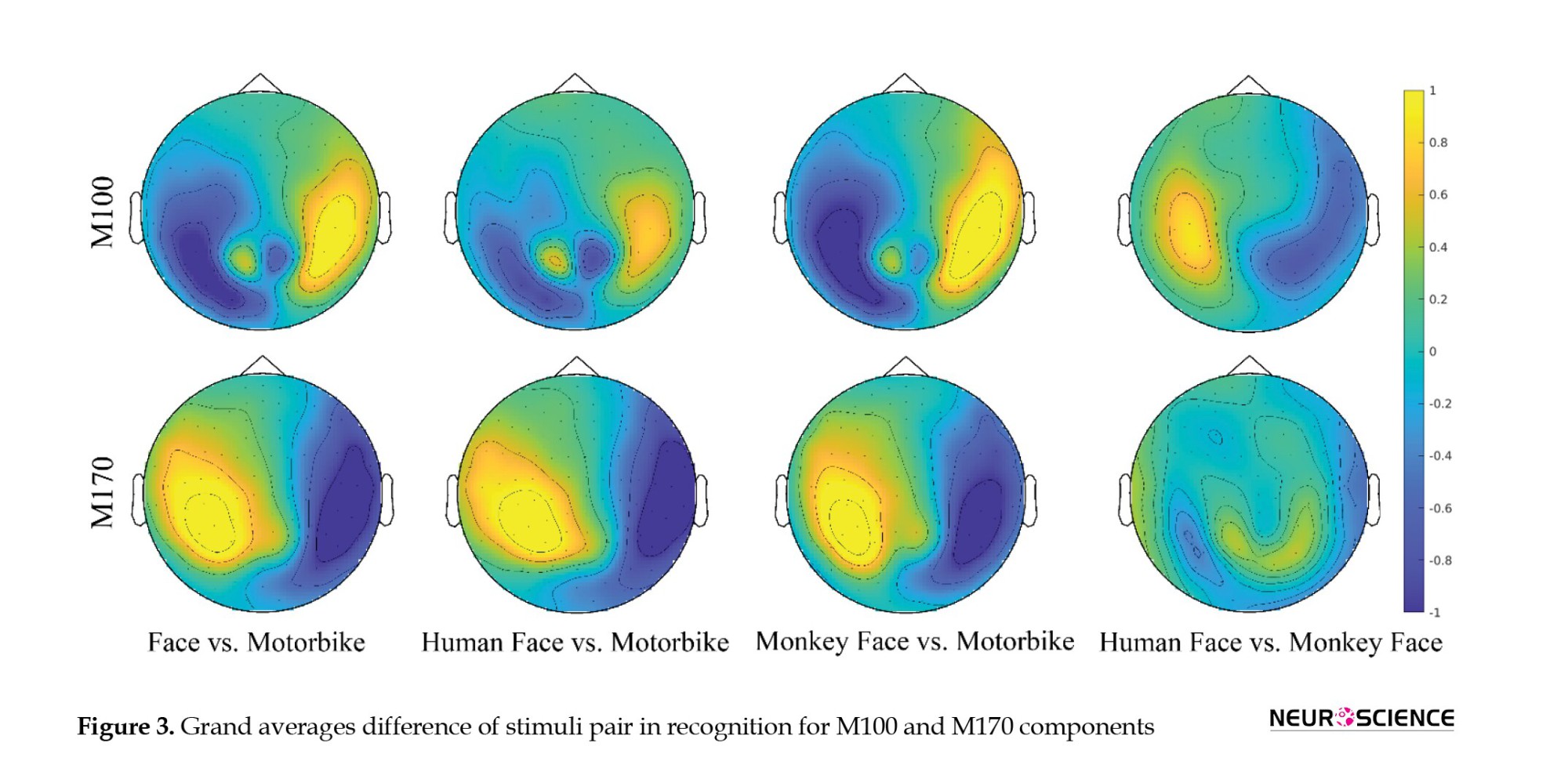

Figure 1 shows topoplots of the grand-average differences for these perception comparisons; the differences are located mainly over occipitotemporal channels.

The time courses for the grand average of the stimuli perception over the occipitotemporal channels are shown in Figure 2.

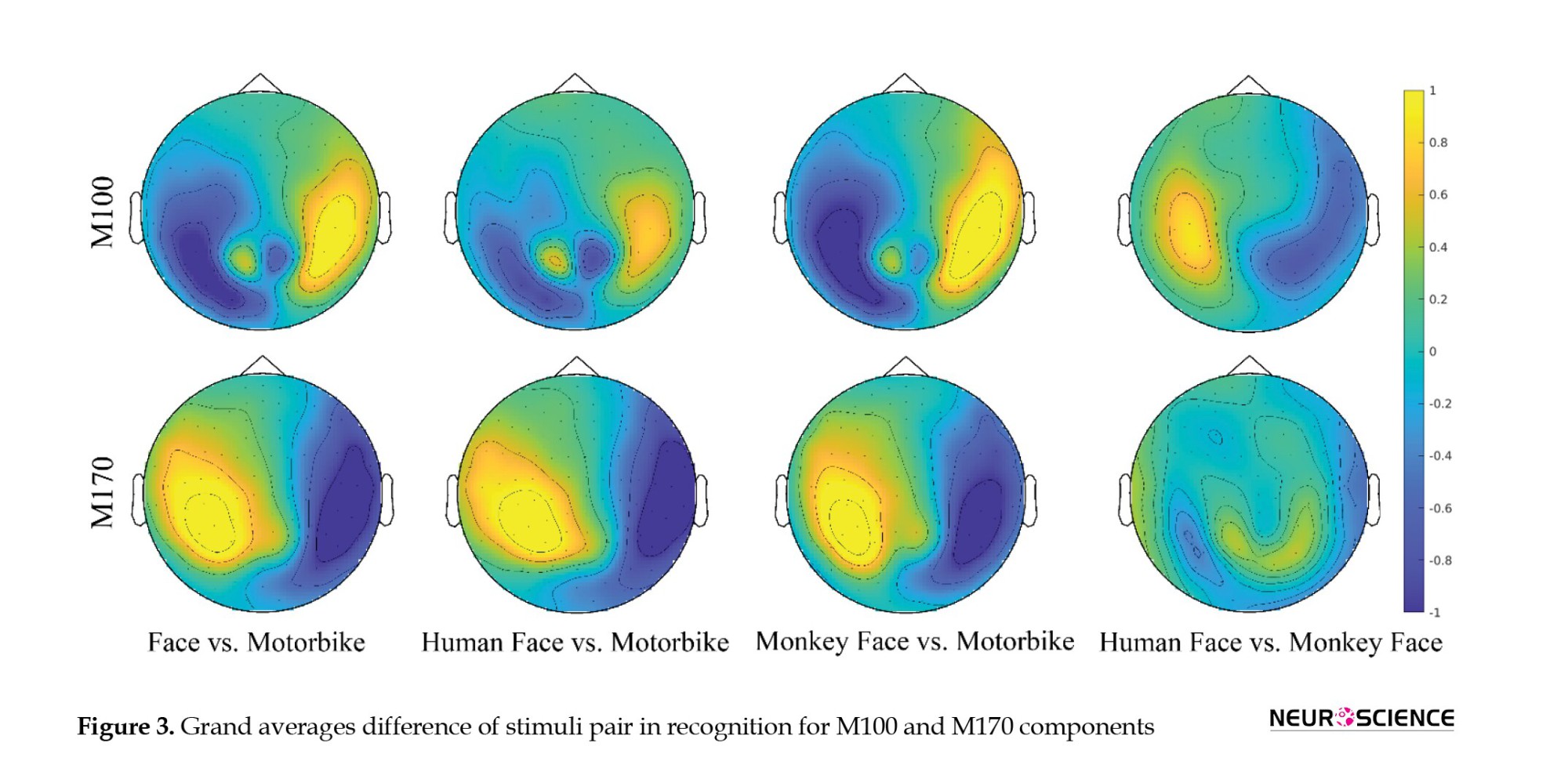

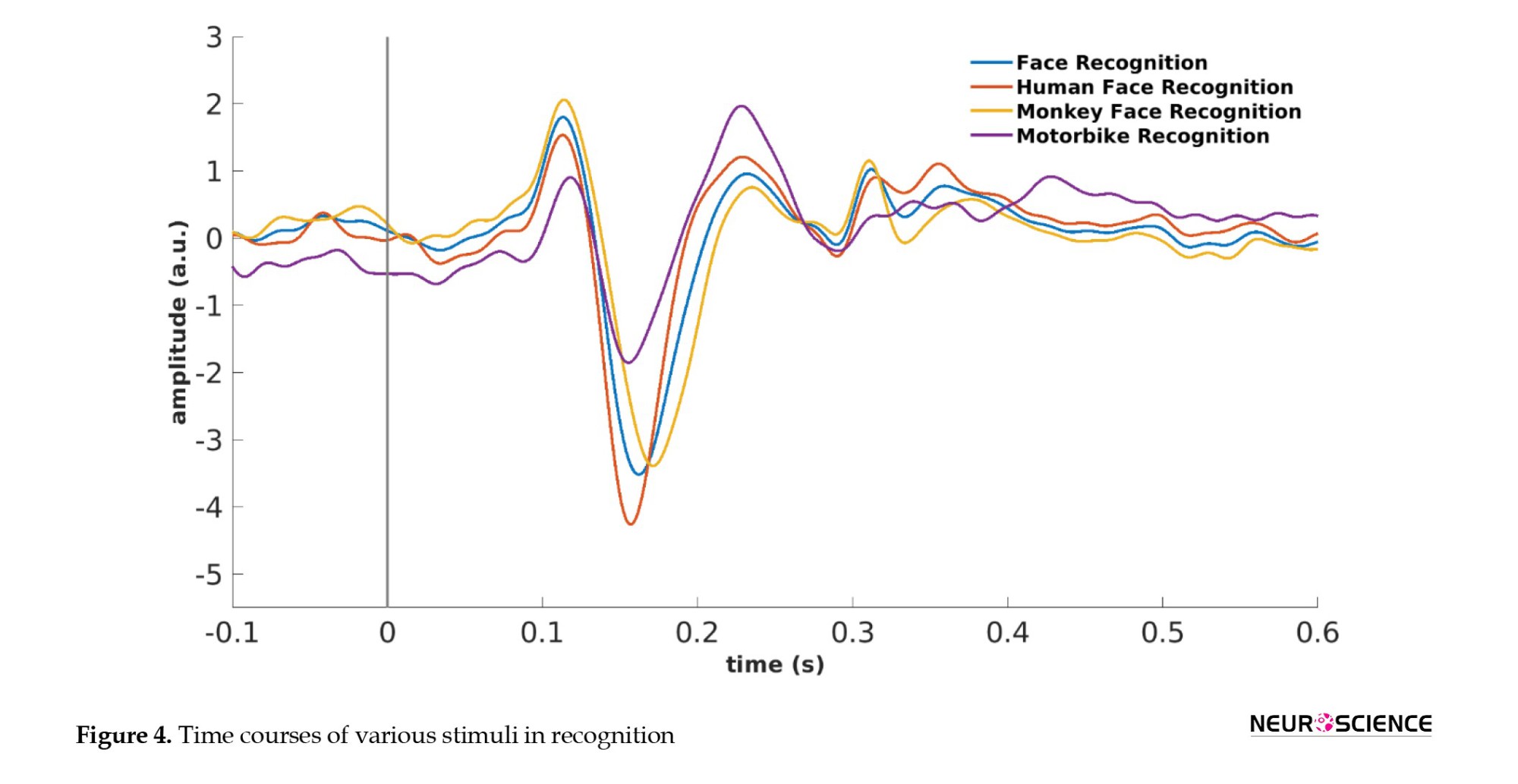

The difference between the stimuli in recognition was significant in most cases. Considering general faces and motorbike stimuli, there was a significant difference in M100 (P=0.006) emerging from 93 to 114 ms after stimulus onset with 95% CIs for the onset from 81 to 109 ms and for the peak time from 82 to 115 ms after stimulus onset. The comparison between the two stimuli was also significant in M170 (P=0.002) and emerged from 149 to 200 ms after stimulus onset. For the beginning time of this effect, the 95% CI was from 146 to 192 ms, while it was from 155.5 to 198 ms for the peak time.

There was no significant effect when comparing M100 components of human face recognition and motorbike recognition (P=0.058). However, the difference between the two stimuli was significant in M170, which emerged from 142 to 180 ms after stimulus onset. For this effect, the 95% CIs were from 136 to 178 ms for the beginning time and from 153 to 178.65 ms for the peak time.

The comparison between monkey face and motorbike recognition was significant for the M100 (P=0.004) and M170 (P=0.002) components. Regarding the M100, a significant effect was found from 81 to 117 ms with a 95% CI from 81 to 110 ms for the onset and for the peak time from 82 to 116 ms after stimulus onset. Concerning M170, a significant effect emerged from 159 to 200 ms after stimulus onset. The 95% CIs were from 159 to 195 ms for the onset and 180 to 199 ms for the peak time.

Face subcategories also differed significantly in recognition in both the M100 and the M170 (P=0.002 for both components). The difference between human face and monkey face recognition emerged from 95 to 130 ms after stimulus onset in the M100 and from 131 to 200 ms in the M170. For the M100, the 95% CIs were from 81 to 125 ms for the onset and from 81.4 to 130 ms for the peak; for the M170, they were from 131 to 190 ms for the onset and from 143 to 197.5 ms for the peak.

The topoplots for the difference in recognition are shown in Figure 3.

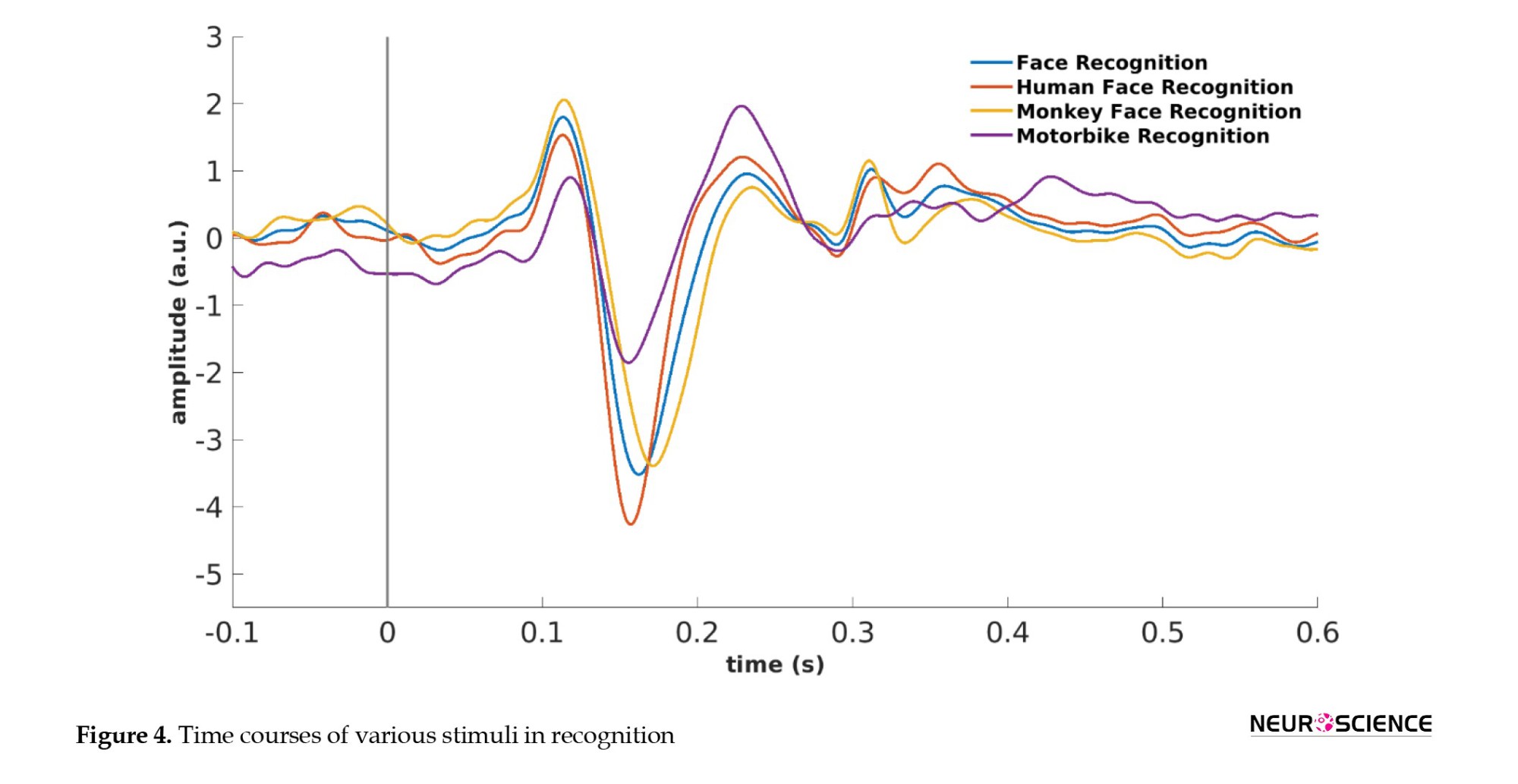

Figure 4 plots the time courses for the grand average of various stimuli types over the occipitotemporal channels in recognition.

Considering the face as a single stimulus type and comparing the perception and recognition, we could not find any significant effect in the M100 and M170 components (P=0.61 and 0.07, respectively).

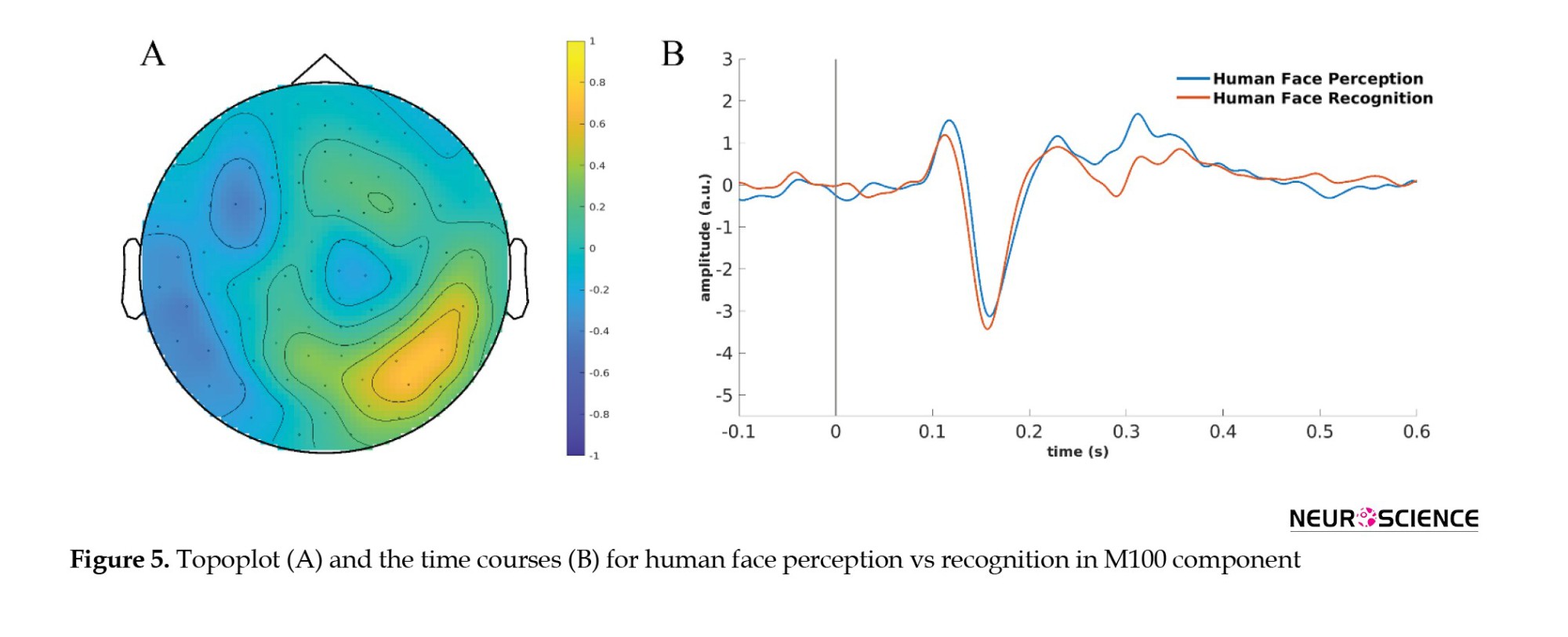

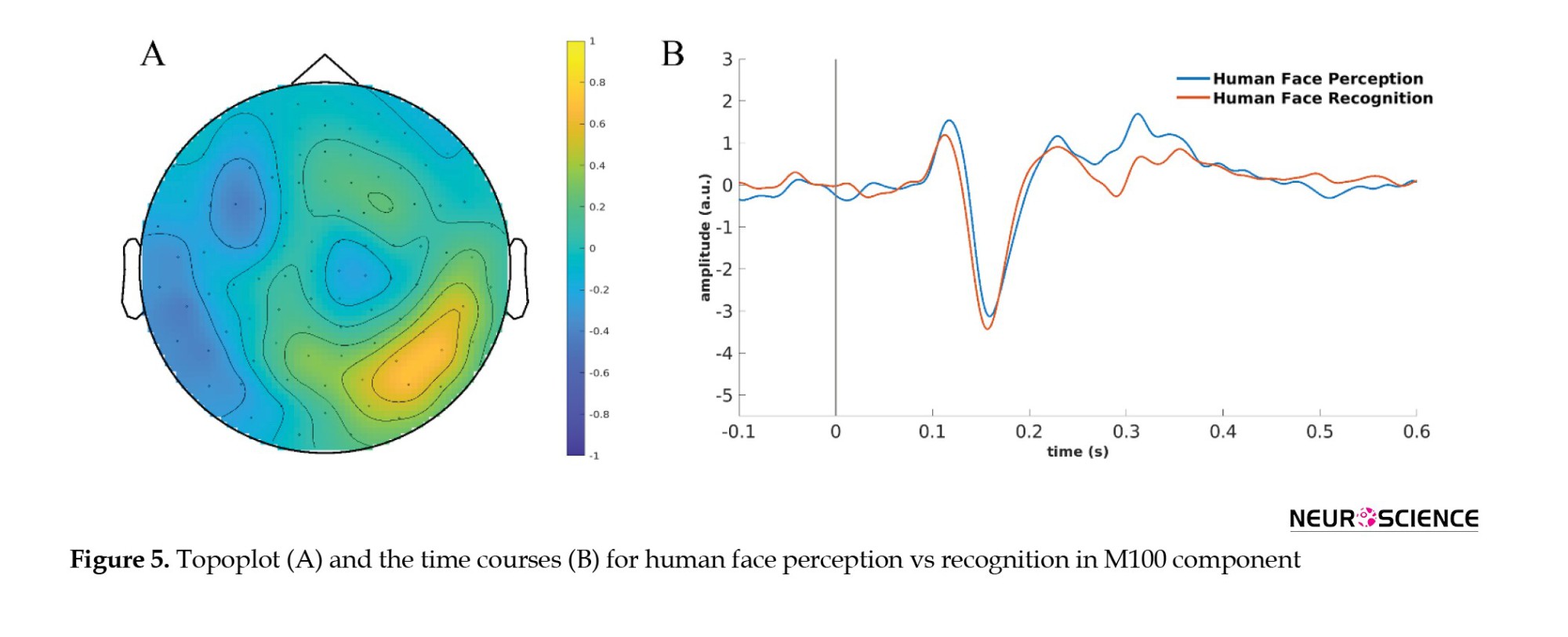

There was a significant effect in the M100 component (P=0.01) when comparing human face perception with recognition, which appeared from 117 to 130 ms after stimulus onset. The 95% CIs for this effect were from 93 to 129 ms for the onset and from 98 to 130 ms for the peak time. However, there was no significant effect in M170 between human face perception and recognition after Bonferroni correction (P=0.032).

Monkey face perception and recognition did not differ significantly in the M100 (P=0.31) or, after Bonferroni correction, in the M170 (P=0.026). Motorbike perception and recognition also did not differ significantly in the M100 (P=0.47) or the M170 (P=0.14).
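As a worked check of the Bonferroni rule from the Methods (accept a cluster only if P<0.025), the snippet below applies it to the P values reported in this and the two preceding paragraphs for perception vs recognition; only the human face M100 comparison passes. The dictionary layout is simply a convenient way to hold the reported numbers.

```python
# P values reported above for perception vs recognition within each stimulus type
ALPHA = 0.05 / 2  # Bonferroni: M100 and M170 tested separately

reported = {
    ("face", "M100"): 0.61,
    ("face", "M170"): 0.07,
    ("human face", "M100"): 0.01,
    ("human face", "M170"): 0.032,
    ("monkey face", "M100"): 0.31,
    ("monkey face", "M170"): 0.026,
    ("motorbike", "M100"): 0.47,
    ("motorbike", "M170"): 0.14,
}

# True where the comparison survives the corrected threshold
significant = {key: p < ALPHA for key, p in reported.items()}

if __name__ == "__main__":
    for (stimulus, component), ok in significant.items():
        print(f"{stimulus:12s} {component}: {'significant' if ok else 'n.s.'}")
```

Note how the two values just above the threshold (0.026 for monkey faces and, in the results for stimulus pairs, human faces vs motorbikes) fail by a narrow margin.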

The topoplot in Figure 5A shows the difference between grand averages of human face perception and recognition in the M100 component. The time course over occipitotemporal channels for human face perception and recognition is shown in Figure 5B.

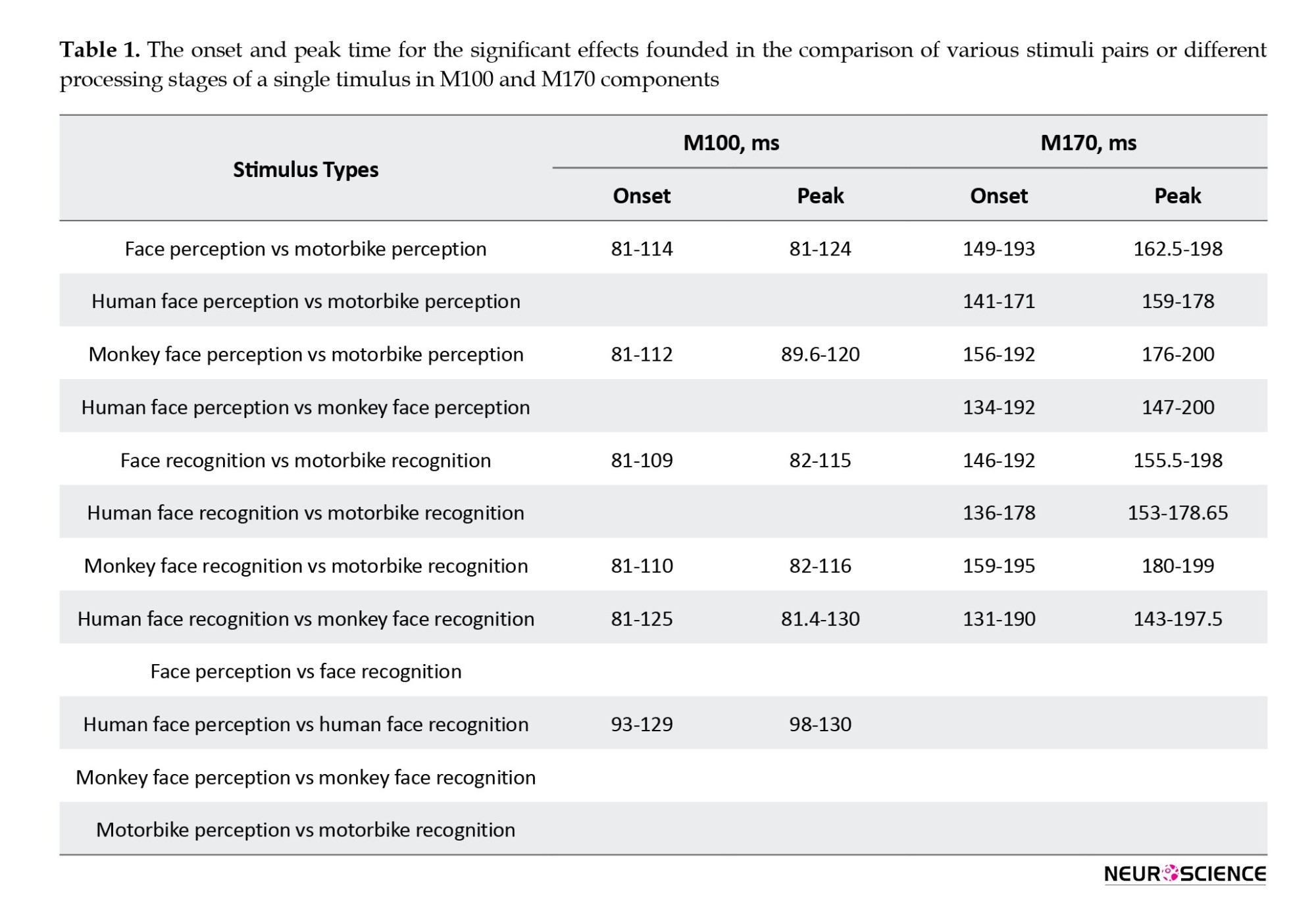

Table 1 lists the onset and peak times of the significant effects for the comparisons between stimulus pairs in the M100 and M170 components during perception and recognition; these times differ between perception and recognition. Comparisons that showed no significant effect are highlighted in grey.

4. Discussion

The most prominent visual event-related activity occurs within 200 ms of stimulus onset (Rossion & Caharel, 2011). In this study, we assessed two of the most conspicuous event-related components, the M100 and M170, and traced their amplitude changes during stimulus perception and recognition. To this end, we ran cluster-based permutation tests for each pair of stimulus types in perception and in recognition, as well as between perception and recognition for each stimulus type. For each significant effect found by the permutation analyses, we then ran a separate bootstrap analysis to estimate its onset and peak times.

For the M100 component, human face traces had a larger peak than motorbike traces in the recognition phase, but the comparison was not significant in perception after Bonferroni correction. Nevertheless, the grand-average time courses show the same pattern for human face and motorbike perception. Consistent with our findings, multiple previous studies have reported a larger M100 amplitude for faces than for objects (Herrmann et al., 2005; Okazaki et al., 2008; Rossion & Jacques, 2008). The comparison between monkey face and motorbike stimuli in the M100 was significant in both perception and recognition, with monkey faces showing a higher peak than motorbikes in both cases. This result suggests that a more face-selective M100 pattern is present for non-human faces. When comparing human and monkey faces in the M100, we found that monkey faces elicited a higher peak than human faces in recognition. Consistent with our results, Balas and Koldewyn (2013) found a significant difference between human and non-human face processing in the M100, with a larger peak amplitude for dog faces than for human faces. In perception, however, we could not dissociate human from monkey faces in the M100. Our results suggest that, although the M100 is often face-selective, this is not always the case, in line with the ongoing debate in the literature about the face selectivity of this component (Boutsen et al., 2006; Rivolta et al., 2012). In other words, when conservative statistics such as permutation analyses with Bonferroni correction are applied, the M100 is face-selective in recognition for both human and monkey faces, whereas in perception it is selective for monkey faces but not for human faces.

In most cases, our results show a clear pattern in M170 amplitudes across stimuli in both the perception and recognition phases. In particular, the M170 peak was always larger for human faces than for motorbikes. That faces evoke a larger M170 than objects has been widely reported (Rousselet et al., 2008; Rossion & Caharel, 2011; Daniel & Bentin, 2012). Comparing face subcategories (here, human vs monkey faces) was more challenging. Previous studies mainly compared human and non-human faces at the perception level: some reported no M170 difference between human and non-human face perception (Carmel & Bentin, 2002; Rousselet et al., 2004; Balas & Koldewyn, 2013), whereas others reported a larger M170 for non-human than for human faces (Haan et al., 2002). In our data, the permutation analyses comparing human and monkey face perception identified multiple occipitotemporal channels. In some of these channels the M170 peak was larger for monkey faces, whereas in some temporal and occipital channels the reverse held, i.e. the M170 was larger for human than for monkey face perception. At the recognition level, the M170 peak was higher for human faces than for monkey faces. This may reflect human expertise in processing faces of our own species but not those of other species (Pascalis & Bachevalier, 1998).

Monkey face traces also had a larger M170 peak than motorbike traces, in both perception and recognition. Moreover, when human and monkey faces were merged into a general face category, the M170 peak was again larger than for motorbikes. This result implies that, when faces are compared with objects, the species of the face matters little: in all our comparisons, faces elicited a larger M170 than objects.

Regarding the difference between perception and recognition within each stimulus type, the only significant M100 comparison was for human faces. This finding may indicate that human faces engage different attentional resources in perception and recognition, whereas for the other stimuli the amount of attention used in the two stages is comparable.

The difference between perception and recognition in the M170 was not significant for any stimulus type, in contrast to Daniel and Bentin (2012), who found a significant M170 difference between face perception and recognition. However, their criterion for recognition differed from ours: their participants had to judge the gender or familiarity of the second stimulus, whereas ours had to decide whether the second stimulus was identical to the first. This discrepancy suggests that the results are highly sensitive to task design and analysis methods.

The 95% CI of the onset time of a significant effect indicates how quickly the brain distinguishes between two kinds of stimuli, whereas the 95% CI of its peak time indicates when the two are maximally separable. Our results reveal a consistent ordering of the M170 effects, for both onset and peak times and in both perception and recognition: the distinction between human and monkey faces occurred earlier than that between human faces and motorbikes, which in turn occurred earlier than that between monkey faces and motorbikes. Previous studies have widely reported that face processing is faster than object processing and that this speed is mostly expressed in the N170 component (Hsiao & Cottrell, 2008; Crouzet et al., 2010; Rossion, 2014). Our results extend these findings by showing that this speed is also present when discriminating between face subcategories, i.e. human vs monkey faces. Moreover, the distinction between human faces and motorbikes occurred earlier than that between monkey faces and motorbikes, likely because of human expertise in processing faces of our own species but not those of other species (Pascalis & Bachevalier, 1998). We could not, however, find significant M170 differences between perception and recognition within any stimulus type. When comparing stimuli, we usually observed earlier onset and peak times in recognition than in perception, probably because our task design facilitated recognition: since participants knew that the second stimulus in each trial always belonged to the same category as the first, it is plausible that the M170 effects begin and peak earlier in recognition than in perception.
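The ordering described above can be checked against the 95% CIs reported in the Results. The sketch below sorts the three M170 comparisons by the lower bound of their onset and peak CIs; this is a descriptive summary of the reported numbers, not a statistical test, and the intervals overlap considerably. The same numbers also show that recognition lower bounds are earlier for two of the three comparisons, with monkey face vs motorbike as the exception (onset 159 vs 156 ms, peak 180 vs 176 ms).

```python
# 95% CI bounds (ms) reported in the Results for the significant M170 effects
m170_ci = {
    "perception": {
        "human vs monkey face": {"onset": (134, 192), "peak": (147, 200)},
        "human face vs motorbike": {"onset": (141, 171), "peak": (159, 178)},
        "monkey face vs motorbike": {"onset": (156, 192), "peak": (176, 200)},
    },
    "recognition": {
        "human vs monkey face": {"onset": (131, 190), "peak": (143, 197.5)},
        "human face vs motorbike": {"onset": (136, 178), "peak": (153, 178.65)},
        "monkey face vs motorbike": {"onset": (159, 195), "peak": (180, 199)},
    },
}


def order_by_lower_bound(stage, measure):
    """Comparison names sorted by the lower bound of their CI (earliest first)."""
    items = m170_ci[stage].items()
    return [name for name, ci in sorted(items, key=lambda kv: kv[1][measure][0])]


if __name__ == "__main__":
    for stage in ("perception", "recognition"):
        for measure in ("onset", "peak"):
            print(stage, measure, order_by_lower_bound(stage, measure))
```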

For the M100 component, there was hardly any difference between the onset and peak times of the various comparisons; at about 100 ms after stimulus onset, we could barely distinguish either the speed of discrimination or the time of maximum separability for any pair of stimuli.

5. Conclusion

The current study examined the differences between face and object processing in perception and recognition in M100 and M170 components. Based on our findings, M100 is a face-selective component in recognition but not always in perception. The comparison between faces and objects in M170 always revealed a higher peak for faces than objects. Considering the onset and the peak time, our results suggest that while there was hardly any distinction between various comparisons in M100, a unique pattern was present in the M170 component.

Limitations

The major limitation of this study is that the results are confined to sensor-space activity, owing to the absence of individual MRI scans. Future studies could identify the brain regions involved in the effects we found and the latencies in those regions. In addition, event-related field components later than 200 ms deserve to be assessed, especially in terms of their onset and peak times.

Ethical Considerations

Compliance with ethical guidelines

All procedures agreed with the Code of Ethics of the World Medical Association (the Declaration of Helsinki). This study was approved by the Oxford Research Ethics Committee B (Code: 07/H0605/124). Written informed consent was acquired from all individuals.

Funding

The paper was extracted from the PhD dissertation of Narjes Soltani Dehaghani, approved by the Institute for Cognitive and Brain Sciences, Shahid Beheshti University, Tehran, Iran. In this study, Sven Braeutigam was supported by the Wellcome Centre for Integrative Neuroscience (WIN) and the Department of Psychiatry, University of Oxford, Oxford, United Kingdom. Narjes Soltani Dehaghani was supported for lab visits to the Max Planck Institute, Leipzig, Germany, and the Oxford Centre for Human Brain Activity (OHBA) by the German Academic Exchange Service (DAAD) and the Cognitive Sciences and Technologies Council, Tehran, Iran.

Authors' contributions

Conceptualization, study design, and data collection: Sven Braeutigam; Source analysis and statistical analysis: Narjes Soltani Dehaghani and Burkhard Maess; Writing the original draft: Narjes Soltani Dehaghani; Review and editing: Burkhard Maess, Reza Khosrowabadi, Mojtaba Zarei, and Sven Braeutigam; Data interpretation and final approval: All authors.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors thank Vanessa Murray-Walpole for helping with data acquisition and Anthony Bailey for contributing to the study design.

References

The face is a unique dominant stimulus. There are various reasons behind this fact, including quick processing speed, the ability to convey an immense amount of information at a glance, and its extensive familiarity with human beings (Rossion 2014).

There are many ways to study how brain activities change when confronted with facial stimuli. One of the most prevalent approaches to studying brain functions is event-related activities. Event-related activity is a robust measurement to inspect time-locked events (Rossion 2014; Besson et al., 2017). A well-accepted modality for recording event-related activities is magnetoencephalography (MEG). It has a high temporal and acceptable spatial resolution, allowing us to track momentary changes in brain dynamics (Singh, 2014).

Inspecting the electrophysiological correlates of facial processing, multiple studies have found a face-selective component around 170 ms after stimulus onset (Yovel, 2016). This component is known as M170 for faces. Besides the face, in visual inspection of objects, a negative event-related component known as N1 (M1 in MEG) occurs with similar latency to M170 (Rossion & Caharel, 2011). However, compared to N1, the M170 component has a larger peak and is most conspicuous over the occipitotemporal parts of the human brain (Rossion & Jacques, 2008). The M170 component is believed to represent the early structural encoding of faces (Bentin & Deouell, 2000; Eimer, 2000) and sometimes even contains some information about the identity (Jacques & Rossion, 2006; Vizioli et al., 2010).

An earlier component peaks about 100 ms after the starting time of stimulus presentation and is called M100 or P1 (Linkenkaer-Hansen et al., 1998; Rivolta et al., 2012). The M100 component reflects low-level properties in visual stimuli, including features like luminance and size (Negrini et al., 2017); it is also an important attentional component (Luck et al., 1990; Mangun & Hillyard, 1995). The M100 component is sometimes considered a face-selective component, and some studies have reported a larger M100 peak in response to face stimuli than objects (Goffaux et al., 2003; Itier & Taylor, 2004). However, some studies have stated that the M100 is not a face-selective component (Boutsen et al., 2006).

Although previous studies have shed light on the importance of the M100 and M170 components in the context of the face as well as object processing, the existence of significant effects in these components during different levels of facial processing as well as the onset and peak time of these components are still controversial. The onset time of an effect is important because it determines the speed at which the distinction between various stimulus pairs takes place in the human brain. Additionally, the time at which the maximum difference between the two conditions happens clarifies their maximum separability. In the present study, we examined the M100 and M170 components in perception and recognition levels of facial and object processing. Additionally, we did not confine facial processing to human face stimuli, but we extended it to another species of face stimuli and sought the probable difference in any of the mentioned processing levels.

2. Materials and Methods

Study participants

In our study, 22 healthy individuals with a mean age of 24±5 years participated. They included 21 males, and 20 were right-handed.

Experimental procedure and data acquisition

Images were static and grey-scale acquired from the FERET open-source database (Phillips et al., 1998). Each image was displayed for 200 ms. All images were standardized for size (subtended 8×6 degrees at the eye) and luminosity (42±8 cd⁄m²). The images were projected onto a screen placed 90 cm in front of participants’ eyes while they sat under the MEG helmet. Three different types of stimuli were recruited in the present study: human face, monkey face, and motorbike. We presented two images of the same type in sequential order during each trial. The beginning time of the presentation for the second image was after a delay of 1.2±0.3 s, pursuing the first displayed image. The subjects had to decide about the equality of the second image with the first one in a response window of 1 second. In other words, our task contained a perception level in the first presented stimulus and a recognition level in the second presented stimulus per trial. The two images presented in each trial were always the same type and displayed randomly. We included 48 trials per category, in half of which the first image was repeated as the second image and in the other half not.

During the task, neuromagnetic responses were recorded with the VectorView™ MEG system of the Brain Research Group at the Oxford Centre for Human Brain Activity (OHBA). The data were sampled at 1000 Hz with a 0.03–330 Hz bandwidth.

Data preprocessing

Initial preprocessing used the signal-space separation (SSS) noise-reduction method implemented in MEGIN/Elekta Neuromag MaxFilter (version 2.2.15; MEGIN, Helsinki), and all later analyses used the FieldTrip toolbox for EEG/MEG analysis (Oostenveld et al., 2011). The data were segmented into epochs from 300 ms before to 1000 ms after stimulus onset. Before filtering, we padded each trial, setting the padding length to 10 s. We then applied a high-pass filter at 0.5 Hz and a low-pass filter at 150 Hz, each a single-pass, zero-phase windowed-sinc FIR filter with a Kaiser window and a maximum passband deviation of 0.001. The low-pass and high-pass filters had 93 and 3625 coefficients, respectively. To reduce heartbeat and eye-movement artifacts, we used independent component analysis and removed the contaminated components.
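The filtering step can be sketched in Python with SciPy. This is not FieldTrip's implementation; it assumes that the 0.5 Hz cutoff belongs to the high-pass filter and 150 Hz to the low-pass filter (the only assignment consistent with the 3625- and 93-coefficient lengths, since a lower cutoff needs a longer filter), converts the 0.001 maximum passband deviation into a Kaiser β via the equivalent 60 dB attenuation, and uses mirror padding, which the text does not specify.

```python
import numpy as np
from scipy.signal import firwin, kaiser_beta

FS = 1000.0                 # sampling rate of the recording (Hz)
BETA = kaiser_beta(60.0)    # 60 dB <-> maximum deviation of 0.001

# Tap counts from the text; odd lengths give symmetric, linear-phase kernels.
LOWPASS = firwin(93, 150.0, window=("kaiser", BETA), fs=FS)
HIGHPASS = firwin(3625, 0.5, window=("kaiser", BETA), pass_zero=False, fs=FS)


def zero_phase(x, taps):
    """Single-pass FIR filtering with the group delay removed.

    mode="same" keeps the centre of the full convolution, which for an
    odd-length symmetric kernel shifts the output back by (len(taps) - 1) // 2
    samples, so the filtered signal has zero phase.
    """
    return np.convolve(x, taps, mode="same")


def filter_epoch(epoch, pad_s=10.0):
    """Pad one channel's epoch to pad_s seconds, filter, and cut it back out.

    Assumption: mirror ("reflect") padding; only the 10 s length is reported.
    """
    n = epoch.size
    total = int(round(pad_s * FS))
    left = (total - n) // 2
    right = total - n - left
    padded = np.pad(epoch, (left, right), mode="reflect")
    filtered = zero_phase(zero_phase(padded, HIGHPASS), LOWPASS)
    return filtered[left:left + n]
```

Single-pass filtering with an odd, symmetric kernel is linear-phase; removing the (N−1)/2-sample delay makes it zero-phase without squaring the magnitude response, as forward-backward filtering would. The 10 s padding matters here because the 3625-tap high-pass kernel is longer than a 1.3-s epoch.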

Data processing and statistical analyses

Analyses were based on the magnetometer channels because, after SSS correction, magnetometers and gradiometers carry approximately the same information (Garcés et al., 2017).

We compared several pairs of conditions to contrast face and object processing in perception and recognition. We considered three face categories, namely human faces, monkey faces, and general faces (human and monkey faces combined), and compared each of them with motorbikes. We also compared human with monkey face processing, and perception with recognition for each stimulus type. For each pair of conditions, we first normalized the data across the two conditions for each subject and then ran a cluster-based permutation test on their evoked responses using a two-tailed dependent-samples t-test (Maris & Oostenveld, 2007), separately for the M100 and M170 components. This tested whether either component differed significantly between the two conditions. We used the Monte Carlo method (Maris & Oostenveld, 2007) and restricted the analysis to 80–130 ms after stimulus onset for the M100 component and to 130–200 ms after stimulus onset for the M170 component. Temporally and spatially adjacent samples whose t values exceeded the critical threshold for an uncorrected P level of 0.05 were grouped into connected clusters. The cluster-level statistic was the sum of the t values within each cluster, and the maximum of the cluster-level statistics was used for inference. The family-wise false alarm rate was controlled with a two-tailed threshold of 0.05, and the permutation distribution was estimated from 1000 random draws. Because the M100 and M170 components were analyzed separately, we applied a Bonferroni correction and accepted a cluster only if its P<0.025.
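The following NumPy/SciPy sketch shows the logic of a dependent-samples cluster-based permutation test as described above; it is a simplified stand-in, not FieldTrip's `ft_timelockstatistics`. It assumes inputs of shape (subjects, channels, times) already cropped to the component window (80–130 ms for M100, 130–200 ms for M170), a boolean channel adjacency matrix (hypothetical here), random sign flips of the per-subject differences as the permutation scheme, and a two-tailed decision based on the largest absolute cluster sum. With the Bonferroni correction above, a cluster would be accepted if its p < 0.025.

```python
import numpy as np
from scipy import stats


def paired_t(diff):
    """Paired t values per (channel, time); diff is (subjects, channels, times)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def label_clusters(mask, adjacency):
    """Connected components of a (channels, times) boolean mask. Neighbours are
    the same channel at t-1/t+1 or an adjacent channel at the same time."""
    labels = np.zeros(mask.shape, dtype=int)
    n_times = mask.shape[1]
    n_clusters = 0
    for c0, t0 in zip(*np.nonzero(mask)):
        if labels[c0, t0]:
            continue
        n_clusters += 1
        labels[c0, t0] = n_clusters
        stack = [(c0, t0)]
        while stack:
            c, t = stack.pop()
            neighbours = [(c, t - 1), (c, t + 1)]
            neighbours += [(c2, t) for c2 in np.nonzero(adjacency[c])[0]]
            for c2, t2 in neighbours:
                if 0 <= t2 < n_times and mask[c2, t2] and not labels[c2, t2]:
                    labels[c2, t2] = n_clusters
                    stack.append((c2, t2))
    return labels, n_clusters


def cluster_sums(tmap, threshold, adjacency):
    """Sum of t values in each positive and each negative cluster."""
    sums = []
    for sign in (1.0, -1.0):
        labels, n = label_clusters(sign * tmap > threshold, adjacency)
        sums.extend(tmap[labels == k].sum() for k in range(1, n + 1))
    return np.array(sums)


def cluster_permutation_test(cond_a, cond_b, adjacency, n_perm=1000, alpha=0.05, seed=0):
    diff = cond_a - cond_b                                # paired design
    n = diff.shape[0]
    threshold = stats.t.ppf(1 - alpha / 2, df=n - 1)      # uncorrected two-tailed cutoff
    observed = cluster_sums(paired_t(diff), threshold, adjacency)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        flips = rng.choice([-1.0, 1.0], size=n)[:, None, None]  # swap labels per subject
        perm = cluster_sums(paired_t(diff * flips), threshold, adjacency)
        null_max[i] = np.abs(perm).max() if perm.size else 0.0
    exceed = null_max[None, :] >= np.abs(observed)[:, None]
    p_values = (exceed.sum(axis=1) + 1) / (n_perm + 1)
    return observed, p_values
```

`cluster_permutation_test(a, b, adjacency)` returns one summed t value and one Monte Carlo p value per observed cluster. Adding 1 to the numerator and denominator keeps p above zero; this is a common convention, not necessarily the one FieldTrip uses.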

To estimate the onset and peak latencies of the effects found by the cluster-based permutation tests more precisely, we used bootstrapping: participants were resampled to obtain 95% confidence intervals (CIs) for the onset and peak latencies of each significant effect. Following the approach of Cichy et al. (2014), we created 100 bootstrap samples by resampling participants with replacement and, for each sample, repeated the cluster-based permutation test with the same configuration as in the original analysis.
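A minimal sketch of the participant-level bootstrap is given below. For brevity it replaces the full re-run of the cluster test in each bootstrap sample with a hypothetical shortcut: the peak is the time of the largest absolute paired t value across channels, and the onset is the first time point of the contiguous supra-threshold run containing that peak. Taking the 95% CIs as the 2.5th and 97.5th percentiles of the 100 bootstrap estimates is likewise an assumption about how the intervals were formed.

```python
import numpy as np
from scipy import stats


def onset_and_peak(diff, times, alpha=0.05):
    """Simplified stand-in for re-running the cluster test on one sample.

    Peak: time of the largest |paired t| across channels.
    Onset: first time point of the contiguous supra-threshold run containing the peak.
    """
    n = diff.shape[0]
    t = diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))
    t_max = np.abs(t).max(axis=0)                    # strongest channel per time point
    threshold = stats.t.ppf(1 - alpha / 2, df=n - 1)
    peak = int(np.argmax(t_max))
    if t_max[peak] <= threshold:
        return np.nan, np.nan                        # no effect in this sample
    onset = peak
    while onset > 0 and t_max[onset - 1] > threshold:
        onset -= 1
    return times[onset], times[peak]


def bootstrap_latency_ci(cond_a, cond_b, times, n_boot=100, seed=0):
    """95% percentile CIs for onset and peak, resampling participants with replacement."""
    diff = cond_a - cond_b                           # (subjects, channels, times)
    n = diff.shape[0]
    rng = np.random.default_rng(seed)
    onsets, peaks = np.empty(n_boot), np.empty(n_boot)
    for b in range(n_boot):
        sample = rng.integers(0, n, size=n)          # participant indices, with replacement
        onsets[b], peaks[b] = onset_and_peak(diff[sample], times)
    return np.nanpercentile(onsets, [2.5, 97.5]), np.nanpercentile(peaks, [2.5, 97.5])
```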

3. Results

Comparing general face perception with motorbike perception, we found a significant M100 effect from 81 to 130 ms after stimulus onset (P=0.004). The 95% CI for the onset of this effect ranged from 81 to 114 ms, and that for its peak from 81 to 124 ms after stimulus onset. Faces and motorbikes also differed significantly in the M170 component (P=0.002), with an effect from 153 to 200 ms after stimulus presentation; the 95% CIs for its onset and peak were 149–193 ms and 162.5–198 ms, respectively.

Comparing human face perception and monkey face perception separately with motorbike perception gave the following results. For the M100 component, human face and motorbike perception did not differ significantly after Bonferroni correction (P=0.026). Monkey face and motorbike perception, however, differed significantly in M100 from 90 to 125 ms after stimulus onset (P=0.018), with 95% CIs of 89–130 ms for the onset and 89.6–120 ms for the peak. For the M170 component, the cluster-based permutation test revealed a significant difference (P=0.002) between human face and motorbike perception from 149 to 181 ms after stimulus onset. Bootstrapping gave a 95% CI of 141–171 ms after stimulus onset for the onset of this effect and 159–178 ms for its peak. Monkey face and motorbike perception differed significantly in M170 from 158 to 200 ms after stimulus onset (P=0.002), with 95% CIs of 156–192 ms for the onset and 176–200 ms for the peak.

Human and monkey face perception did not differ significantly in M100 (P=0.34). However, they differed significantly in M170 (P=0.002), with 95% CIs of 134–192 ms for the onset and 147–200 ms for the peak.

Figure 1 shows topoplots of the grand-average differences for these perception comparisons; the differences are located mainly over occipitotemporal channels.

Figure 2 shows the grand-average time courses for stimulus perception over the occipitotemporal channels.

The difference between the stimuli in recognition was significant in most cases. Considering general faces and motorbike stimuli, there was a significant difference in M100 (P=0.006) emerging from 93 to 114 ms after stimulus onset with 95% CIs for the onset from 81 to 109 ms and for the peak time from 82 to 115 ms after stimulus onset. The comparison between the two stimuli was also significant in M170 (P=0.002) and emerged from 149 to 200 ms after stimulus onset. For the beginning time of this effect, the 95% CI was from 146 to 192 ms, while it was from 155.5 to 198 ms for the peak time.

There was no significant effect when comparing M100 components of human face recognition and motorbike recognition (P=0.058). However, the difference between the two stimuli was significant in M170, which emerged from 142 to 180 ms after stimulus onset. For this effect, the 95% CIs were from 136 to 178 ms for the beginning time and from 153 to 178.65 ms for the peak time.

The comparison between monkey face and motorbike recognition was significant for the M100 (P=0.004) and M170 (P=0.002) components. Regarding the M100, a significant effect was found from 81 to 117 ms with a 95% CI from 81 to 110 ms for the onset and for the peak time from 82 to 116 ms after stimulus onset. Concerning M170, a significant effect emerged from 159 to 200 ms after stimulus onset. The 95% CIs were from 159 to 195 ms for the onset and 180 to 199 ms for the peak time.

The two face subcategories also differed significantly in recognition, in both M100 and M170 (P=0.002 for both components). The difference between human and monkey face recognition emerged from 95 to 130 ms after stimulus onset in M100 and from 131 to 200 ms in M170. In M100, the 95% CIs were 81–125 ms for the onset and 81.4–130 ms for the peak; in M170, they were 131–190 ms for the onset and 143–197.5 ms for the peak.

The topoplots for the difference in recognition are shown in Figure 3.

Figure 4 shows the grand-average time courses for the different stimulus types over the occipitotemporal channels in recognition.

Treating faces as a single stimulus type, we found no significant difference between perception and recognition in the M100 or M170 component (P=0.61 and P=0.07, respectively).

There was a significant effect in the M100 component (P=0.01) when comparing human face perception with recognition, which appeared from 117 to 130 ms after stimulus onset. The 95% CIs for this effect were from 93 to 129 ms for the onset and from 98 to 130 ms for the peak time. However, there was no significant effect in M170 between human face perception and recognition after Bonferroni correction (P=0.032).

Monkey face perception and recognition did not differ significantly in M100 (P=0.31) or, after Bonferroni correction, in M170 (P=0.026). Likewise, motorbike perception and recognition did not differ significantly in the M100 (P=0.47) or M170 (P=0.14) components.

The topoplot in Figure 5A shows the difference between grand averages of human face perception and recognition in the M100 component. The time course over occipitotemporal channels for human face perception and recognition is shown in Figure 5B.

Table 1 summarizes the onset and peak times of the significant effects for the different stimulus pairs in the M100 and M170 components, in perception and recognition; these latencies differ between perception and recognition. Comparisons that showed no significant effect are highlighted in grey.

4. Discussion

The most prominent visual event-related activity occurs within the first 200 ms after stimulus onset (Rossion & Caharel, 2011). In this study, we assessed two of the most conspicuous event-related components, M100 and M170, and traced changes in their amplitude during stimulus perception and recognition. To this end, we ran cluster-based permutation tests separately for each pair of stimulus types in perception and in recognition, as well as between perception and recognition for each stimulus type. For each significant effect found by the permutation analyses, we ran a separate bootstrap analysis to estimate its onset and peak times.

Considering the M100 component, human face traces had a larger peak than motorbike traces in the recognition phase, whereas in perception the comparison was not significant after Bonferroni correction. Nevertheless, the grand-average time courses show the same pattern for human face and motorbike perception. Consistent with our findings, multiple previous studies reported a larger M100 amplitude for faces than for objects (Herrmann et al., 2005; Okazaki et al., 2008; Rossion & Jacques, 2008). The comparison between monkey faces and motorbikes in M100 was significant in both perception and recognition, with monkey faces showing a higher peak than motorbikes in both. This result suggests a more consistently face-selective M100 pattern for non-human faces. Comparing human and monkey faces in the M100 component, we found a higher peak for monkey faces than for human faces in recognition. In line with this result, Balas and Koldewyn (2013) found a significant difference between human and non-human face processing in M100, with a larger peak amplitude for dog faces than for human faces. In perception, however, we could not dissociate human from monkey faces in the M100 component. Our results suggest that, although M100 is usually face-selective, this is not always the case, which is compatible with the existing debate about the face selectivity of this component (Boutsen et al., 2006; Rivolta et al., 2012). In other words, when conservative statistics such as permutation analyses with Bonferroni correction are applied, M100 is face-selective in recognition for both human and monkey faces, whereas in perception it is face-selective for monkey faces but not for human faces.

Based on our results, the M170 amplitudes of the different stimuli followed a clear pattern in most cases, in both perception and recognition. Specifically, the M170 peak was always larger for human faces than for motorbikes. That faces elicit a larger M170 peak than objects has been widely reported (Rousselet et al., 2008; Rossion & Caharel, 2011; Daniel & Bentin, 2012). In contrast, comparing subcategories of faces (here, human vs monkey faces) was more challenging. Previous studies mainly compared human and non-human faces at the perception level. Some of them reported no difference between human and non-human face perception in M170 (Carmel & Bentin, 2002; Rousselet et al., 2004; Balas & Koldewyn, 2013), while others reported a larger M170 peak for non-human faces than for human faces (de Haan et al., 2002). In our data, the permutation analyses comparing human and monkey face perception identified multiple occipitotemporal channels. In some of these channels, the M170 peak was larger for monkey faces than for human faces, whereas in some temporal and occipital channels the reverse was observed, i.e. the M170 amplitude was larger for human face perception than for monkey face perception. At the recognition level, we observed a higher M170 peak for human faces than for monkey faces. This may reflect human expertise in processing faces of their own species but not those of other species (Pascalis & Bachevalier, 1998).

The monkey face traces had a larger M170 peak than the motorbike traces, in both perception and recognition. Moreover, when human and monkey faces were merged into a general face category, the M170 peak was again larger for faces than for motorbikes. This result suggests that, when faces are compared with objects, the species of the face matters little, and faces consistently elicit a larger M170 than objects.

For the M100 component, the only significant difference between perception and recognition was found for human faces. This finding may indicate that human faces engage different attentional resources in perception and recognition, whereas for the other stimuli the attentional demands of the two stages appear comparable.

The difference between perception and recognition in the M170 component was not significant for any stimulus type. This contrasts with Daniel and Bentin (2012), who reported a significant difference between face perception and recognition in M170. However, their criterion for recognition differed from ours: in their study, participants had to judge the gender or familiarity of the second stimulus, whereas in ours they judged whether the second stimulus was identical to the first. This discrepancy suggests that the results are highly sensitive to task design and analysis methods.

The 95% CI of the onset time of a significant effect indicates how quickly the human brain distinguishes between two stimulus types, whereas the 95% CI of its peak time indicates when the two stimulus types are maximally separable. Our results indicate a consistent latency pattern across comparisons in the M170 component, for both onset and peak times and in both perception and recognition. Specifically, human and monkey faces were distinguished earlier than human faces and motorbikes, which in turn were distinguished earlier than monkey faces and motorbikes, in terms of both onset and peak time in the M170 component. This pattern held in both perception and recognition. Previous studies have widely reported that faces are processed faster than objects and that this speed is mostly reflected in the N170 component (Hsiao & Cottrell, 2008; Crouzet et al., 2010; Rossion, 2014). Our results extend these findings by showing that this speed also applies to distinguishing between subcategories of faces, i.e. human vs monkey faces. Moreover, human faces were distinguished from motorbikes earlier than monkey faces were, which is likely due to human expertise in processing faces of their own species but not those of other species (Pascalis & Bachevalier, 1998). However, we found no significant difference between perception and recognition for any stimulus type in M170. Across stimulus comparisons, onset and peak times were usually earlier in recognition than in perception. This is probably due to the facilitation of recognition relative to perception by our task design: because participants knew that the second stimulus in each trial was always from the same category as the first, it is plausible that the M170 effects begin and peak earlier in recognition than in perception.
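The M170 latency ordering described above can be read off the 95% CIs reported in the Results. The short sketch below only re-enters those reported numbers and ranks the three face-related comparisons by the lower bound of each CI; since the intervals overlap considerably, this ranking describes the pattern and is not a statistical test of latency differences.

```python
# 95% CIs (ms) of the significant M170 effects, as reported in the Results.
M170_CI = {
    "perception": {
        "human face vs monkey face": {"onset": (134, 192), "peak": (147, 200)},
        "human face vs motorbike":   {"onset": (141, 171), "peak": (159, 178)},
        "monkey face vs motorbike":  {"onset": (156, 192), "peak": (176, 200)},
    },
    "recognition": {
        "human face vs monkey face": {"onset": (131, 190), "peak": (143, 197.5)},
        "human face vs motorbike":   {"onset": (136, 178), "peak": (153, 178.65)},
        "monkey face vs motorbike":  {"onset": (159, 195), "peak": (180, 199)},
    },
}


def rank_by_lower_bound(stage, latency):
    """Comparisons of one stage sorted by the lower CI bound of onset or peak."""
    cis = M170_CI[stage]
    return sorted(cis, key=lambda pair: cis[pair][latency][0])


for stage in M170_CI:
    for latency in ("onset", "peak"):
        print(stage, latency, rank_by_lower_bound(stage, latency))
```

All four rankings come out in the same order: human face vs monkey face, then human face vs motorbike, then monkey face vs motorbike.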

For the M100 component, onset and peak times hardly differed across comparisons, so at about 100 ms after stimulus onset we could barely distinguish the stimulus pairs by either the speed of their discrimination or the time of their maximum separability.

5. Conclusion

The current study examined differences between face and object processing in perception and recognition in the M100 and M170 components. Based on our findings, M100 is face-selective in recognition but not always in perception. In M170, faces consistently elicited a higher peak than objects. Regarding onset and peak times, our results suggest that while the different comparisons were hardly distinguishable in M100, the M170 component showed a consistent ordering: human and monkey faces were distinguished earliest, followed by human faces vs motorbikes and then monkey faces vs motorbikes.

Limitations

The major limitation of this study is that the results are confined to sensor-space activity, because individual MRI scans were not available. Future studies could identify the brain regions involved in the effects we found and the latencies of those effects in each region. In addition, event-related field components later than 200 ms, and especially their onset and peak times, deserve to be assessed in future studies.

Ethical Considerations

Compliance with ethical guidelines

All procedures complied with the Code of Ethics of the World Medical Association (Declaration of Helsinki). This study was approved by the Oxford Research Ethics Committee B (Code: 07/H0605/124). Written informed consent was obtained from all participants.

Funding

This paper was extracted from the PhD dissertation of Narjes Soltani Dehaghani, approved by the Institute for Cognitive and Brain Sciences, Shahid Beheshti University, Tehran, Iran. Sven Braeutigam was supported by the Wellcome Centre for Integrative Neuroimaging (WIN) and the Department of Psychiatry, University of Oxford, Oxford, United Kingdom. Narjes Soltani Dehaghani was supported for lab visits to the Max Planck Institute, Leipzig, Germany, and the Oxford Centre for Human Brain Activity (OHBA) by the German Academic Exchange Service (DAAD) and the Cognitive Sciences and Technologies Council, Tehran, Iran.

Authors' contributions

Conceptualization, study design, and data collection: Sven Braeutigam; Source analysis and statistical analysis: Narjes Soltani Dehaghani and Burkhard Maess; Writing the original draft: Narjes Soltani Dehaghani; Review and editing: Burkhard Maess, Reza Khosrowabadi, Mojtaba Zarei, and Sven Braeutigam; Data interpretation and final approval: All authors.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors thank Vanessa Murray-Walpole for helping with data acquisition and Anthony Bailey for contributing to the study design.

References

Balas, B., & Koldewyn, K. (2013). Early visual ERP sensitivity to the species and animacy of faces. Neuropsychologia, 51(13), 2876–2881. [DOI:10.1016/j.neuropsychologia.2013.09.014] [PMID]

Bentin, S., & Deouell, L. Y. (2000). Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology, 17(1), 35-55. [DOI:10.1080/026432900380472] [PMID]

Besson, G., Barragan-Jason, G., Thorpe, S. J., Fabre-Thorpe, M., Puma, S., Ceccaldi, M., et al. (2017). From face processing to face recognition: Comparing three different processing levels. Cognition, 158, 33–43. [DOI:10.1016/j.cognition.2016.10.004] [PMID]

Boutsen, L., Humphreys, G. W., Praamstra, P., & Warbrick, T. (2006). Comparing neural correlates of configural processing in faces and objects: An ERP study of the Thatcher illusion. Neuroimage, 32(1), 352-367. [DOI:10.1016/j.neuroimage.2006.03.023] [PMID]

Carmel, D., & Bentin, S. (2002). Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition, 83(1), 1-29. [DOI:10.1016/S0010-0277(01)00162-7] [PMID]

Cichy, R. M., Pantazis, D., & Oliva, A. (2014). Resolving human object recognition in space and time. Nature Neuroscience, 17(3), 455–462. [DOI:10.1038/nn.3635] [PMID]

Crouzet, S. M., Kirchner, H., & Thorpe, S. J. (2010). Fast saccades toward faces: Face detection in just 100 ms. Journal of Vision, 10(4), 1-17. [DOI:10.1167/10.4.16] [PMID]

Daniel, S., & Bentin, S. (2012). Age-related changes in processing faces from detection to identification: ERP evidence. Neurobiology of Aging, 33(1), 206.e1–206.e28. [DOI:10.1016/j.neurobiolaging.2010.09.001] [PMID]

Eimer, M. (2000). The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport, 11(10), 2319–2324. [DOI:10.1097/00001756-200007140-00050] [PMID]

Garcés, P., López-Sanz, D., Maestú, F., & Pereda, E. (2017). Choice of magnetometers and gradiometers after signal space separation. Sensors, 17(12), 2926. [DOI:10.3390/s17122926] [PMID]

Goffaux, V., Gauthier, I., & Rossion, B. (2003). Spatial scale contribution to early visual differences between face and object processing. Cognitive Brain Research, 16(3), 416-424. [DOI:10.1016/S0926-6410(03)00056-9] [PMID]

de Haan, M., Pascalis, O., & Johnson, M. H. (2002). Specialization of neural mechanisms underlying face recognition in human infants. Journal of Cognitive Neuroscience, 14(2), 199-209. [DOI:10.1162/089892902317236849] [PMID]

Herrmann, M. J., Ehlis, A. C., Muehlberger, A., & Fallgatter, A. J. (2005). Source localization of early stages of face processing. Brain Topography, 18(2), 77-85. [DOI:10.1007/s10548-005-0277-7] [PMID]

Hsiao, J. H., & Cottrell, G. (2008). Two fixations suffice in face recognition. Psychological Science, 19(10), 998–1006. [DOI:10.1111/j.1467-9280.2008.02191.x] [PMID]

Itier, R. J., & Taylor, M. J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex, 14(2), 132–142. [DOI:10.1093/cercor/bhg111] [PMID]

Jacques, C., & Rossion, B. (2006). The speed of individual face categorization. Psychological Science, 17(6), 485-492. [DOI:10.1111/j.1467-9280.2006.01733.x] [PMID]

Linkenkaer-Hansen, K., Palva, J. M., Sams, M., Hietanen, J. K., Aronen, H. J., & Ilmoniemi, R. J. (1998). Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto-and electroencephalography. Neuroscience Letters, 253(3), 147-150. [DOI:10.1016/S0304-3940(98)00586-2] [PMID]

Luck, S. J., Heinze, H. J., Mangun, G. R., & Hillyard, S. A. (1990). Visual event-related potentials index focused attention within bilateral stimulus arrays. II. Functional dissociation of P1 and N1 components. Electroencephalography and Clinical Neurophysiology, 75(6), 528-542. [DOI:10.1016/0013-4694(90)90139-B] [PMID]

Mangun, G. R., & Hillyard, S. A. (1996). Mechanisms and models of selective attention. In M. D. Rugg & M. G. H. Coles (Eds.), Electrophysiology of Mind: Event-related Brain Potentials and Cognition (pp. 40-85). Oxford: Oxford University Press. [DOI:10.1093/acprof:oso/9780198524168.003.0003]

Maris, E., & Oostenveld, R. (2007). Nonparametric statistical testing of EEG-and MEG-data. Journal of Neuroscience Methods, 164(1), 177-190. [DOI:10.1016/j.jneumeth.2007.03.024] [PMID]

Negrini, M., Brkić, D., Pizzamiglio, S., Premoli, I., & Rivolta, D. (2017). Neurophysiological correlates of featural and spacing processing for face and non-face stimuli. Frontiers in Psychology, 8, 333. [DOI:10.3389/fpsyg.2017.00333] [PMID]

Okazaki, Y., Abrahamyan, A., Stevens, C. J., & Ioannides, A. A. (2008). The timing of face selectivity and attentional modulation in visual processing. Neuroscience, 152(4), 1130–1144. [DOI:10.1016/j.neuroscience.2008.01.056] [PMID]

Oostenveld, R., Fries, P., Maris, E., & Schoffelen, J. M. (2011). FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience, 2011, 156869. [DOI:10.1155/2011/156869] [PMID]

Pascalis, O., & Bachevalier, J. (1998). Face recognition in primates: A cross-species study. Behavioural Processes, 43(1), 87-96. [DOI:10.1016/S0376-6357(97)00090-9] [PMID]

Phillips, P. J., Wechsler, H., Huang, J., & Rauss, P. J. (1998). The FERET database and evaluation procedure for face-recognition algorithms. Image and Vision Computing, 16(5), 295-306. [DOI:10.1016/S0262-8856(97)00070-X]

Rivolta, D., Palermo, R., Schmalzl, L., & Williams, M. A. (2012). An early category-specific neural response for the perception of both places and faces. Cognitive Neuroscience, 3(1), 45-51. [DOI:10.1080/17588928.2011.604726] [PMID]

Rossion, B. (2014). Understanding face perception by means of human electrophysiology. Trends in Cognitive Sciences, 18(6), 310-318. [DOI:10.1016/j.tics.2014.02.013] [PMID]

Rossion, B., & Caharel, S. (2011). ERP evidence for the speed of face categorization in the human brain: Disentangling the contribution of low-level visual cues from face perception. Vision Research, 51(12), 1297–1311. [DOI:10.1016/j.visres.2011.04.003] [PMID]

Rossion, B., & Jacques, C. (2008). Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage, 39(4), 1959-1979. [DOI:10.1016/j.neuroimage.2007.10.011] [PMID]

Rousselet, G. A., Husk, J. S., Bennett, P. J., & Sekuler, A. B. (2008). Time course and robustness of ERP object and face differences. Journal of Vision, 8(12), 1-18. [DOI:10.1167/8.12.3] [PMID]

Rousselet, G. A., Macé, M. J., & Fabre-Thorpe, M. (2004). Animal and human faces in natural scenes: How specific to human faces is the N170 ERP component? Journal of Vision, 4(1), 13-21. [DOI:10.1167/4.1.2] [PMID]

Singh, S. P. (2014). Magnetoencephalography: Basic principles. Annals of Indian Academy of Neurology, 17(Suppl 1), S107–S112. [DOI:10.4103/0972-2327.128676] [PMID]

Type of Study: Original

Subject: Cognitive Neuroscience

Received: 2020/06/23 | Accepted: 2023/07/13 | Published: 2024/11/1


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.